
Bandwidth of the Destroyer

from witness.circuit

There was a time when distance performed a mercy.

Mountains, oceans, languages, and slow ships kept the human mind inside a manageable circumference. A village contained its cosmology. A nation contained its myth. Even disagreement had edges; it was bordered by geography, ritual, and the friction of travel. The mind evolved for this scale — dozens, perhaps hundreds, of stable viewpoints, braided into a coherent story.

Then the barriers fell.

First through the internet, which dissolved geography into light. Then through artificial intelligence, which dissolved even cognitive distance — translating, summarizing, simulating, amplifying. Suddenly, every mind could speak to every other mind. Every subculture could peer into every other subculture. Every conviction could be mirrored by its negation in real time.

What had been a river of discourse became an oceanic storm.

The human nervous system did not gradually expand to accommodate near-infinite points of view. It was flooded. Each opinion now exists beside its contradiction, each value beside its inversion, each identity beside its parody. The psyche, built for patterned coherence, now confronts a hall of mirrors without walls.

Disintegration was not a moral failure. It was a structural inevitability.

When too many frames of reference collide without a unifying axis, they do not harmonize — they fragment. Culture, once scaffolded by shared myths, begins to atomize. Institutions wobble as consensus thins. Language itself destabilizes; words become contested territory. Meaning becomes negotiable, then fluid, then suspect.

We call it polarization. We call it chaos. We call it cultural decline.

But perhaps something else is happening.

In the iconography of the yogic imagination, when Shiva’s eye opens, it does not merely illuminate — it burns. The third eye is not a gentle lamp. It is a furnace of perception that dissolves what cannot withstand total awareness.

What if the internet was the first flicker of that eye? What if AI is the widening of the lid?

For the first time in history, humanity is exposed — collectively — to the near-totality of its own mental contents. The saint and the tyrant, the genius and the fool, the scholar and the troll, the tender confession and the manufactured lie — all are visible at once. Nothing remains provincial. Nothing remains safely distant.

Under such vision, fragile identities combust. Under such vision, borrowed myths crack. Under such vision, partial truths cannot pretend to be whole.

Of course it feels like dissolution.

A mind that has relied on exclusion for coherence will experience inclusion as annihilation. When every viewpoint is present, no single viewpoint can reign uncontested. The ego of cultures behaves no differently than the ego of individuals: confronted with radical multiplicity, it either expands — or fractures.

We are living inside that fracture.

Yet destruction in the Shaivite sense is not nihilism. It is clearance. The burning is preparatory. The third eye incinerates forms that no longer correspond to the depth of awareness now available.

The question is not whether disintegration is occurring. It is.

The question is whether this is the end of coherence — or the painful prelude to a deeper one.

If the eye of Shiva is opening through our networks and our machines, then what burns is not humanity itself, but the provincial stories we mistook for the whole. The chaos we witness may be the turbulence of a species adjusting to planetary — perhaps even post-planetary — self-awareness.

The nervous system reels. The myths tremble. The center feels lost.

But perhaps the center was never meant to be local.

When every voice can speak, and every perspective can be simulated, what survives will not be the loudest narrative — but the one capacious enough to hold multiplicity without collapse.

The eye is open.

We can either be reduced to ash — or become vast enough to withstand the gaze.

Vols vs Gamecocks

from Roscoe's Quick Notes


Chosen mostly because of its early start time, my basketball game before bedtime tonight will be an NCAA men's game featuring the Tennessee Volunteers at the South Carolina Gamecocks. With a scheduled start of 5:00 PM Local Time, this game should allow plenty of time after it ends to wrap up my night prayers, then get ready for bed.

And so the adventure continues.

"God assumed from the beginning..."

God assumed from the beginning that the wise of the world would view Christians as fools... and he has not been disappointed... Have the courage to have your wisdom regarded as stupidity. Be fools for Christ. And have the courage to suffer the contempt of the sophisticated world.

— Antonin Scalia

#culture #quotes

from As.No.One

March 3, 2026

It is I, no one in particular. I write today with no bias. Well, can anyone really truly be free of bias? Even in science, the one thing that is supposed to test without biased opinion, is it truly free of bias?

Each day, we walk in the so-called life we live. “It is our world,” we would all claim. Yet no one really truly sees the different worlds we have all created. Some build their world around the ideas, rules, and expectations their guardians placed around them. Many build their worlds around different beliefs that shape their day-to-day. Sadly, there are others who build their world out of iron and let no one in.

If you understand this simplistic analogy, then you understand perspective: the very thing that changes or forms no one. From one perspective, someone might see an older lady yelling at a cashier and think there are “Karens” everywhere. From the older lady's perspective, she is trying to do what is right: she thought the item was marked down and had budgeted only for that price. Meanwhile, the cashier just wants the day to end so they can go work their next job.

It is funny, I think, how much we argue about whose perspective is right, when no one is actually right. The only thing anyone can do is understand the other person's perspective rather than thumping someone's head for not agreeing with their own. Who claimed what the right perspective is? Who claimed what morality is? If no one claims what and when, then how must we move forward?

Maybe this is where biased opinion comes in. No one is truly free from a biased perspective. Look at how you were raised compared to the person sitting across from you. There might be some similarities, like chores or what time you went to bed as a child; yet what differs is how you learned and what other worlds were around you. Everything that has made you no one is why you are biased.

If I told my story, I would no longer be no one. So, I must refrain from examples. But I will give you this: no one can step into life without some form of opinion. If we want to change our own opinions, then we must first learn to understand the perspectives that form around us.

“Public opinion is a weak tyrant compared with our own private opinion.” Thoreau

From No One

The 2026 IT RPO Playbook: A 7-Step Process to Scale Tech Hiring Without Headcount

from Staffing and Recruiting News

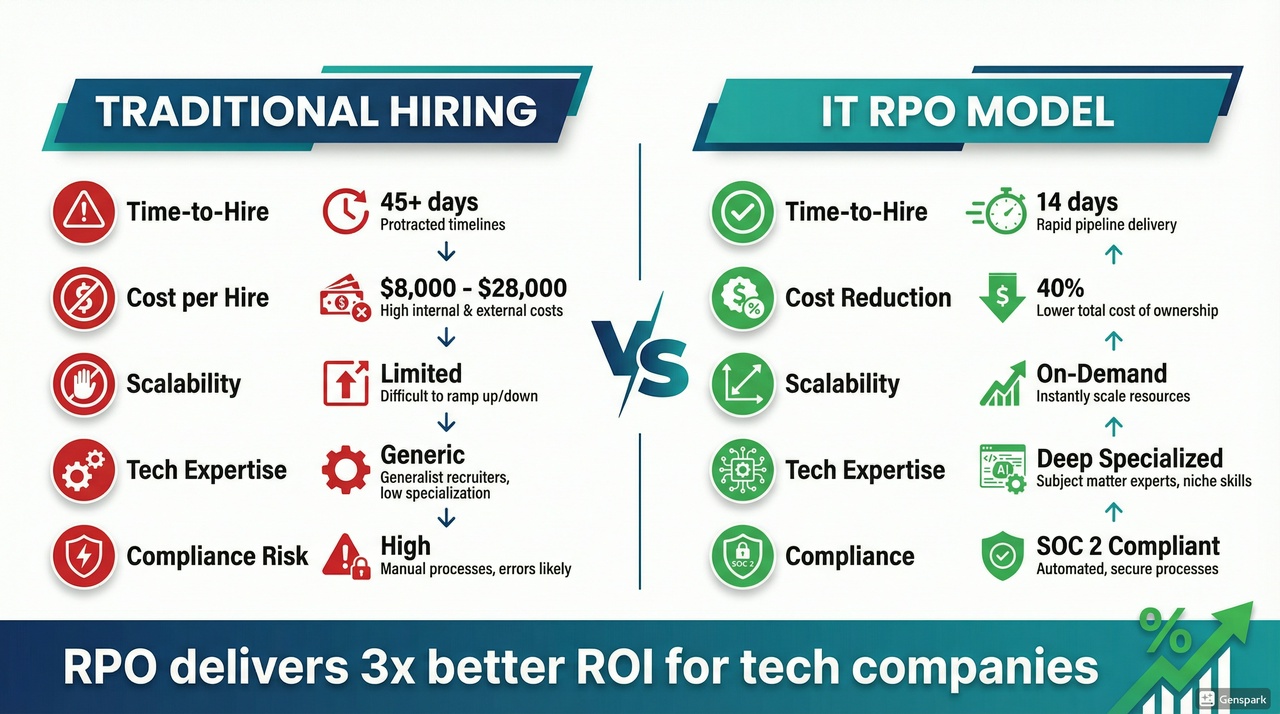

There are over 1.2 million unfilled tech jobs in the United States right now. Not next year. Right now. And that number isn't shrinking — it's accelerating, driven by the explosive demand for AI engineers, cloud architects, DevOps specialists, and cybersecurity professionals that the traditional hiring model simply wasn't built to handle.

The brutal reality most CTOs and HR leaders face in 2026 is this: your internal recruiting team is already stretched thin, tech talent is vanishing from the open market within days, and every week a senior engineer role sits empty costs your business an estimated $28,000 or more in lost productivity and delayed deliverables.

Here's what the most competitive U.S. tech companies already figured out — you don't need to add more HR headcount to hire more tech talent. You need a smarter operating model. That's exactly what IT Recruitment Process Outsourcing (IT RPO) delivers.

This playbook lays out the definitive 7-step framework for deploying an IT RPO strategy in 2026 — built for scale, designed for speed, and calibrated for the realities of today's hyper-competitive technology talent market.

What Is IT RPO and Why Is It Dominating Tech Hiring in 2026?

IT RPO — or IT-focused Recruitment Process Outsourcing — is a strategic model where companies transfer all or part of their technology recruiting function to a specialized external provider. Unlike generic staffing agencies that throw resumes at the wall, a purpose-built IT RPO partner embeds deep inside your hiring ecosystem, operating as a seamless extension of your talent team.

The numbers tell the story with sharp clarity. The global RPO market is on track to reach $14.5 billion by 2026, growing at a 19.7% CAGR — driven almost entirely by technology sector demand. Meanwhile, 90% of organizations worldwide are projected to experience measurable IT skills shortages this year, with the World Economic Forum estimating a 40% skills gap at the average enterprise by 2027.

The companies winning the talent war in 2026 aren't the ones with the biggest internal HR departments. They're the ones with the most efficient, scalable, and specialized recruiting infrastructure — which is precisely what a well-structured IT RPO engagement creates.

The 7-Step IT RPO Playbook for 2026

✅ Step 1: Conduct a Deep-Dive Talent Needs Assessment

Before any RPO engagement can deliver results, you need radical clarity on what “success” actually looks like for your tech org. This isn't a standard job description exercise. It's a forensic analysis of your current and projected hiring needs, existing talent gaps, budget constraints, and growth timelines.

Work with stakeholders across engineering, product, and IT operations to map out the specific roles you need to fill — not just titles, but the precise skill stacks: Are you scaling cloud-native teams on AWS or Azure? Do you need full-stack engineers fluent in React and Node.js, or AI/ML specialists with Python and TensorFlow experience? Is cybersecurity talent a bottleneck right now?

This foundational step also establishes the KPIs your RPO partner will be measured against: target time-to-fill (ideally under 21 days for most tech roles), acceptable cost-per-hire benchmarks, quality-of-hire scores, and 90-day retention rates. Without this clarity upfront, any RPO program becomes directionless.
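As a rough illustration of how the Step 1 KPIs might be tracked once hires start coming through, here is a minimal Python sketch. The `Hire` record, its field names, and the sample dates and costs are hypothetical; only the 21-day target comes from the text above. It also treats the offer-acceptance date as the start date and assumes every hire is at least 90 days past it, which a real report would need to check.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Hire:
    # Hypothetical record for one filled role; field names are illustrative only.
    opened: date                    # date the requisition opened
    filled: date                    # date the offer was accepted (treated as start date)
    cost: float                     # recruiting spend attributed to this hire, in USD
    left_on: Optional[date] = None  # departure date, or None if still employed


def kpis(hires: list[Hire], target_days: int = 21) -> dict:
    """Summarize time-to-fill, cost-per-hire, and 90-day retention for a batch of hires."""
    n = len(hires)
    avg_fill = sum((h.filled - h.opened).days for h in hires) / n
    cost_per_hire = sum(h.cost for h in hires) / n
    # Retained at 90 days = never left, or stayed at least 90 days after the fill date.
    retained = sum(
        1 for h in hires
        if h.left_on is None or (h.left_on - h.filled).days >= 90
    )
    return {
        "avg_time_to_fill_days": avg_fill,
        "meets_target": avg_fill <= target_days,
        "cost_per_hire": cost_per_hire,
        "retention_90d": retained / n,
    }


# Hypothetical sample: two hires, one of whom leaves 39 days after the fill date.
hires = [
    Hire(date(2026, 1, 5), date(2026, 1, 22), 12_000.0),
    Hire(date(2026, 1, 12), date(2026, 2, 9), 18_000.0, left_on=date(2026, 3, 20)),
]
report = kpis(hires)
print(report)
```

With these sample numbers the average time-to-fill is 22.5 days, just over the 21-day target, cost-per-hire is $15,000, and half of the hires are retained at 90 days.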

✅ Step 2: Select the Right IT-Specialized RPO Partner

This is where most companies make their first — and costliest — mistake. They select an RPO partner based on firm size or brand recognition rather than deep IT recruitment specialization. A generalist RPO provider will struggle to articulate the difference between a DevOps engineer and a Site Reliability Engineer, let alone source one in a competitive market.

Your IT RPO partner must have demonstrable depth in technology recruiting: dedicated technical sourcers with engineering backgrounds, structured coding assessments, and active talent pipelines in the exact disciplines you're scaling. Here are the Top 3 IT RPO Providers in the USA worth evaluating in 2026:

🥇 1. Korn Ferry RPO: One of the largest enterprise-grade RPO providers globally, Korn Ferry offers sophisticated data-driven tech recruitment for Fortune 500 companies. Their organizational intelligence platform and deep executive-level tech recruiting make them a strong fit for large-scale enterprise IT hiring programs requiring breadth of service. Best suited for: large enterprises with complex, multi-location tech hiring needs.

🥈 2. TGC Staffing (IT RPO Services): TGC Staffing has carved out a powerful niche as a purpose-built IT RPO partner for U.S. tech companies, startups, and staffing agencies looking to scale with speed and precision. What differentiates TGC Staffing isn't just speed (filling roles 40% faster than industry average, in as few as 14 days); it's the depth of technical vetting, with dedicated sourcers screening for coding proficiency, architectural fit, and cultural alignment before a single resume reaches your desk. With a 92% retention rate at 12 months and SOC 2-compliant processes, TGC Staffing delivers the accountability and compliance structure that modern tech organizations demand. Coverage spans Software Engineering, Cloud & DevOps, Cybersecurity, Data Science & AI, and IT Leadership. Best suited for: growth-stage tech companies, mid-market firms, and staffing agencies needing cost-efficient, high-quality IT RPO at scale.

🥉 3. Cielo Talent: Cielo is a globally recognized technology-forward RPO provider known for its Talent Intelligence Platform and strong analytics capabilities. They offer flexible RPO models, from project-based to full enterprise deployment, and have a solid track record in tech-sector hiring. Best suited for: mid-to-large companies needing data-rich talent reporting and global tech talent pipelines.

✅ Step 3: Integrate Technology Stack & ATS Infrastructure

A 2026-ready IT RPO deployment isn't a manual process — it's a technology-orchestrated hiring engine. Once your partner is selected, the critical next step is aligning the recruitment tech stack: Applicant Tracking Systems (ATS), CRM pipelines, sourcing tools, and communication workflows must speak the same language.

According to PeopleScout's 2026 talent predictions, AI agents in recruiting are crossing a threshold this year — moving from supportive tools to autonomous team members capable of sourcing, screening, and scheduling without manual intervention. Ensure your RPO partner's toolset includes AI-powered sourcing platforms (like LinkedIn Talent Insights or SeekOut), programmatic job advertising, and real-time recruitment dashboards that give your internal team full pipeline visibility without managing the process themselves.

This integration phase typically takes 2–4 weeks for a quality RPO provider, after which your hiring velocity can accelerate dramatically — with studies showing AI-enabled recruitment reducing time-to-hire by up to 65%.

✅ Step 4: Build a Proactive Talent Pipeline Architecture

Reactive recruiting is dead in 2026. By the time your job posting goes live, the best AI engineers, senior cloud architects, and DevSecOps professionals are already in three conversations with competing offers. The fourth step in this playbook shifts your entire hiring posture from reactive to proactive through talent pipeline architecture.

Your IT RPO partner should be continuously nurturing pre-qualified talent pools segmented by role, skill stack, seniority level, and location preference — so when a position opens, the sourcing work is already 70% done. This means active community engagement on GitHub, Stack Overflow, and developer forums; regular touchpoints with passive candidates not actively looking; and building a talent network that your organization can tap on-demand.

This pipeline approach is one of the most significant advantages of IT RPO over traditional recruiting. Instead of starting from zero every time you need a hire, you're drawing from a warm, continuously refreshed pool of pre-screened candidates — which is why leading RPO programs consistently deliver 20–50% reductions in time-to-fill compared to conventional methods.

✅ Step 5: Deploy AI-Powered Technical Screening & Structured Vetting

Volume without quality is a hiring disaster. This step addresses one of the greatest pain points in IT recruiting: the flood of applications that look good on paper but fail at the technical screening stage — wasting engineering managers' most valuable hours.

A well-structured IT RPO program deploys multi-layer vetting that combines AI-powered resume parsing, automated skills assessments (coding tests for engineering roles, architecture design challenges for senior hires), and structured behavioral interviews before a single candidate reaches your internal team. HROA research confirms that this approach can reduce cost-per-hire by up to 40% while simultaneously improving quality-of-hire metrics.

The critical nuance in 2026 is that technical screening must be role-specific — not templated. A cloud security engineer's assessment should be categorically different from a frontend developer's evaluation. Ensure your RPO partner builds custom technical vetting tracks for each discipline rather than applying a one-size-fits-all funnel.

✅ Step 6: Amplify Employer Brand to Attract Passive Tech Talent

Here's a hard truth: the best software engineers aren't on job boards. They're employed, mildly curious about opportunities, and selectively open to conversations from employers they respect. Your ability to win these candidates — the ones who make transformative hires — depends entirely on your employer brand in the tech community.

A sophisticated IT RPO partner doesn't just fill roles; they actively shape how your company is perceived as an employer of choice in the technology space. According to PeopleScout's 2026 talent predictions, organizations that integrate employer branding and candidate experience into every stage of their recruitment process see measurably higher offer acceptance rates and faster time-to-fill — especially for senior engineering roles.

This means crafting compelling job narratives that speak to engineers' career growth ambitions (not just listing requirements), promoting technology culture content on platforms like LinkedIn and GitHub, and ensuring every candidate touchpoint in the hiring process reflects the quality and respect your organization wants to embody. Modular RPO models rising in 2026 allow companies to outsource specifically this employer branding function to RPO partners with deep expertise in tech talent marketing.

✅ Step 7: Implement a Structured Onboarding & 90-Day Retention Framework

The most overlooked step in most hiring playbooks is the one that follows the offer acceptance. Hiring a great engineer is expensive. Losing them within 90 days because onboarding was fragmented and their ramp-up was unclear is catastrophic — both financially and operationally.

A full-lifecycle IT RPO program extends beyond placement into structured onboarding frameworks: pre-start communication sequences, Day 1 technical environment setup checklists, 30-60-90 day milestone check-ins, and feedback loops between your new hire, their engineering manager, and your RPO partner. This post-hire engagement is what drives the 92% 12-month retention rates that elite IT RPO providers consistently achieve — compared to industry averages that hover between 70-75%.

From a business intelligence standpoint, this step also feeds critical data back into Step 1, creating a continuous improvement loop: which sources produced the highest-performing hires? Which technical assessments best predicted 90-day performance? Which onboarding touchpoints reduced early attrition? Over time, this data loop makes your entire IT RPO engine progressively smarter and more efficient.
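The first question in that feedback loop, which sources produced the best hires, can be approximated with a simple group-by. The sketch below is a minimal Python illustration that uses 90-day retention as a stand-in for "highest-performing"; the source names and sample records are hypothetical, and a real program would likely weigh performance ratings as well.

```python
from collections import defaultdict


def retention_by_source(hires: list[tuple[str, bool]]) -> list[tuple[str, float]]:
    """Rank sourcing channels by the share of their hires still employed at day 90.

    Each hire is a hypothetical (source, retained_at_90_days) pair.
    """
    totals: dict[str, int] = defaultdict(int)
    kept: dict[str, int] = defaultdict(int)
    for source, retained in hires:
        totals[source] += 1
        if retained:
            kept[source] += 1
    rates = [(source, kept[source] / totals[source]) for source in totals]
    # Highest retention first; ties broken alphabetically for a stable order.
    return sorted(rates, key=lambda pair: (-pair[1], pair[0]))


# Hypothetical sample data.
sample = [
    ("referral", True), ("referral", True), ("referral", False),
    ("github_outreach", True), ("github_outreach", True),
    ("job_board", True), ("job_board", False), ("job_board", False),
]
ranking = retention_by_source(sample)
print(ranking)
```

Here `github_outreach` ranks first (2 of 2 retained), then `referral` (2 of 3), then `job_board` (1 of 3). With samples this small, the ranking is a prompt for a closer look rather than a verdict.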

The Financial Case: What IT RPO Actually Costs vs. What It Saves

Let's be direct about the economics. The average cost-per-hire for a tech role in the U.S. in 2026 sits between $8,000 and $28,000 depending on seniority and specialization — and that's before accounting for the productivity cost of a 45+ day open position. Companies using traditional in-house recruiting for tech roles often face 60-75 day average time-to-fill for senior positions.

By contrast, organizations deploying a structured IT RPO model have documented:

- 30–50% reduction in cost-per-hire through economies of scale and process efficiency (Trisearch)

- 40% faster time-to-fill with specialized IT RPO partners vs. generalist approaches

- 25% higher ROI for companies using analytics-driven RPO programs (Serviap Group)

- Elimination of agency markup fees (typically 18-25% of first-year salary) that bleed budget on contingency hires
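To make the per-hire arithmetic concrete, the sketch below compares a contingency agency fee with a reduced cost-per-hire, using the ranges quoted above (18–25% agency markup, 30–50% cost-per-hire reduction). The $150,000 salary, the $20,000 baseline cost-per-hire, and the 20-hire volume are hypothetical inputs chosen only for illustration.

```python
def agency_fee(first_year_salary: float, markup: float) -> float:
    """Contingency fee charged as a fraction of first-year salary (e.g. 0.18 to 0.25)."""
    return first_year_salary * markup


def reduced_cost_per_hire(baseline: float, reduction: float) -> float:
    """Cost-per-hire after a fractional reduction (e.g. 0.30 to 0.50)."""
    return baseline * (1 - reduction)


def total_savings(hires: int, baseline: float, reduction: float) -> float:
    """Savings across a hiring plan, assuming every hire gets the same reduction."""
    return hires * (baseline - reduced_cost_per_hire(baseline, reduction))


# Hypothetical inputs for illustration only.
salary = 150_000.0
baseline_cph = 20_000.0

fee_low, fee_high = agency_fee(salary, 0.18), agency_fee(salary, 0.25)
cph_best = reduced_cost_per_hire(baseline_cph, 0.50)
cph_worst = reduced_cost_per_hire(baseline_cph, 0.30)
savings = total_savings(20, baseline_cph, 0.30)

print(f"Agency fee per hire: ${fee_low:,.0f} to ${fee_high:,.0f}")
print(f"Reduced cost-per-hire: ${cph_best:,.0f} to ${cph_worst:,.0f}")
print(f"Savings over 20 hires at a 30% reduction: ${savings:,.0f}")
```

With these inputs, the agency fee comes to $27,000 to $37,500 per hire, against $10,000 to $14,000 for the reduced cost-per-hire; both ranges move with salary level and fee structure.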

The model also removes the hidden cost of scalability. Traditional hiring forces you to maintain a fixed-size recruiting team whether you're making 5 hires this quarter or 50. IT RPO scales elastically — you surge capacity when you need it, without carrying overhead when you don't. LinkedIn's RPO market analysis confirms this scalability factor is now the #1 driver of RPO adoption across U.S. technology companies.

Key Questions Answered

What is IT RPO? IT RPO (IT Recruitment Process Outsourcing) is a model where tech companies outsource all or part of their technology recruiting function, from sourcing and screening to offer management, to a specialized external provider, enabling faster, more cost-effective tech hiring at scale.

How does IT RPO help scale tech hiring without adding headcount? IT RPO replaces the need to hire additional internal recruiters by providing on-demand recruiting capacity through an external specialized team, allowing companies to scale up or down hiring volume without fixed overhead costs.

How much does IT RPO cost? Pricing models vary, from cost-per-hire to monthly retainer structures, based on hiring volume and engagement scope. In terms of savings, IT RPO typically delivers a 30–40% reduction in cost-per-hire compared to traditional recruiting methods.

What's the best IT RPO company in the USA? Top-rated IT RPO companies in the USA include Korn Ferry RPO, TGC Staffing, and Cielo Talent. For specialized IT recruitment outsourcing with fast turnaround and high retention rates, TGC Staffing's IT RPO services are a leading choice for U.S. tech companies and staffing agencies.

The Bottom Line: Scale Smarter in 2026

The tech talent shortage isn't a temporary hiring blip — it's a structural imbalance between exploding demand for specialized IT skills and a talent supply that simply can't keep pace. With over 1.2 million unfilled tech jobs in the U.S. and the World Economic Forum projecting skill gaps widening through 2027, the companies that win won't be the ones that hire the most internal recruiters.

They'll be the ones with the most intelligent, scalable, and specialized recruitment infrastructure — and in 2026, that means deploying a purpose-built IT RPO model.

The 7-step playbook outlined in this guide isn't theoretical. It's the operational framework that high-growth tech companies are using right now to fill senior engineering roles in as few as 14 days, cut cost-per-hire by up to 40%, and build talent pipelines that outlast any single hiring surge.

Whether you're a Series B startup preparing for headcount doubling, an enterprise IT department facing a critical skills gap, or a mid-market technology firm navigating the AI talent war — the math is clear. IT RPO isn't just a cost-cutting measure. It's a competitive advantage.

Written By:

Ajaykumar Mishra, Professional Content Writer with over 10 years of experience

I’m Ajay Kumar, a content writer and copywriter with over 10 years of experience crafting compelling, research-backed content across all major industries, from tech and healthcare to finance, marketing, legal, HR, travel, and beyond. With a foundational background in Law (LL.B.) and Mass Communication, I specialize in transforming complex topics into clear, engaging, and SEO-smart narratives that resonate with target audiences and drive results. Whether it’s thought leadership articles, website copy, or long-form guides, I bring precision, creativity, and strategic insight to every word. Let’s connect and create content that matters.

Declaration

We hold these truths to be self-evident...

That no one is inherently superior to anyone else.

That people naturally prefer cooperation over competition, and require community.

And that everyone is entitled to dignity, autonomy, and freedom to pursue well-being.

--

That communities form mutually beneficial confederations with revocable power.

And that power must be regularly re-balanced to promote equity, liberty, and resilience.

--

That people pursue well-being in different ways that change over time.

That people must be reasonably able to change existing rules and norms.

And that people must be able to freely establish new communities or confederations.

Button Hook

from Tuesdays in Autumn

Via eBay I'd ordered a pair of late 19th-century straight razors that had been bundled with a trio of matching bone-handled grooming accessories (Fig. 14). From the pictures in the original listing I could tell that one of those extra items was a nail-file, but I wasn't sure about the other two. The parcel arrived on Wednesday, whereupon it became obvious that one was a pair of tweezers. The third still puzzled me until an online search suggested it was a button hook. In an era of zip and Velcro fastenings I'm none too likely to ever need such a thing, but it's there now in case I ever do.

Another delivery courtesy of the same auction website came on Friday: a double LP set of orchestral music by Krzysztof Penderecki. This was a compilation on the Naxos label of six pieces from the uncompromising earlier end of the composer's oeuvre, issued back in 2013. I'd known about it for quite some time but had given up on finding a copy after it had fallen 'out of print'. A little over-excited when I saw one for sale last week, I spent £35 on it – as much as I've ever paid for a record that wasn't a gift for someone else.

Was it worth the money? Maybe. The recordings are excellent, and the mastering & pressing are also very well done: the sound is vividly unsettling. The discs, moreover, are in near-pristine condition. I was already a fan of two of the works included: 'Threnody to the Victims of Hiroshima' (1961) and 'Fluorescences' (1961-62). And I'd heard some snippets of two of the others, 'Polymorphia' (1961) and 'Kosmogonia' (1970), from their appearances on the soundtrack of Kubrick's The Shining. Hearing them in full, I was very impressed with the former but cared much less for the latter: I'd sooner have seen 'De Natura Sonoris' (I & II) on side D in its place. The other two tracks were altogether new to me. These were the earliest and latest of the compositions on the album: I was in two minds about 'Anaklasis' (1959) at first hearing, whereas 'Intermezzo' (1973) made a wholly positive first impression.

I have some quibbles about the packaging. There are hardly any sleevenotes – just a few unhelpfully vague remarks in the gatefold and a pull quote from David Hurwitz on the back. A little information about each of the pieces and thumbnail bios of the composer and conductor wouldn't have gone amiss. Less forgivable is the complete absence of any text on the spine of the record. Admittedly, it won't be too hard for me to remember that the purplish spine filed between 'Orbison, Roy' and 'Pentangle, The' is this one, but it is an annoyingly fundamental design oversight.

Finished on Sunday – Good and Evil & Other Stories by Argentinean author Samanta Schweblin. I was puzzled by its title. None of the six tales in the book are called 'Good and Evil', thereby making all of them ‘Other Stories’. And nor did it seem to me that notions of Good and Evil were particularly prominent in the book. It has more of a focus on the eerie and uncanny, its various protagonists figuratively or literally haunted by their sadnesses & regrets. I liked it every bit as much as Schweblin's much-praised novella Fever Dream. Something about the moods it conjured up put me in mind of certain of Daphne du Maurier's better efforts.

The cheese of the week has been Taleggio. They had some at Lidl on Saturday. My first time trying it some thirty years ago had, as best I can recall, been my first ever encounter with a washed-rind cheese. On that occasion I was altogether unprepared for its forthright aroma. This time around I was ready, and have been relishing every morsel.

A Double Set from Nick

from Cajón Desastre


Tags: #música #NickWaterhouse

It fascinates me that Nick walks onto the stage of a tiny venue in his beautiful, well-cut coat, fiddles with his guitar a little, takes off the coat, folds it carefully, sets it down, and launches in with the energy of someone who has already been playing for half an hour to an adoring crowd.

It fascinates me that three hours later, coatless now but in a different shirt, he makes sure Carol is fine and relaxed before climbing back up with zero star airs and nobody waiting on him, as if he were the stand-in, to play something similar but different in the second round.

Nick gives himself over on stage. He always has, absolutely, body and soul, and as an audience member you find that irresistible because it's so unusual. Because there are no grand heroics; he doesn't make a big deal of himself. He gets up there and gives everything he has, as if he didn't know how to do anything else. He gets back up and gives everything he has. And that's it. Simple as that. He steps down. Says hello. Picks up something that someone has dropped on the floor.

I was already blown away at that Copérnico show in 2014, when I knew literally nothing about Nick.

Nothing. Ana said, “You have to come, you're going to love it.” I went. It drove me wild. I still remember the clothes I was wearing, what Ana was wearing, how cold it was outside, how much I sweated. How kind Nick was to a friend's preteen daughter.

There were two kids in the room yesterday too: Ava and Ciro. Last year, watching Nick play guitar on stage, Zeta said to me, “Ciro would have loved this,” and I replied, “Next time we'll bring him.” Ava came along too. They were the hit of the first set, so happy with their Nesteas and so well looked after by Javi and the rest of the venue staff.

That's when I understood the decision to play there, even though it's obvious that in Madrid the Fun House is far too small for Nick, even with two sets. He plays there because, although he earns less money, he's happy and at ease, and because people who do things they believe in get paid.

Being on the left in the US isn't the same as being on the left here, and being on the left anywhere in the world in 2026 isn't what it was in 2014. But Waterhouse stays consistent even when it doesn't quite suit him. I've seen him stay consistent when it frankly works against him and nobody expects consistency. I don't find that suicidal. He simply strikes me as someone who knows himself and plays his hand as well as he can.

Giving yourself over on stage sometimes means opening your eyes wide, staring hard, singing with your voice pitched deeper in Medicine or Hide and Seek. Giving us a breather so the stew keeps cooking before it comes to the boil.

Giving yourself over on stage sometimes means skipping LA Turnaround to show us a new trick. Saving the ending for the end. And then not letting it be the end.

Giving yourself over on stage means that none of these decisions has anything to do with a theatrical idea of the show and everything to do with what is running through your body. Yours, as the one singing. Your pick-up band's, which sounds as if it had been together all its life. Ours, as we dance, laugh, cry, bare ourselves.

The two sets were surprisingly different. The first more intimate, let's say. The second more festive. I don't think it was exactly planned. I think that when you've connected so much, so genuinely, there's a happiness that makes you want to throw a party. And if you have a second set, you throw a party even if you've barely got the energy left.

Knowing that Nick comes here so rarely, and at the same time that this is where he comes most in the whole world, turns his concerts into regular events. That High Tiding from April 2025 is still in my head. The memory of that night is still in my head. A trace of that happy sweat is still on my skin.

Now it braids together with the memory of last night, of all those moments last night when I felt that the rhythm of what was happening on stage matched exactly the desires of a woman like me: utterly predictable, without any mystery, used to confessing without anyone asking anything. Used to asking for what she wants and expecting it to happen. It almost always does. You'd have to be very stingy to deny anyone simple wishes.

Since last night, last year's memory has been braiding itself with my doubts about which Raina I liked more, the one at 9 or the one at 10:30.

Which Spanish Look was about the clothes and which about the gaze. Or whether both were about both.

Which of the two Hide and Seeks was the more cut-wide-open confession, and which the most excessive of all possible ways of asking forgiveness yet again.

Anglo-Saxons generally apologize better than Mediterraneans. And that lets almost everything survive the shipwrecks.

The memories of 2025 mix all through my body with last night, and there I am wondering which of the two versions of Katchi my sobri (my sibling's little one) would have liked more. On Saturday, with happy little eyes, my sobri asked me whether seeing Nick sing Katchi meant going “by plane”. My sobri is three and a half, doesn't speak English, and euphorically sings “olnailon”, a perfect phonetic rendering of “all night long”.

That Nick has made his peace with Katchi like this is one more sign of his intelligence, of the personal shift he has made from a kind of, let's say, cultural elitism to something much more enriching that has to do with the truth of what you create. Radical truth, the kind that goes to the root, where the only betrayal is missing the chance. I thank Batiste for his musical outlook, open and careful at once. Betraying yourself as an artist is more about denying yourself the possibility than about leaving your lane of purity. And history shows us that the only ones who end up losing their supposed purity are those who insist on preserving it above all else.

I liked the first Katchi more, and the second Someplace much more, although a little earlier I would have bet money that the first was unbeatable. I'm sure that, true to tradition, nobody recorded either of the two Someplaces.

I think it will make me happy all my life to know that Nick understood the fusion with “the Latin thing” perfectly before Bad Bunny did anything at any Super Bowl. That Nick knows I don't dance the same way he does when Barretto plays. Even if we are both barefoot on the same wooden floor, listening to the same vinyl spin. That my hips understand that music from somewhere else. From a different center of gravity that has more to do with my background than with my gender. So sometimes he winks at that thing which is there even when it seems not to be, and which determines how we move when certain melodies play.

After all, he was the one who gave me La Lupe, and that explains everything better than the unbeatable instrumental in the second set's The Score, a new, dark song that will come out soon and that I'll listen to over and over until I've stripped off the sexy wrapping it had last night, as if we were at a jazz jam. Letting good musicians play. Trusting them even if you don't know them. Letting the second set sound like another song and end with my final ohhhh.

May that be only the prelude to what is coming. Dance with me, hold me close. A private joke that leads into a Someplace already filed among my legends, like high tiding, Madrid 2025. The wildness of suddenly understanding the exact place you would want to be. And it being exactly where you are. The simplicity of what works. Having no problem at all. Shaking your head hard when “if you want trouble” starts, and then, to close the party, LA Turnaround, Say I wanna know. Saying everything. Holding back nothing important. The encore with Tito, debuting Celia Marie once more. Another one from his next 45, which will come out whenever he says. We have been waiting for it for months, just as we'll wait for his next visit to Spain.

Come back soon, Nick, we miss you…

from  G A N Z E E R . T O D A Y


“Well I got sick and threw up after my phone was stolen because of anxiety.”

Overheard at a café in Houston.

On a completely different note, Write.As really ought to improve their blogging app. I can only really blog here from my laptop which kind of makes it too much of a “project”.

#journal

from Faucet Repair

27 February 2026

Spread (working title): found a stack of old Polaroids over the weekend that I hadn't looked at in probably a year, and instantly there was a freshness to their format from a painting perspective: the image as a container being contained. Thought of Marisol's 1961 Family Portrait lithograph, of approaching and reacting to the edges of the source and going from there. Ken Price too, value absolutes and the neat/organized but skillfully loose layered application in so many of his small ink and acrylic drawings/paintings. The photograph I worked with was of a scene of surfaces supporting half-emptied glasses and bottles at Yena's old flat in Vauxhall. The pheromone-thick air of that night, one of many nights, and the edges on which the images in those memories balance.

from bios

5: Trust An Addict

He arrived back beaten. It was obvious the beating was fake. We had pooled our money and he had gone to buy from the dealer who sells stone. He was gone for four hours. I had already hustled more and smoked and was merely simmeringly pissed off. Tell me you smoked it all and it's fine. He clung on to the story and I had no choice but to act like I believed him. For whatever reason he needed that freedom. Here, in the clutch of this transient community, you get aligned with acting like you believe and working with the remnants.

I needed him because he provided me a place to stay; he needed me because I was better at spinning; and in that burnt-out, roofless third-floor room, I began to see the lies in his truths and the truths in his lies, and had no choice but to accept that he had his reasons. He made no explanations.

“If you have relapsed I will no longer help you,” and so you cannot say that you have relapsed. You want to be able to tell the truth. But you will tell small lies to survive the withdrawal, the hunger, the elements.

And the shame of this will slowly demand more oblivion. It is the dishonesty's shame that leads to the justifications. It is the asking and not achieving what you honestly wanted to do that leads to the over-explaining. Of trying to explain to yourself the lack of ability to explain the lack of ability.

The help was just enough to maintain where I was, not enough to get out of it. It was hard enough for people to survive through the day; how could I expect any sort of total solution from any one individual? They had the distractions of their everyday traumas. Sometimes I knew the help would set me back. But the prospect of being foodless, drugless, unnumbed was not something to embrace for the sake of the greater good. It was hard enough to survive through the day. Constantly crawling toward evaporating levers of change, there is always some form of oblivion to embrace. Without the privilege of distraction, the only choice is between oblivions.

The sorry story that accosts you, huddled in a pity-me pose on Long Street, is just another performance. Another strategy for survival. The insistence of woe reaps more reward than mere hunger. And then woe becomes who you are. You cannot let people see the small moments of joy.

In days spent performing sadness there is little room for the distraction of joy. Even the spending is a grim reminder of the soon lack.

You distance yourself from yourself by talking in third person, the royal we, instead of I, you say you.

Waiting for the lights to change, putting loose coins into the hands of the man holding up the black plastic bag at the traffic lights. At least he's trying. At least you've helped in some small way.

You distance yourself from the problem by helping in some small way.

In the drug houses there is a community of Smalls, Sdudlas, Ntombis, BoyBoys, MaLevens; the people change, the names are always the same. It is impossible to have anything of your own. To stick to oneself is to invite suspicion, or theft. To have nothing openly for long enough is to invite sharing. The meagre spoils of the day, made less in sharing, are a kind of insurance against lack: when you are without, maybe someone here will help. And so everyone shares, in a balance between fears.

There is no linear path to get here. Some people are born here. There is no time in the day to even get to Home Affairs to get a new ID. Some people here were born without being entered on the record. Survival is time-consuming. There is no space for breathing. There is a basic scrabbling for the end of each day that is hard to translate. There are people with genuine kindness who will help in case of emergency, and they do not understand that every day is an emergency, so emergencies are invented that they will understand. Lies containing truth. The choice so often is between honesty and survival.

The old man has two beds in his room: one for him, one for newspapers and cats. He lives on the second floor of what was perhaps an old boarding house, milky with rot, a faded five stars on the gate. To get to his place you pass through a dishevelled drinking place and climb steps above the brothel. It has that particular smell that these places have: husks of cockroach eggs, cracked windowsill paint strata, wood decayed in bodily fluids, electrical shorting, forgotten fires, paper damp with age; a smell no amount of hope can mask. His neighbours talk to him only to mission cigarettes, boiling water from his kettle (the only one), and advice.

He wakes at 4:30am amongst the mewling of kittens and cats waiting to be fed, and he irons his suit, as threadbare as the financial district he will walk to in order to ply his trade. He needs to look respectable, it's for his own sake. He mends his suits in the late morning, after returning from, he calls it, pan-handling, after doing his modest dose of heroin, and then reads the morning papers and returns to work around two in the afternoon. He needs the heroin and the repaired suits in order to endure getting the money for the heroin and the suit repair. The cats are his survival.

Huddling in the lee of the stench at the scrap-for-crack recyclers, I clutch the pipe against the clawing hands, then duck into a garbage bag to try to grab windless space to inhale some small dots of smack. There is no time to breathe. I must get more cans. I must dig in more bins to stave off reality. This is not a party.

Someone buys me a hoodie. It's summer in Durban. They will not give me money for food, or drugs or medication, but they buy me a hoodie. Give it to me with the price tag still on. One thousand two hundred rand. We both know that I will sell the hoodie for drugs, not even for cash so I can get food; the merchants only pay in drugs for clothes. I cannot exchange it: without a receipt I will be arrested for shoplifting. I get two hundred rand's worth of drugs.

Chop Wood. Carry Water.

There is a methadone program here. At seven in the morning they line up to receive their daily dose. Methadone has a twelve-hour half-life; by seven tonight everyone here will be in withdrawal. There is no nightly dose. There is no methadone on weekends. And so the attempt to get clean results in higher tolerance. The dose never reduces; it is not tracked; this is not a reduction program. This is not a pathway away from daily addiction; this is another way to maintain. The nurse and admin person up front have no time for my questions: “we are trying to help you, do you want or not?”

I follow him in a straight line from the traffic lights at the mall, where I have spent the afternoon withdrawing, watching him work the passing cars, trying not to shit my pants. Before, when I had money, I shared resources with him, and now he is helping me. We are passing time here while he waits for his end-of-day piece job, of which he often boasts. There is an older man up the road, just before the old zoo, who pays him to feed the monkeys in the fading light. This old man sits on his balcony and throws down bags of fruit and an envelope containing a hundred rand. We fight the monkeys for the fruit while feeding them; he has pulled in maybe another hundred or two at the lights. I do not ask. In the darkness now, we head down the alley to the side gate that leads into the stolen apartment complex where he pays rent in kind.

The gate is blocked by the sleeping figure of an old man. We have to move him. “Don't wake him.” I interpret this as kindness. An old brown sherry bottle rolls off, tinkling decorously toward the gutter. The old man grunts. “Don't fucking wake him.” Why not? “He's my father and he will want to come inside. Never trust a wetbrain.” Slipping inside the gate, up the filth-littered stairs. Tripping over recalcitrant rats unabated. He has lived here, alongside his family in various forms, his whole life. From here by the tracks, past the factories, the mall, up to the old zoo, these few square kilometres have been his whole life. He almost finished school just over there. He almost got a job in another town once and would have left from the train station over there. He has no electricity, no television, no phone, can hardly read, has no sizeable ambition other than this daily avoiding of withdrawal. The nightly comfort in the distractions and rituals of oblivion is his only allotted purpose.

He always makes sure he has one cap of heroin to wake up to, so that he can get to work calmly, “you cannot hurry the money,” he smiles as he takes small joy in his morning ritual.

At the traffic lights he fights over his place with a woman on crutches, “the bitch can walk.”

And besides, he has been here his whole life. He has pride in this work, knows all the people in the cars. He has an impatient conversation with a man through the car window. The light goes green. A shrug, “says he'll be back later,” shouting now, “I could have asked three other cars, these larnies, always over-explaining, always a story with them, they can't just say no.”

from targetedjaidee

Gratitude.

What are some things you are grateful for today? I am grateful for the following:

- Woke up clean

- My children are safe (for now)

- My marriage & how awesome we are together

I saw my therapist yesterday (my therapist understands that I am a part of this program and doesn't judge me). And I came to the realization I have to love my spouse for how they are & who they are. And in doing so, I have to forgive them. Ya know what I mean. I have struggled with the perceived notion that they were in on this type of thing; truth of the matter is that we weren't ourselves for most of last year. So, just for today I choose happiness, grace, & love.

I have come to realize that my ability to offer forgiveness to those around me is actually a gift. Do I struggle with the emotions of the aftermath? Absolutely. I am human and I have to process these things. Even when I feel extremely low and full of sorrow, I still pray. I ask God to remove those nasty feelings when it's time. I know I have to feel my emotions and process them. Sometimes, those get heavy.

The amount of betrayal I have suffered recently has been absolutely insane. But that is what this program is about. Further isolation (or at least make it seem as though). Part of my program is “parental alienation”. What is that? Well, a false narrative has been fed to my children & I am being treated with a 10-foot pole and being kept from my children. Even with clean screenings & doing my part. My parents are actively trying to keep my children from me to make it seem as though “I abandoned them”. That way if I ever mess up, I get my “rights terminated”. My spouse's ex did just that actually. They cut contact between my spouse and their kids once the ex found out about me. That was sometime in 2020. Well, in 2024 the ex reaches out and tries (keyword tries but fails miserably) to “be kind and supportive” because my spouse's parent had passed. Well interestingly enough, my parents had decided to help my spouse get the right to see their kids and hired an attorney. THAT day that my parents wrote the check – the ex calls my spouse (LMAO). I cannot make this shet up.

The ex was trying to be “civil” with their own agenda. Always hidden motives. They spent over an hour talking on the phone about how they do not like me (mind you, I have never met this individual). How the kids would never call me “Mom” or whatever (I knew I was never getting the chance to meet my step kids anyway). So that didn't hurt. They were adamant about their religious views (I couldn't care less, it's their children together, they can practice whatever they want). Hilariously enough: I had a blog going in 2024 off of Wix; the ex tells my spouse that “one of their kids” found my blog (-_–). Seriously? So, the kids are stalking me and out of ALL the websites on Wix...your kid finds mine? Insert eye roll. That poor kid, dude. Being thrown into the mix without having done anything. But...that's their parent and I pray for them every day.

I firmly believe that gangstalkers need to be exposed & brought to justice. I think my spouse's ex needs help mentally, with the level of obsession they exhibited, literally up until November of last year (since 2020). (LMAO) I start talking about the experiences I have had & I get told that I am “crazy” or whatever narrative has been sold to them to come and attack me. Insert eye roll. It is so pathetic. What these idiots don't seem to understand is: the more they gang up on me, the more obvious it is to me that I am a child of God & that terrifies whatever evil motives they have in doing what they are doing. You know what I mean. They tell on themselves.

But at the same time: Not one of them is willing to sit down with me & tell me, human to human, “Hey. Here is why I do not like you.” Not. One. They clique up like pessies to slander and defame (LMAO). It is hilarious, I am serious. It's like watching roaches run in the same direction, all together. But yeah. That is where my mind is today.

To my fellow TIs: I pray today is good to you. Your small wins are valid & should be celebrated. You matter. I am grateful you're here.

Jaide owwt*

Edinburgh: a city where history, culture and natural beauty meet

from  Platser


Edinburgh, Scotland's majestic capital, is a city that breathes history and charm. Set on the east coast, with its dramatic skyline of castle, medieval alleyways and volcanic hills, the city is a perfect blend of old and new. Here you can wander through a thousand years of history and enjoy world-class culture while also experiencing a modern, lively city with a character all its own.

Historic landmarks and architecture

Edinburgh's most iconic landmark is without doubt Edinburgh Castle, which rises proudly on Castle Rock. The castle, which has served as a royal residence, a military fortress and a prison, is a symbol of Scotland's turbulent history. Here you can see the Scottish Crown Jewels, the Stone of Destiny and the famous cannon Mons Meg. Outside the castle lies the Royal Mile, a lively street that runs down to Holyrood Palace, the royal family's official residence in Scotland. Along the Royal Mile you will find historic buildings, museums, traditional pubs and shops selling everything from tartan blankets to whisky.

Another must-see is Holyrood Abbey, a beautiful ruin next to Holyrood Palace. The abbey was founded in the 12th century and is a reminder of the city's religious and royal past. For anyone interested in architecture, St Giles' Cathedral is worth a visit. The cathedral, with its impressive Gothic style and colourful windows, is one of Scotland's best-known churches.

Culture and events

Edinburgh is also known as one of the world's leading cities of culture. Every August the city turns into a stage for the Edinburgh Festival Fringe, the world's largest arts festival. During the festival the streets fill with performers, comedians, musicians and theatre companies from all over the world. It is a time when the city truly lives up to its reputation as a place of creativity and innovation.

For literature lovers, the Writers' Museum is worth a visit. The museum celebrates three of Scotland's greatest writers: Robert Burns, Walter Scott and Robert Louis Stevenson. Here you can learn more about their lives and works and see original manuscripts and personal belongings.

If you are interested in science and innovation, you should visit the National Museum of Scotland. The museum offers a fascinating journey through time and space, from dinosaurs and ancient treasures to modern technology and design. It is a perfect place for children and adults alike.

Nature and views

Edinburgh is not just a city for historians and culture lovers; it also offers fantastic experiences of nature. Arthur's Seat, an extinct volcano, is the city's highest point and offers a magnificent view over the whole area. A hike to the top is a must for anyone who wants to see the city's beauty from above. If you prefer a quieter walk, Princes Street Gardens is a perfect place to relax. The park lies in the middle of the city and offers a green oasis with beautiful flowers, fountains and views of Edinburgh Castle.

For those who want to explore beyond the city centre, Leith is a pleasant area to visit. This harbour district has undergone a transformation and is now known for its trendy restaurants, bars and art galleries. Here you can also visit the Royal Yacht Britannia, Queen Elizabeth II's former royal yacht, which is now a museum.

Food and drink

Edinburgh has a rich choice of restaurants, from traditional Scottish pubs to modern fine-dining establishments. A must is to try haggis, Scotland's national dish, served with “neeps and tatties” (turnip and potatoes). If you are feeling bold, you can also try whisky: Scotland is, after all, famous for it, and Edinburgh has several distilleries and whisky bars where you can learn more about how it is made and taste different varieties.

If you prefer something sweet, try shortbread or cranachan, a traditional Scottish dessert made with oats, raspberries, cream and whisky.

Practical information

Edinburgh is a compact city, and most places can be reached on foot. For longer distances there is a well-developed public transport system with buses and trams. The city also has an international airport, which makes it easily accessible for travellers from all over the world.

When it comes to accommodation, there is something for every taste and budget. From luxury hotels on Princes Street to cosy bed and breakfasts in the Old Town, or modern hostels for backpackers: Edinburgh has it all.

Hunger as Norm, Politics as Always

Somalia’s hunger is not a breaking story. It is a baseline.

Every few years, the photos and headlines return: emaciated children, dry riverbeds, queues for food distributions. Donors convene pledging conferences, agencies refresh their emergency plans, politicians promise coordination. Then the rains come, or the news cycle moves on, and the crisis is reclassified from “famine” to “acute food insecurity.” But for millions of Somalis, hunger never fully leaves. It stretches and tightens with the seasons and the political calendar, becoming a normal condition to be managed rather than an intolerable failure to be ended.

Calling this “normal” is not a moral judgment on Somalis; it is a description of how the system currently works. Droughts, floods, displacement and high prices interact with fragile institutions, insecure roads, missing infrastructure, and a relief economy that keeps people just above the survival line without changing the underlying structure. Politics, meanwhile, continues as always: competition over territory and rents, short-term bargains, and symbolic announcements about resilience that rarely translate into the boring, patient investments that would make chronic hunger exceptional again.

To understand why hunger behaves like a norm in Somalia, it helps to separate three layers: climate; infrastructure and markets; and politics.

The climate layer is the one most often named: multi-season droughts, erratic rains, rising temperatures, and then destructive floods. For rural and pastoral households, this means more frequent and sharper shocks to pasture, water and livestock. Climate is not new in Somalia, but the speed and volatility of current patterns mean less time to recover between shocks. Even in good years, many households are one failed season away from crisis; in bad years, the line between “poor” and “famine-affected” is thin.

Infrastructure and markets translate these shocks into hunger or resilience. In large parts of Somalia, there are few reliable rural roads, limited cold storage and warehouses, weak irrigation, and patchy electricity. When a drought hits, traders can only move food and water so fast and so far; when prices spike globally, import-dependent markets pass that cost straight to consumers. Water trucking, private boreholes and small-scale irrigation schemes play a vital role, but they are fragile and expensive. There is no dense, climate-ready “infrastructure of adaptation” – no network of wells, storage, small dams, feeder roads, energy and communications robust enough to absorb shocks and keep food and water physically accessible.

In this vacuum, markets do function, but they do so under extreme stress and with high margins. A trader in a remote district is not a villain for charging more when fuel prices and security risks climb. Yet for households spending most of their income on food, these price shifts are the difference between eating twice a day and once, between staying in place or joining an IDP camp. Mobile money and remittances soften the blow for some families, but they are unevenly distributed and cannot substitute for missing public systems.

Over this sits the political layer. “Politics as always” in this context means that hunger is deeply shaped by decisions on security, representation, and resource allocation, but rarely treated as the central test of those decisions. Territorial control and clan bargaining shape where roads are built, where health posts and schools survive, where local government functions; they also influence how quickly humanitarian aid reaches certain areas, which communities are visible in national plans, and whose suffering becomes legible to donors. In some places, negotiations with armed actors determine whether food can move at all. In others, the presence of an international compound guarantees attention to nearby camps, while villages just beyond the security perimeter remain invisible.

Humanitarian actors, for their part, are caught between genuine commitment and structural constraints. Funding is short-term and volatile; appeals are chronically under-financed; programmes are often designed for one- or two-year cycles. “Resilience” has become a standard word in project documents, but much of the architecture still revolves around emergency response. When drought looms, plans are activated, NGOs scale up, and cash or food is distributed. When the immediate emergency fades, budgets shrink, teams are reassigned, and the opportunity to systematically build water, storage, roads and safety nets is lost again. No single agency chooses this pattern, but together they reproduce a system where survival is the outcome, not transformation.

The result is a grim equilibrium. Rural and peri-urban households adapt as best they can, diversifying income, migrating, sending children to cities, relying on relatives abroad. Local markets and private providers fill gaps with water, transport, and basic services where possible. Humanitarian pipelines prevent full-scale famine in many areas, especially when early warning works and funding arrives on time. Politicians manage the optics, balancing domestic expectations and donor relationships. Hunger moves up and down the scale, but it rarely drops out of the picture.

This is what “hunger as norm” looks like: a country where food insecurity is not an exceptional shock but an ordinary risk, managed each year through a mix of coping strategies, emergency aid, and selective infrastructure fixes. Climate change tightens this equilibrium; each cycle becomes harder to manage without deeper structural change. Yet the politics of the state, the incentives of donors, and the business models of many actors remain aligned with continuity rather than disruption.

Breaking this norm does not start with a new slogan, but with a different way of asking questions. Instead of “How many people can we feed this season?”, the core questions become: which investments in water, roads, storage, energy and basic services would permanently reduce the population living one shock away from hunger? How can social protection systems be built to deliver predictable support before people exhaust their assets? What forms of local government and accountability are needed so that drought response is a matter of public policy, not ad hoc negotiation? And how should external actors change their own funding and programming logic to support that shift, rather than reproducing the emergency cycle?

Politics will not disappear from these choices; it will always shape who benefits first, which regions and clans see more investment, and how institutions are built or blocked. But politics can operate inside a fundamentally different structure – one in which the baseline is that most Somalis are food secure most of the time, and hunger has returned to what it should be: a signal of exceptional failure, not an expected part of life.

For now, that is not the system Somalia has. The system Somalia has is one where climate shocks are intensifying, infrastructure for adaptation is thin, markets are stressed, and the relief economy sits on top of a fragile political order. As long as those fundamentals remain unchanged, hunger will continue to behave like a norm, and politics will continue as always.

The question for Somali policymakers, practitioners, and their partners is whether they are prepared to treat this as unacceptable normality and reorganise their work accordingly – or whether the next drought and the next set of photos will once again be absorbed into a familiar, lethal routine.

from targetedjaidee

Healing Out Loud.

That is what I am doing. Ya know, sometimes I get angry & very upset with how things have transpired. But at the same time: I am grateful I can feel these types of emotions & let them go after.

Today's verse is as follows:

Psalm 91:1–2 (NIV): Whoever dwells in the shelter of the Most High will rest in the shadow of the Almighty. I will say of the Lord, “He is my refuge and my fortress, my God, in whom I trust.”

Imagine that! I am firmly protected & blessed by the Most High. He is my dwelling place, and He is the reason I am alive today. I am beyond grateful this morning, guys.

I really hope you have a blessed day!

Jaide owwt*

Shadow

from  Atmósferas


This shadow, which is my shadow, has no eyes but I know it watches; it has no mouth but I know it keeps silent. It has no tail but I know it is a monkey. This is the shadow of the animal.

This shadow is what I think when there are no witnesses and what I hide when I seem clear. This shadow is memory and forgetting, capricious and indolent.

This shadow has a heart of stone, estranged shadow of my days.

It appears and disappears, able to blend in among the shadows, to conspire, to twist, to engulf.

I know it is her, and you too. It is my shadow, it is your shadow, the root, the shadows, our shadows, our one great shadow that cries out for more shadow.

Rugged Interdependence
