from Roscoe's Quick Notes

Rangers vs Mariners.

My Friday game to follow today will be an MLB Spring Training game between the Texas Rangers and the Seattle Mariners. I don't have access to a video feed for this game; instead, I'll be watching the score and stats update in real time on an MLB Gameday screen. The radio call of the game is coming from Seattle's KIRO 710 AM. GO Rangers!

The Covenant That Changed the Architecture of Reality

from Douglas Vandergraph

There are moments in Scripture where the curtain quietly opens and we are allowed to glimpse something far larger than the immediate words on the page. Hebrews 8 is one of those moments. At first glance it may seem like a theological explanation about priests, covenants, and temple systems, but if we slow down and listen carefully, we begin to realize that what the author of Hebrews is describing is nothing less than a restructuring of the spiritual architecture of the universe. Hebrews 8 does not merely clarify religious doctrine; it reveals that the entire system through which humanity relates to God has been transformed from the inside out. The writer is not interested in minor adjustments to religious practice. He is unveiling the replacement of an entire framework that governed human access to the divine for over a thousand years. When this chapter is understood in its full weight, it becomes clear that something breathtaking has happened through Jesus Christ. The old system that defined distance between humanity and God has been replaced with something radically different, something personal, internal, and alive.

The opening lines of Hebrews 8 immediately direct our attention upward. The writer explains that the true High Priest of believers is seated at the right hand of the throne of the Majesty in heaven. This single sentence carries enormous implications. In the Old Testament system, priests never sat down while serving in the temple because their work was never truly finished. Sacrifices had to be offered again and again because sin continued to exist, and the sacrificial system functioned as an ongoing temporary covering rather than a final solution. But the writer of Hebrews deliberately emphasizes that Jesus is seated. The work is complete. The sacrifice is finished. The system of endless repetition has been replaced by a decisive act that resolved the deepest problem humanity has ever faced. This is not merely poetic language. It is a theological declaration that the relationship between God and humanity now rests on something permanent rather than something provisional.

What makes Hebrews 8 particularly fascinating is that it shifts our focus away from the earthly temple entirely. For centuries, the temple in Jerusalem represented the center of Israel’s religious life. It was the place where heaven and earth symbolically met. It was the location where sacrifices were offered and where priests mediated between God and the people. Yet Hebrews tells us that this earthly system was only a shadow of something greater. The temple itself was not the ultimate reality. It was a symbolic preview of a deeper spiritual truth that would later be revealed in full clarity. The writer explains that the priests who served in the earthly temple were operating within a copy and shadow of the heavenly reality. That statement alone reshapes how we understand the entire Old Testament structure. It suggests that the elaborate system of rituals, sacrifices, priesthood, and temple worship was never intended to be the final destination. Instead, it functioned like scaffolding around a building that had not yet been completed.

To understand the magnitude of this idea, we need to appreciate how central the temple system was to ancient Jewish life. Every sacrifice, every priestly duty, every ritual purification was designed to maintain the covenant relationship between God and Israel. The law given through Moses structured every aspect of religious life. It governed moral behavior, community structure, and worship practices. Yet Hebrews boldly states that even this divinely established system contained a built-in limitation. It could reveal sin, it could temporarily address sin, but it could not fully transform the human heart. The law could instruct people about righteousness, but it could not permanently produce righteousness within them. This is not a criticism of the law itself. The law was holy and good. The limitation lay in the human condition. Humanity needed something deeper than external instruction. Humanity needed internal transformation.

The writer of Hebrews addresses this by pointing to a promise found centuries earlier in the writings of the prophet Jeremiah. Long before Jesus was born, Jeremiah had spoken of a future covenant that would differ fundamentally from the one given at Mount Sinai. The promise was radical. Instead of laws written on stone tablets, God would write His law directly on human hearts. Instead of a covenant maintained through external rituals, the relationship between God and His people would become internal and personal. Instead of a system where people needed priests to mediate their access to God, there would come a time when people would know God directly. This prophecy appears in Jeremiah 31, and Hebrews 8 quotes it extensively because it represents the heart of what Christ accomplished.

This promise of a new covenant reveals something profound about how God works throughout history. God often introduces a structure that prepares humanity for something greater that is still to come. The first covenant established through Moses created a moral framework that helped people understand the seriousness of sin and the holiness of God. It created categories of thought that allowed humanity to grasp concepts like sacrifice, atonement, and purification. Without that foundation, the work of Christ might have been impossible to understand. But once the fullness of God’s plan was revealed through Jesus, the earlier system had served its purpose. Hebrews states this very clearly when it says that by calling this covenant new, God has made the first one obsolete.

The word obsolete here does not mean that the old covenant was wrong or useless. It means that it has been fulfilled and surpassed. Just as a blueprint becomes unnecessary once the building has been completed, the old covenant prepared the way for something far greater. The writer is not dismissing the history of Israel. Instead, he is revealing how that history finds its ultimate meaning in Christ. The law pointed forward. The sacrifices pointed forward. The priesthood pointed forward. Every element of the old system functioned like a signpost directing humanity toward the moment when God would solve the problem of sin at its root.

When we consider this from a broader perspective, Hebrews 8 reveals something astonishing about the nature of God’s relationship with humanity. From the very beginning, God’s desire was not merely to give humanity rules to follow. His ultimate goal was always relational. God created humanity in His image, which means humanity was designed for connection with the Creator. Sin disrupted that relationship and introduced separation, guilt, and spiritual confusion into the human experience. The entire story of Scripture can be understood as God’s long and patient work to restore that broken relationship. The old covenant represented an early stage in that restoration process, but it could not accomplish the full transformation that humanity needed.

The new covenant described in Hebrews 8 addresses this problem in a completely different way. Instead of focusing primarily on external behavior, it focuses on internal renewal. God promises to write His laws on the minds and hearts of His people. This means that obedience is no longer driven primarily by external enforcement. It becomes the natural result of an inner transformation. The Spirit of God begins shaping the desires, motivations, and character of believers from the inside out. This represents a fundamental shift in how spiritual life operates. Instead of trying to achieve righteousness through human effort alone, believers are invited into a process where God Himself actively works within them.

This concept carries enormous implications for how we understand spiritual growth. Many people approach faith as if it were primarily about behavior modification. They believe that if they can simply try harder, follow the rules more carefully, and maintain stronger discipline, they will eventually become the people God wants them to be. While discipline and obedience are certainly important, Hebrews 8 reminds us that the foundation of the Christian life is not human effort but divine transformation. God is not merely asking people to conform to an external standard. He is offering to reshape the human heart itself.

One of the most beautiful promises in the new covenant appears in the statement that everyone will know God, from the least to the greatest. This line eliminates the spiritual hierarchy that often emerges within religious systems. Under the old covenant, knowledge of God was often mediated through priests, teachers, and religious leaders who held positions of authority within the community. While these roles served important purposes, they also created a certain distance between ordinary people and the presence of God. The new covenant collapses that distance. Every believer has direct access to God through Christ. Spiritual intimacy with God is no longer reserved for a select group of religious specialists. It becomes the birthright of every person who enters the covenant through faith.

This democratization of spiritual access is one of the most revolutionary aspects of Christianity. It means that the presence of God is not confined to temples, institutions, or specific geographic locations. The presence of God now dwells within the hearts of believers. The temple is no longer a building made of stone. The temple has become a living community of transformed people. This idea appears throughout the New Testament, where believers are described as the body of Christ and the dwelling place of God’s Spirit.

Another promise contained in the new covenant is that God will remember sins no more. This does not mean that God literally loses the ability to recall human wrongdoing. Rather, it means that the barrier created by sin has been permanently removed through the sacrifice of Christ. Under the old covenant, sacrifices had to be repeated continually because they symbolically addressed sin without fully removing its underlying power. The new covenant accomplishes something deeper. Through the work of Jesus, the power of sin to separate humanity from God has been decisively broken. Forgiveness is no longer temporary. It is complete.

When we reflect on this promise, it becomes clear that Hebrews 8 is describing a spiritual revolution. The system of religious striving that defined so much of ancient life has been replaced with a covenant built on grace, transformation, and direct relationship with God. The implications of this change reach into every corner of human experience. It affects how people understand forgiveness, identity, purpose, and spiritual growth. Instead of living under the constant pressure of trying to earn God’s approval, believers are invited to live from the reality that God’s approval has already been secured through Christ.

Yet even with this extraordinary gift, many people continue to live as if they are still under the old system. They carry unnecessary guilt, fear, and spiritual anxiety because they believe their standing with God depends primarily on their own performance. Hebrews 8 gently dismantles that misunderstanding. The new covenant is not sustained by human perfection. It is sustained by the finished work of Jesus, the High Priest who sits at the right hand of God because the work has already been completed.

When we begin to see Hebrews 8 in this light, the chapter becomes more than a theological explanation. It becomes an invitation to step fully into the new reality that God has created through Christ. It reminds believers that their relationship with God is not built on fragile human effort but on the unshakable foundation of divine grace. And when that truth settles deeply into the human heart, it begins to change everything.

The deeper we continue to walk into Hebrews 8, the more we begin to realize that the new covenant is not simply a religious improvement over an older system. It represents a fundamental transformation in the way God interacts with humanity. The old covenant revealed God’s holiness and humanity’s inability to fully live up to that standard. It showed people what righteousness looked like, but it also exposed how far humanity had fallen from the life God originally intended. The sacrifices offered year after year became a constant reminder that something was still unfinished. They revealed the seriousness of sin, but they also pointed toward a future solution that had not yet fully arrived. Hebrews 8 invites the reader to understand that with Jesus Christ, that long-awaited turning point finally came. The temporary shadows that once guided humanity have now given way to the substance itself.

One of the most powerful ideas woven throughout this chapter is the difference between external religion and internal transformation. The old covenant relied heavily on outward obedience. People followed laws that were written on tablets, memorized commands that were recited within their communities, and participated in rituals that reminded them of God’s holiness and their need for purification. These practices were meaningful, and they created a shared identity for the people of Israel, but they could not reach into the deepest layers of the human soul where the true struggle with sin takes place. Anyone who has honestly examined their own heart understands this reality. Human beings often know what is right long before they actually begin living it. Knowledge alone does not always create transformation. The law could instruct, but it could not recreate the human heart.

This is where the promise of the new covenant becomes breathtaking in its scope. God declares that He will write His laws directly into the minds and hearts of His people. Instead of righteousness being imposed from the outside, it begins to grow from within. The Spirit of God becomes the active force shaping a believer’s thoughts, desires, and character over time. This is not instant perfection, but it is real transformation. It means that the Christian life is not simply about trying to mimic goodness through sheer effort. It is about allowing God to reshape the deepest motivations that drive human behavior. When the heart begins to change, obedience becomes less about obligation and more about alignment with the life God created humanity to experience.

The writer of Hebrews wants the reader to grasp that this new covenant changes the very nature of spiritual intimacy. Under the old system, the presence of God was associated with sacred spaces. The temple represented the meeting place between heaven and earth. Access to the most sacred part of the temple, the Holy of Holies, was restricted to the high priest, and even he could only enter once a year. The design of the temple communicated an important truth about God’s holiness, but it also reinforced the reality that humanity stood at a distance from Him. Barriers, curtains, and layers of ritual reminded people that approaching God required mediation and careful preparation. It was a sacred system, but it also emphasized separation.

The new covenant dismantles that distance in a remarkable way. Through Christ, the presence of God is no longer confined to a building or guarded by layers of priestly access. Instead, the presence of God becomes something that dwells within believers themselves. This is one of the most radical ideas ever introduced into human religious thought. The temple is no longer made of stone and gold. The temple becomes living people whose hearts have been renewed by the Spirit of God. This shift means that access to God is no longer defined by geography, ritual timing, or social position. Every believer carries the presence of God with them wherever they go.

This transformation has enormous implications for how faith is lived out in daily life. If the presence of God truly dwells within believers, then every moment becomes an opportunity to walk in awareness of that relationship. Faith is no longer confined to specific religious events or weekly gatherings. It becomes a continuous experience woven into ordinary life. Conversations, work, struggles, decisions, and quiet moments of reflection all become places where the relationship between God and His people unfolds. The sacred is no longer isolated inside temples. The sacred moves through the everyday lives of those who belong to God.

Another remarkable aspect of the new covenant is the promise that everyone within it will know God personally. This statement reaches far beyond intellectual knowledge. The kind of knowing described here refers to relational familiarity, the kind that develops through ongoing interaction and trust. In many religious systems, spiritual understanding is often concentrated among leaders or scholars who possess specialized knowledge. While teaching and leadership remain important within the Christian community, Hebrews emphasizes that every believer has direct access to the knowledge of God through the Spirit. This removes the idea that spiritual intimacy is reserved for an elite group of individuals. The invitation to know God personally extends to everyone, regardless of background, education, or social standing.

When we step back and view the entire biblical narrative, Hebrews 8 begins to feel like the culmination of a long story that started in the earliest pages of Scripture. In the Garden of Eden, humanity walked in direct fellowship with God. There were no temples, no sacrifices, and no barriers between the Creator and His creation. That original relationship was fractured by sin, and much of the biblical story describes God’s ongoing work to restore what was lost. The old covenant functioned as an important stage in that restoration process, but the new covenant brings humanity much closer to the original design. Through Christ, the distance introduced by sin is overcome, and the relationship between God and humanity is reestablished on a deeper foundation.

One of the final promises quoted in Hebrews 8 may be the most comforting of all. God declares that He will forgive the wickedness of His people and remember their sins no more. This statement touches on one of the deepest fears present within the human heart. Many people carry a quiet anxiety that their past mistakes permanently define them. They worry that their failures place them beyond the reach of grace, or that God’s patience will eventually run out. The new covenant speaks directly to that fear. Forgiveness in Christ is not partial, temporary, or fragile. It is decisive and complete. When God says He remembers sins no more, it means that those sins no longer stand as barriers between the believer and the presence of God.

This truth reshapes how believers understand their identity. Instead of defining themselves by past failures, they are invited to define themselves by the grace that God has extended to them. This does not mean that sin becomes irrelevant or that moral responsibility disappears. Rather, it means that transformation is now possible because the burden of guilt has been lifted. People who know they are forgiven are free to pursue growth without the crushing weight of condemnation hanging over them. They can move forward with humility and gratitude, trusting that God’s mercy is stronger than their past.

Hebrews concludes this section by stating that the old covenant is becoming obsolete and aging away. For the original audience of this letter, this statement would have been both profound and unsettling. The temple system had shaped Jewish religious life for generations. Suggesting that it was fading away would have sounded almost unimaginable. Yet the writer understood that God was doing something new through Christ that could not be contained within the old structures. History itself would soon confirm this shift when the temple in Jerusalem was destroyed in the year 70 AD. The center of religious life would no longer revolve around sacrifices offered in a specific location. Instead, the faith of believers would revolve around the finished work of Jesus and the living presence of God within His people.

For modern readers, Hebrews 8 offers an invitation to rethink what it truly means to live in relationship with God. It challenges the tendency to reduce faith to routine or tradition. It reminds believers that Christianity is not merely about preserving religious forms from the past. It is about participating in the living covenant that God established through Jesus Christ. The law written on the heart, the Spirit dwelling within believers, and the complete forgiveness offered through Christ create a relationship with God that is both deeply personal and spiritually transformative.

When this chapter is understood in its full depth, it becomes clear that the new covenant is not simply a theological concept. It is the living foundation of the Christian life. It explains why believers can approach God with confidence. It explains why spiritual transformation is possible even for people who feel broken or unworthy. It explains why the message of Christ continues to spread across cultures and generations. The new covenant reveals that God’s ultimate desire has always been to dwell among His people and to restore the relationship that sin once shattered.

Hebrews 8 stands as a powerful reminder that the story of redemption is not merely about correcting human behavior. It is about renewing the human heart and restoring humanity’s connection with its Creator. Through Jesus Christ, the High Priest seated at the right hand of God, the barrier between heaven and earth has been overcome. The shadows have given way to the reality. The temporary has been replaced by the eternal. And every believer now stands invited into a covenant that will never fade away.

Your friend, Douglas Vandergraph

Watch Douglas Vandergraph’s inspiring faith-based videos on YouTube https://www.youtube.com/@douglasvandergraph

Support the ministry by buying Douglas a coffee https://www.buymeacoffee.com/douglasvandergraph

Donations to help keep this Ministry active daily can be mailed to:

Douglas Vandergraph, PO Box 271154, Fort Collins, Colorado 80527

from Kroeber

#002299 – September 14, 2025

The same people in the music industry who made a fuss about piracy, and even threatened to treat those who listened to music and shared it as criminals, now shamelessly steal music from those who created it in order to generate music with artificial intelligence, which therefore involves no employment relationship and no copyright, other than the intellectual property of the software that was fed with the stolen music.

from Kroeber

#002298 – September 13, 2025

Benn Jordan and his “adversarial music,” poisoning the data that will then be cannibalized by artificial intelligence. A resistance is being born among artists, who are finding ways to sabotage the theft they are subjected to. Jordan calls it Poisonify.

2026-03-05

from Two Sentences

I needed to catch up on sleep. As a wise friend said at dinner today: “I gotta sleep early”; and so I did.

When the World Punishes Truth: The Quiet Courage of Living Honestly in an Age of Illusion

from Douglas Vandergraph

There are moments in history when truth becomes uncomfortable, not because it has changed, but because the surrounding world has drifted so far from it that its presence exposes the illusion everyone has grown accustomed to living within. Human societies have always constructed narratives about themselves, stories that explain who they are, what they value, and where they believe they are going. These narratives can be noble when they align with truth, but they become fragile the moment they depend on denial, distortion, or convenient silence. At that point something subtle begins to happen. The culture gradually rewards those who repeat the approved story and quietly punishes those who question it. In those seasons the person who simply speaks honestly can suddenly appear rebellious, disruptive, or even dangerous. What once would have been called integrity begins to look like treason, not because truth has become radical, but because the environment surrounding it has grown allergic to reality. This strange dynamic is not new to our generation, nor is it unique to modern society. It has existed for as long as human beings have struggled with the tension between comfort and truth, and Scripture reveals that this conflict lies at the very center of the spiritual battle that has unfolded since the beginning of human history.

The biblical story begins with an act of creation rooted entirely in truth. God speaks, and reality forms in perfect alignment with His word. Light separates from darkness, land rises from the waters, and life begins to flourish in every corner of creation. Nothing in that original moment is built on illusion, manipulation, or deception. Everything is grounded in the simple authority of divine truth expressed through the voice of God. Humanity is then created in the image of that truth-speaking Creator, meaning that the human mind, heart, and spirit were designed to function best when aligned with reality as God defines it. This alignment is not merely intellectual but relational, because truth in Scripture is never presented as an abstract concept detached from life. Truth is the atmosphere in which human beings were meant to live, breathe, and flourish. It is the moral oxygen of the soul. When humanity walks in truth, everything from relationships to communities to nations begins to reflect a harmony that mirrors the character of God Himself. But when truth becomes distorted or ignored, the consequences ripple outward in ways that gradually reshape every aspect of life.

The first fracture in humanity’s relationship with truth occurred in a moment that seemed deceptively small. The serpent in the garden did not begin with an open declaration of rebellion against God, nor did he attempt to overpower Adam and Eve with force or intimidation. Instead he introduced something far more subtle and far more powerful: doubt about the reliability of God’s words. His strategy revolved around one simple question that has echoed through human history ever since. “Did God really say?” In that moment the serpent shifted the conversation away from trust in divine truth and toward human interpretation, speculation, and suspicion. Once that door opened, the ground beneath humanity’s understanding of reality began to move. If God’s word could be questioned, then truth itself became negotiable. If truth became negotiable, then the authority to define reality shifted away from the Creator and toward the creature. What followed was not simply a moral mistake but the birth of a pattern that continues to shape the world today: whenever humanity attempts to construct reality apart from God’s truth, confusion multiplies and disorder spreads.

This ancient moment in the garden explains something about the world we now inhabit. Human beings possess an extraordinary capacity for creativity, intelligence, and innovation, yet they also possess an equally powerful ability to construct narratives that protect their comfort even when those narratives drift away from truth. Entire cultures can gradually become invested in illusions that feel stable and reassuring while quietly ignoring the deeper reality beneath them. These illusions often become reinforced by systems of influence, authority, and tradition until they appear nearly unshakeable. In such environments speaking truth can feel socially disruptive because truth threatens the stability of the illusion itself. The person who calmly points out reality may not intend to challenge the system, but the system reacts defensively because it senses the potential collapse that truth could bring. This is why throughout history truth tellers have often been treated not as guides back to reality but as threats to the existing order.

The biblical prophets understood this tension with remarkable clarity. Men like Isaiah, Jeremiah, and Ezekiel were not political agitators in the conventional sense, yet their commitment to speaking God’s truth placed them in direct conflict with the comfortable narratives of their time. These prophets often confronted leaders who preferred reassuring messages over honest warnings. They addressed communities that had grown accustomed to hearing what they wanted to hear rather than what they needed to hear. When prophets spoke about injustice, idolatry, or moral drift, the reaction was rarely celebration. Instead they encountered resistance, ridicule, and sometimes outright persecution. The reason was simple: their words exposed the gap between the reality God saw and the story people preferred to believe about themselves. That exposure felt threatening because acknowledging truth would require change, humility, and repentance. As long as the illusion remained intact, life could continue without disruption.

This pattern reaches a profound climax in the life of Jesus Christ. When Jesus entered the world, He did not arrive merely as a teacher offering interesting ideas about morality or spirituality. He came as the embodiment of divine truth itself. The Gospel of John opens with language that reveals the depth of this reality, describing Christ as the Word through whom all things were made. The same voice that spoke creation into existence now walked among humanity in human form. Truth was no longer simply spoken from heaven; it was standing in the streets, healing the sick, teaching crowds, and confronting the distortions that had taken root within religious and political systems alike. Jesus did not seek conflict for its own sake, but His presence naturally created tension wherever illusion had replaced truth. His teachings challenged the hypocrisy of religious leaders who valued outward appearance over inner transformation. His compassion exposed social systems that ignored the suffering of the marginalized. His authority unsettled political structures that depended on control rather than justice.

One of the most revealing moments in the life of Jesus occurs during His trial before Pontius Pilate. Pilate represents the Roman Empire, the most powerful political force of that era, a system built on authority, order, and the preservation of imperial stability. As Jesus stands before him accused of threatening the established order, Pilate asks a question that has echoed through centuries of human reflection: “What is truth?” The question itself reveals something about the mindset of power. Pilate speaks as someone who has seen countless political narratives, competing claims, and shifting loyalties. In his world truth may appear fluid, shaped by perspective and circumstance. Yet the irony of the moment is striking. The man asking the question is standing face to face with the embodiment of truth and still cannot recognize it. This encounter illustrates how systems built primarily on maintaining power can gradually lose the ability to perceive truth clearly. When stability becomes the highest priority, truth becomes inconvenient if it threatens the existing structure.

The crucifixion that follows might appear, from a purely earthly perspective, to be the victory of the empire over truth. Jesus is condemned, executed, and placed in a tomb under the authority of the state. For those watching from the outside, the machinery of power seems to have succeeded in removing the threat. Yet the resurrection three days later reveals a deeper reality about the nature of truth itself. Truth can be attacked, silenced, and temporarily buried, but it cannot ultimately be destroyed. The resurrection of Christ stands as the most profound demonstration that divine truth does not depend on human approval or institutional protection in order to endure. While empires rise and fall across centuries, the truth embodied in Christ continues to transform hearts, cultures, and civilizations. The Roman Empire that once executed Jesus eventually faded into history, while the teachings of Christ spread across continents and generations.

This reality carries profound implications for believers living in any era. Every generation eventually encounters moments when speaking truth becomes costly. Sometimes the cost is social, involving misunderstanding or criticism. Sometimes the cost is professional, affecting opportunities or advancement. In other cases the cost may involve deeper forms of opposition that test a person’s courage and faith. The question that arises in those moments is not simply whether truth is correct, but whether the individual is willing to stand with it even when doing so carries consequences. Scripture consistently portrays this choice as a defining element of faithful living. The heroes of faith described in the Bible were not individuals who avoided tension but individuals who remained aligned with God’s truth even when it placed them at odds with prevailing cultural narratives.

At the same time the Bible never portrays truth as a weapon intended to crush or humiliate others. The life of Jesus demonstrates that truth and compassion are inseparable. Christ spoke truth boldly, yet He also extended grace to those who were lost, confused, or trapped within deception. His confrontations were directed primarily toward systems of hypocrisy and power that oppressed others, not toward individuals who were sincerely searching for understanding. This balance is essential for believers seeking to live truthfully in a complex world. Truth without compassion can become harsh and self-righteous, while compassion without truth can drift into sentimentality that fails to address reality. The example of Christ calls believers to hold both together, reflecting a character that is firm in conviction while generous in mercy.

Living in truth also requires a deep internal alignment that begins within the heart of the individual. Before believers can speak truth into the world, they must first allow truth to reshape their own lives. This involves examining personal motives, confronting hidden fears, and surrendering areas where self-deception may have taken root. The spiritual journey is not merely about correcting external behavior but about transforming the inner landscape of the soul so that it reflects the character of Christ. When this transformation begins to take place, a quiet confidence emerges. The believer no longer depends on the approval of shifting cultural trends for identity or purpose. Instead identity becomes rooted in the unchanging truth of God’s love and calling.

History repeatedly shows that individuals who live with this kind of grounded integrity often exert influence far beyond what their circumstances might suggest. Many of the most transformative movements in human history began with small groups of people who simply refused to compromise truth. Early Christians gathering quietly in homes and underground communities eventually reshaped the moral imagination of the Roman world. Reformers within the church challenged centuries of corruption by returning to the foundational truths of Scripture. Abolitionists confronted systems of slavery by insisting that human dignity must align with the truth that every person bears the image of God. In each of these moments the individuals involved were often seen as disruptive or unrealistic by the societies around them. Yet their commitment to truth ultimately shifted the course of history.

The same principle continues to operate in everyday life today. Truth does not require vast platforms or global recognition in order to have impact. Often its most powerful influence occurs in ordinary environments where individuals choose integrity in quiet moments that others may never see. A parent who teaches children to value honesty over convenience contributes to shaping a future generation grounded in truth. A professional who refuses to manipulate or deceive despite pressure from competitors demonstrates that success and integrity do not need to be mutually exclusive. A friend who speaks gently but honestly when someone is drifting toward harmful decisions provides a lifeline back to reality. These seemingly small acts of truthfulness create ripples that extend far beyond the immediate moment.

Over time those ripples accumulate into something far more powerful than most people initially realize. Truth does not always advance through dramatic confrontations or sweeping cultural revolutions. More often it spreads quietly through the steady influence of people who refuse to live inside convenient illusions. When a community begins to notice individuals whose lives are marked by calm integrity, thoughtful wisdom, and unwavering honesty, curiosity begins to grow. People start asking questions about the source of that steadiness. They notice that while others are constantly adjusting their beliefs to match shifting trends, these individuals remain anchored to something deeper and more permanent. That curiosity opens doors for conversations that reach beyond surface-level debates and move toward the deeper spiritual foundations that shape how people see the world. In this way truth does not merely confront error; it gently invites others back into alignment with reality as God designed it.

The challenge in our present era is that modern culture often moves at a speed that encourages shallow thinking and emotional reaction rather than patient reflection. Information flows constantly across screens and platforms, creating an environment where narratives can spread widely before they are ever examined carefully. In such a landscape the temptation to simplify complex issues into slogans or tribal loyalties becomes extremely strong. People often feel pressured to adopt positions quickly in order to belong to a particular group or identity. Yet truth rarely reveals itself fully through rushed conclusions or emotional impulses. Scripture repeatedly encourages believers to cultivate wisdom, discernment, and humility when approaching questions about reality. These qualities require time, prayer, study, and a willingness to listen carefully before speaking. When believers practice this kind of thoughtful engagement, they model a different way of interacting with the world, one that values understanding over reaction and clarity over noise.

Another important aspect of living truthfully involves recognizing that deception often disguises itself as something attractive or beneficial. Very few people knowingly embrace falsehood if it appears obviously destructive. Instead deception usually presents itself as helpful, progressive, or compassionate while subtly shifting the foundation beneath those words. The serpent in the garden did not advertise his message as rebellion against God. He framed it as an opportunity for greater knowledge and independence. In the same way modern distortions of truth frequently appeal to human desires for comfort, security, or control. The danger arises when those desires begin to override the deeper call to remain faithful to God’s definition of reality. Discerning this difference requires a spiritual sensitivity that grows through consistent engagement with Scripture and prayer. The more familiar believers become with the character and voice of God, the easier it becomes to recognize when something is subtly drifting away from that truth.

It is also important to remember that truth is not merely a set of doctrines or moral rules that believers defend intellectually. Truth ultimately points toward the person of Jesus Christ, whose life reveals the full character of God’s love, justice, and holiness. When believers speak about truth in a way that loses sight of Christ’s example, the message can become abstract or detached from the relational heart of the gospel. Jesus demonstrated that truth is inseparable from love because both originate from the same divine source. His interactions with people were marked by a remarkable balance of honesty and compassion. He could confront religious hypocrisy with piercing clarity while also welcoming those who felt rejected or unworthy. He could challenge deeply held assumptions while still extending mercy to those who struggled to understand. This combination allowed His truth to penetrate hearts rather than simply winning arguments.

For believers seeking to follow Christ in a world where truth is often contested, this example offers both guidance and encouragement. The goal is not to dominate conversations or prove intellectual superiority but to bear witness to a reality that transforms lives. When truth is lived authentically rather than merely defended rhetorically, its power becomes far more persuasive. People may resist arguments, but they often find it difficult to ignore a life that consistently reflects integrity, peace, and genuine love for others. Over time the credibility of that life opens opportunities to share the deeper spiritual foundation that sustains it. This approach does not eliminate conflict entirely, because truth will always challenge certain assumptions or behaviors. However, it ensures that the motivation behind speaking truth remains aligned with the redemptive purpose of the gospel.

Another dimension of this calling involves courage, a quality that Scripture frequently associates with faith. Courage does not mean the absence of fear but the willingness to act faithfully despite fear. Throughout the Bible God repeatedly encourages His people to be strong and courageous because they are not facing the challenges of the world alone. The presence of God accompanies those who commit themselves to living in alignment with His truth. This assurance does not guarantee that every situation will unfold comfortably or without difficulty. Yet it provides a deeper confidence that ultimate outcomes are held within God’s sovereign purposes. When believers internalize this perspective, they gain the freedom to act with integrity even when immediate circumstances appear uncertain or unfavorable.

History offers countless examples of individuals who embodied this courage in quiet but transformative ways. Many of the early Christians who spread the gospel across the Roman world possessed no political authority or social prestige. They were ordinary people whose lives had been changed by encountering the truth of Christ. Their commitment to living faithfully within their communities gradually attracted attention. Neighbors observed their generosity toward the poor, their refusal to participate in exploitative practices, and their unwavering devotion to caring for one another during times of crisis. These everyday expressions of truth and love created a compelling alternative to the prevailing cultural patterns of the time. Over generations that witness contributed to a shift in moral awareness that reshaped the foundations of Western civilization.

The same potential exists in every era because truth ultimately derives its power not from human institutions but from the character of God Himself. When believers align their lives with that character, they participate in a reality that transcends temporary cultural trends. While specific challenges and debates may change across decades or centuries, the underlying spiritual dynamics remain remarkably consistent. Humanity continues to wrestle with the temptation to redefine truth according to convenience or desire, and God continues to call people back into alignment with the reality He established from the beginning. Each generation must decide whether it will build its identity upon shifting narratives or upon the enduring foundation of divine truth revealed through Christ.

For those who choose the latter path, the journey often includes moments of solitude or misunderstanding. Standing for truth may sometimes feel like walking against the current of prevailing opinion. Yet Scripture repeatedly reminds believers that they are part of a much larger story that stretches across centuries of faithful witnesses. The prophets, apostles, and countless ordinary believers who came before faced similar tensions and challenges. Their perseverance demonstrates that truth has always advanced through individuals who trusted God more than they feared the disapproval of others. This historical continuity provides encouragement for believers today, reminding them that their efforts are not isolated acts but contributions to a legacy of faithfulness that spans generations.

As this legacy continues to unfold, the influence of truthful living extends far beyond immediate circumstances. Children raised in environments where honesty and integrity are valued carry those principles into their own families and communities. Young leaders who observe mentors practicing ethical decision-making often replicate those patterns when they assume positions of responsibility. Communities shaped by trust and transparency create conditions where cooperation and compassion can flourish. These outcomes may develop gradually rather than dramatically, yet their cumulative impact can transform entire cultures over time. The power of truth lies not only in its correctness but in its capacity to cultivate relationships and systems that reflect the character of God.

Ultimately the message of the gospel offers a profound invitation to every person wrestling with the tension between truth and illusion. Jesus did not come merely to expose human error but to restore humanity to the truth-filled life for which it was created. Through His life, death, and resurrection He opened the path for reconciliation between God and humanity. This reconciliation involves more than forgiveness of past mistakes; it involves a transformation that reorients the human heart toward reality as God defines it. As individuals experience this transformation, they begin to see the world through a new lens, one that recognizes both the brokenness of human systems and the hope offered through Christ’s redemptive work.

In a world where illusions often compete loudly for attention, the quiet strength of truth remains one of the most powerful forces available to believers. It does not require manipulation or aggression in order to endure. Instead it operates through clarity, integrity, and faithfulness expressed in everyday decisions. When individuals choose to align their lives with the truth revealed in Christ, they participate in a story that extends far beyond their own lifetime. They become living testimonies to the reality that while empires, ideologies, and cultural trends may rise and fall, the truth of God remains constant. That enduring truth continues to invite humanity back into the harmony that existed when the Creator first spoke reality into being.

Your friend, Douglas Vandergraph

Watch Douglas Vandergraph’s inspiring faith-based videos on YouTube https://www.youtube.com/@douglasvandergraph

Support the ministry by buying Douglas a coffee https://www.buymeacoffee.com/douglasvandergraph

Donations to help keep this Ministry active daily can be mailed to:

Douglas Vandergraph, PO Box 271154, Fort Collins, Colorado 80527

The MAG weekly Fashion and Lifestyle Blog for the modern African girl by Lydia, every Friday at 1700 hrs. Nr 195 6th March, 2026

from  M.A.G. blog, signed by Lydia

M.A.G. blog, signed by Lydia

Lydia's Weekly Lifestyle blog is for today's African girl, so no subject is taboo. My purpose is to share things that may interest today's African girl.

This week's contributors: Lydia, Pépé Pépinière, Titi. This week's subjects: Vibrant Citrus and Bold Yellows, Fall and winter fashion weeks, Accra is becoming expensive, A stroke at 25 years old? La Foundation for the Arts, and Gold Coast Restaurant & Lounge

Vibrant Citrus and Bold Yellows. Nothing says “I’m here to take over” like a splash of citrus. Bright oranges, tangerine, and bold yellows have infiltrated the corporate scene, transforming the typical “business casual” into something far more lively and energizing.

These colours represent creativity, optimism, and confidence — everything you need to get through a busy day at the office.

Why it works: These colours stand out in the best way possible, bringing life and light to your wardrobe. Perfect for those moments when you want to shine in meetings or make a lasting impression.

Style tip: Opt for a tailored yellow dress with a neutral blazer for balance, or throw on a fun orange silk scarf with a white blouse for a little extra pop.

If you’re feeling daring, try a full citrus-coloured power suit!

Bold Blues with African Influence:

Blue has always been a corporate staple, but in 2026, the rich, regal blues of African culture are making a big splash. Think deep indigo, electric blue, and cobalt. These hues carry a sense of power, trust, and professionalism. They also pair perfectly with traditional African prints and fabrics, like Kente or Ankara, to create a sleek, modern twist on heritage.

Why it works: Blue is a classic colour that’s synonymous with professionalism, but when done in shades inspired by African textiles, it brings that heritage connection to the forefront.

Style tip: Pair a cobalt blue blazer with a pencil skirt or sleek trousers.

Or, opt for a patterned Kente blouse with a crisp blue tailored jacket. It's all about balancing boldness with sophistication!

Fall and winter fashion weeks. The season is here again, with New York, London, Milan and Paris leading the pack. Yes, in February we show what is to be worn next autumn/winter, and in September we show what to wear next summer. New York leans more toward pop culture and streetwear, London is the most creative, Milan is about luxury and Paris does the haute couture.

Of course there is much more to it, but that is it in a nutshell. And then there is Tokyo for the avant-garde, Copenhagen for sustainability and minimalism, Berlin for thoroughness and São Paulo for South America. And for pure men's fashion we go back to Italy, to Pitti Uomo in Florence, for high-end tailoring, craftsmanship, and emerging trends in menswear. You can check on YouTube and see most of the défilés.

Unusual this year was King Charles attending and opening London Fashion Week, with the statement that sustainability, craft skills, and young designers should get more attention. Maybe we should ask the Asantehene to be present at the next Accra Fashion Week. London also introduced fashion for the Ramadan break.

Also in the news: Mark Zuckerberg (owner of Facebook) and his wife visited the Prada show in Milan. He can afford anything for a dress, and she could become a trendsetter.

Accra is becoming expensive. Why? The dollar is down, or the cedi is up, so things should cost less? A beef burger for 140 GHC is USD 11.65; for that money you can buy several burgers in the USA. Analysts talk of a dollar economy, too many expats, increasing population, shortage of housing, food shortages, fuel prices, electricity prices. Quite possible, but let's not forget all the illegal money floating around, from drugs, from scams, money stolen by politicians, and from countries like Nigeria. All well and good, but the common and honest women suffer.

A stroke at 25 years old? Yes, it can happen. When blood flow to (part of) the brain is blocked or a blood vessel bursts, it is called a “stroke”. It causes rapid brain cell death, resulting in sudden numbness or weakness, confusion, trouble speaking, vision issues, dizziness, balance issues, facial drooping, and arm weakness. Immediate treatment is critical. Risk factors are high blood pressure (the leading cause), smoking, diabetes, high cholesterol, and an unhealthy diet or lifestyle. Rapid CT or MRI scans, angiograms, and echocardiograms are used to determine the type and location of the stroke.

For a blockage, “clot-busting” drugs (tPA) or mechanical thrombectomy are used to remove the clot; for a bleed, treatment means controlling the bleeding and reducing pressure on the brain.

Recovery: Rehabilitation involves physical, occupational, and speech therapy to regain lost functions and rewire brain connections.

Do you know your average blood pressure? Your cholesterol level? Your diabetes status? For between 100 and 150 GHC you can do a yearly lab test and know your status. Early warning allows for a change of lifestyle or treatment; no warning and then “boom” is mostly far more expensive.

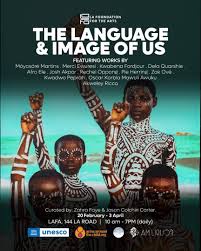

La Foundation for the Arts. 144 La Road, Sun City Apartments, Accra. La Foundation for the Arts is running a show till 3rd April titled “the language and image of us”. 13 artistes show some of their works in various media. It's all around children, though sometimes it is difficult to discern what the link is. But it is UNESCO-sponsored, so maybe they understood it better than I did. Interesting to spend a few minutes there anyway. And a bit further down the road is the Artiste Alliance (Omanya House, La Road, Accra), founded by the very successful Ghanaian painter Professor Glover (his paintings go for 25000 USD and above), who created space for many Ghanaian artistes rather than keeping it all to himself. Ayeeko to both galleries. Artiste Alliance Gallery is definitely worth visiting; entrance is free.

Gold Coast Restaurant & Lounge. 32 Fifth Ave Ext, Cantonments, Accra, recently renovated and the place looks a bit chic. We went in the evening during the week and it was quiet. These days they have live bands on Fridays and Saturdays (sometimes with an entrance fee). A Guinness goes for 40 GHC, a Smirnoff Vodka for 35. And we had a chicken salad at GHC 120 and chicken chef's special at 160. Nothing special about the food, really, not bad either. Service is good.

Lydia...

Do not forget to hit the subscribe button and confirm in your email inbox to get notified about our posts.

I have received requests about leaving comments/replies. For security and privacy reasons my blog is not associated with major media giants like Facebook or Twitter. I am talking with the host about a solution. For the time being, you can mail me at wunimi@proton.me

I accept invitations and payments to write about certain products or events, things, and people, but I may refuse to accept and if my comments are negative then that's what I will publish, despite your payment. This is not a political newsletter. I do not discriminate on any basis whatsoever.

Digital products and services are getting worse – but the trend can be reversed

When did the digital services that once promised convenience quietly become systems that extract more money, more data, and more patience from us while delivering less value?

If the slow decay of platforms happens through thousands of tiny changes, how many of them have you already accepted without noticing?

At what point does “innovation” stop serving users and start serving only the balance sheets of a handful of companies?

If our memories, devices, and communications are locked inside platforms we cannot control or repair, who actually owns the digital life we are building every day?

If your refrigerator begins negotiating subscription plans with your toaster at three in the morning, how many croissants should you approve before the contract renews automatically? You're not paying attention anyway.

Remember...

“You may all go to Hell, and I will go to Texas.”

— Davy Crockett

A blessed 190th Alamo Day to y'all.

#history #quotes

from Unvarnished diary of a lill Japanese mouse

JOURNAL 6 March 2026

The rain murmurs its sad song. The roofs shed all their tears, drop by drop. Under the duvet it smells good, the sweet, gentle scent of girls fresh from the bath. It is late; tomorrow I have to get up early. I am going to turn off the phone. We will take each other's hand and dive together into the black water of the night.

Ingvild

from Holmliafolk

I have had two other dogs, a Saint Bernard and a Jack Russell. But Billy is the first one I have used as a tracking dog.

In theory, any dog can be trained to become a tracking dog. All three of mine too. But in practice it only suited Billy. He loves using his nose and is good at it.

Billy and I are an approved search team for Nitrogruppa. We train a couple of times a week with Nitrogruppa and now and then with Norsk Redningshund Organisasjon. And occasionally we are called out to look for dogs that have gone missing. Then I contact the owner to get a “clean” scent of the dog, such as a blanket or a collar that only this dog has been near, and then Billy tracks that scent and rules out all the others.

This winter we found a puppy that had been on the run for a day in unfamiliar terrain. I think it is very nice to be able to help. And it seems Billy does too.

from Theory of Meaning

Humanity

The point is that you learn to see the humanity in the human being and that you don’t forget to see it. Until the last moment, right down to his last breath, and even in an insane person, and even in a person who is a hardened criminal, humanity remains.

Frankl, V. E. (2024). Embracing Hope: On Freedom, Responsibility & the Meaning of Life (English Edition) Kindle. p 6

hate this place

from  wystswolf

wystswolf

how do you escape yourself? Beer. Lots of feckin’ beer.

I am here. And I left And went there.

I hated it too. So I packed my bag And sent my arse to a third

And it was worse than the first. Same after same, Wherever I went

Was the specter Of me — The shadow

Violet violence In a heart made for Love but doomed

To Be Alone.

The Finger

He began to notice that whenever he raised his finger to emphasize some point in the conversation, that very point went crooked. To be clearer: if he said, raising his finger, “I am a person of integrity,” he went straight to the opposite pole and told some lie, and although it seemed fine to him in the moment, his conscience reproached him: “Aren't you ashamed to lie?” it would say. And although he tried not to pay attention to it, he later flagellated himself in nightmares.

One day someone told him a Chinese tale in which the master cut off his disciple's finger. Although he did not understand it well, that night, recalling the part about the finger, something resonated inside him.

So it was that on Saturday he went to a second-hand bookshop, where for little money he took home an excellent book of Chinese tales. He checked the index and, looking through page after page, found no story about the finger.

Then he went back to his friend, asked him to repeat the story and, on hearing it, pointing his finger at him, said:

“Yes, yes, I already knew that one.”

And the friend cut off his ear.

“Ow, ow, that's not how the story goes,” he reproached his friend.

And the other, closing the pocketknife, said:

“Now you won't forget it.”

On the Value of Slowness in Text Production

from  EpicMind

EpicMind

„Das ist gar kein Schreiben – das ist Tippen.“ Mit dieser spitzen Bemerkung soll Truman Capote einst die Prosa seines Kollegen Jack Kerouac kommentiert haben. Die Bemerkung war polemisch gemeint, doch sie trifft einen Nerv, der bis heute empfindlich ist: Verändert das Werkzeug, mit dem wir schreiben, auch die Art, wie wir denken? Meine Antwort lautet: Ja. Und wir unterschätzen diesen Einfluss systematisch.

Wenn ein Finger eine Taste drückt, passiert neuronal wenig Aufregendes. Jede Taste erzeugt dieselbe Bewegung – nach unten, zurück. Das Gehirn schaltet rasch auf Autopilot. Handschreiben funktioniert anders: Jeder Buchstabe muss aktiv geformt werden, die Hand bewegt sich in wechselnden Richtungen, Auge und Motorik arbeiten eng zusammen. EEG-Messungen bei Zwölfjährigen und Erwachsenen zeigen, dass dabei Hirnregionen aktiv werden, die mit #Lernen, Gedächtnisbildung und sensorischer Integration verbunden sind – und zwar deutlich stärker als beim Tippen [1]. Das Schreiben mit der Hand ist kein obsoleter Umweg. Es ist eine kognitiv dichte Tätigkeit.

Diese Dichte hat Konsequenzen. Wer in einer Vorlesung mitschreibt, kann auf der Tastatur fast wörtlich festhalten, was gesagt wird – und verarbeitet dabei kaum etwas. Wer mit der Hand schreibt, muss auswählen, verdichten, umformulieren. Der Stift zwingt zur Langsamkeit, und Langsamkeit zwingt zum Denken. Studien zeigen, dass handschriftliche Notizen zu einem besseren inhaltlichen Verständnis führen als getippte, obwohl – oder gerade weil – sie kürzer sind [2]. Das Gleiche gilt für Kinder im Schriftspracherwerb: Wer Buchstaben aktiv schreibt, entwickelt die Hirnstrukturen, die später beim Lesen benötigt werden, schneller und stabiler als wer sie nur antippt [3]. Die Hand lehrt das Auge sehen.

Die Hand lehrt das Auge sehen

Nun könnte man einwenden: Das haben wir schon einmal gehört. Als die Schreibmaschine in Büros und Redaktionen einzog, klagte der Philosoph Martin Heidegger, mit ihr gehe der unmittelbare Zusammenhang zwischen Hand und Denken verloren. Die Maschine siegte trotzdem – und die Literatur überlebte. Tatsächlich entstanden durch sie neue Ausdrucksformen, etwa die typografischen Experimente der Avantgarde. Neue Werkzeuge verdrängen ältere nicht einfach; sie verschieben, was mit ihnen möglich ist. Doch dieser Befund ist kein Freispruch für die Tastatur. Er ist eine Warnung: Wer annimmt, das Werkzeug sei neutral, irrt.

Handwriting is, moreover, more than a cognitive instrument. It is individual. Two people can formulate the same sentence, but their handwriting will make it look different and will betray tempo, pressure, and mood. Letters, diaries, and handwritten manuscripts convey not only content but a physical trace of their author. Digital text is typographically uniform. For many purposes that is an advantage. But something is lost: the visibility of the thinker behind the thought.

None of this means condemning the keyboard. It is indispensable for producing, editing, and distributing text. Anyone who writes an article, a document, or an email today rightly thinks with their fingers on the keyboard. But not all writing is the same. The keyboard optimizes for speed and volume. The hand optimizes for depth and processing. Anyone who conflates the two understands neither properly.

Back to Capote. What makes his verdict on Kerouac interesting is not just the punchline; it is the speaker. Capote typed too. He worked for years at the typewriter, later at the computer. And he still wrote. His objection was aimed not at the tool as such but at the attitude behind it: writing without a will to form, without selection, and without slowing down. The "keyboard clatter" he accused Kerouac of was not a technical judgment. It was an aesthetic one, and a cognitive one.

In this sense, handwriting is no sentimental reminiscence of school fountain pens and ink stains. It is a practice of thinking that the digital age has made not obsolete but more urgent. To write is to think. And those who write by hand, the findings suggest, often think more clearly and more deeply, but also more slowly. That slowness, however, is not a deficiency but a method.

Capote was wrong about Kerouac. But the question his mockery raises remains valid: are we writing, or are we merely typing?


Sources

[1] E. O. Askvik, F. R. van der Weel, and A. L. H. van der Meer, "The importance of cursive handwriting over typewriting for learning in the classroom: A high-density EEG study of 12-year-old children and young adults," Frontiers in Psychology, vol. 11, art. no. 1810, 2020, doi: 10.3389/fpsyg.2020.01810.

[2] P. A. Mueller and D. M. Oppenheimer, "The pen is mightier than the keyboard: Advantages of longhand over laptop note taking," Psychological Science, vol. 25, no. 6, pp. 1159–1168, 2014, doi: 10.1177/0956797614524581.

[3] K. H. James and I. Gauthier, "Letter processing automatically recruits a sensory-motor brain network," Neuropsychologia, vol. 44, no. 14, pp. 2937–2949, 2006, doi: 10.1016/j.neuropsychologia.2006.06.028.

Image credit Thorvald Erichsen (1868–1939): Jorde skriver hjem. Vestre Gausdal, Kunstmuseum, Lillehammer, Public Domain.

Disclaimer Parts of this text were revised with DeepL Write (proofreading and copyediting). NotebookLM by Google was used for research in the works and sources mentioned and in my notes.

Topic #Erwachsenenbildung | #Selbstbetrachtungen

from  Kroeber

Kroeber

#002297 – 12 September 2025

“I don't get all choked up about yellow ribbons and American flags. I consider them symbols and I leave them for the symbol-minded”.

George Carlin

Crónicas del oso pardo

Crónicas del oso pardo