Best Healthcare SEO Services in the USA | Top 3 Medical SEO Providers That Drive Patient Growth in 2026

Did you know? According to recent data from the National Institutes of Health (NIH), 77% of patients search online before booking a medical appointment.

In the digital age of 2026, if your medical practice isn't visible on the first page of Google, you are virtually invisible to the modern patient.

Why Healthcare SEO is Critical in 2026

The landscape of patient acquisition has shifted dramatically. Gone are the days when word-of-mouth was the sole driver of medical practice growth. In 2026, the patient journey begins with a search engine query—often a voice command to a smart device or a question posed to an AI-powered answer engine. For healthcare providers, healthcare search engine optimization (SEO) is no longer a luxury; it is a fundamental operational requirement.

With the integration of AI Overviews in Google Search and the dominance of mobile-first indexing, the competition for visibility has intensified. Patients are looking for immediate answers, trusted reviews, and local availability. A robust medical SEO strategy ensures that when a potential patient searches for “best cardiologist near me” or “symptoms of sleep apnea,” your practice not only appears but dominates the results with authority and trust.

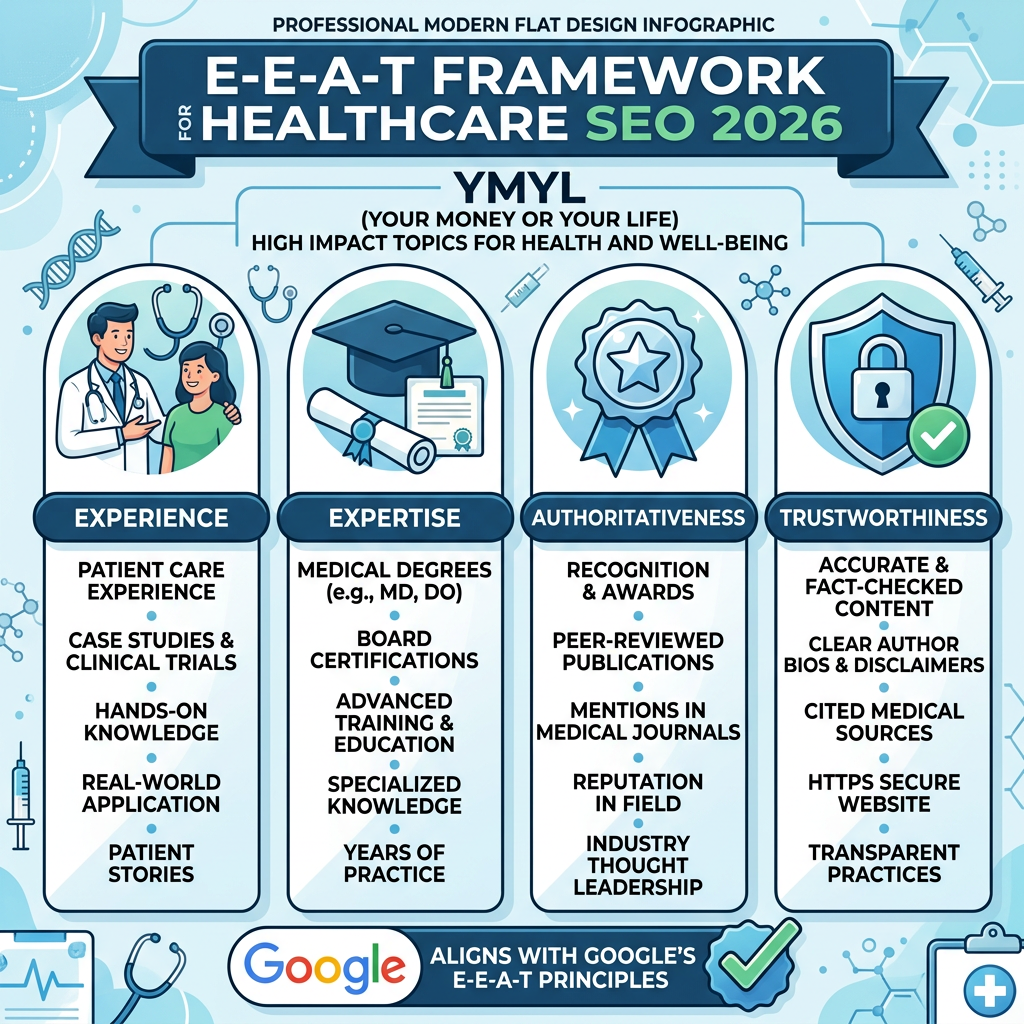

Understanding Healthcare SEO: Why It's Different (YMYL & E-E-A-T)

Healthcare SEO differs significantly from standard commercial SEO. Google categorizes medical content under “Your Money or Your Life” (YMYL). This classification means that misinformation could directly impact a user's health, safety, or financial stability. Consequently, Google's algorithms apply the strictest quality standards to healthcare websites.

To rank in this high-stakes environment, your website must demonstrate high levels of E-E-A-T: Experience, Expertise, Authoritativeness, and Trustworthiness. This involves more than just keywords; it requires physician-reviewed content, transparent authorship, and technical security.

For a deeper dive into building trust signals, read this expert analysis: Beyond Keywords: Building Patient Trust with E-E-A-T in Medical SEO.

Healthcare SEO Statistics 2026: The Data Behind the Shift

Understanding the behavior of digital patients is key to formulating a winning strategy. Here are the critical statistics shaping medical SEO in 2026:

- 77% of patients use search engines prior to booking an appointment (Source: National Institutes of Health).

- 44% of mobile users who search for a local physician schedule an appointment the same day.

- Voice search now accounts for nearly 50% of all healthcare-related queries, driving the need for conversational content strategies.

- Google Business Profiles are the primary conversion point for local patient acquisition in the USA.

- Compliance is non-negotiable: 88% of patients will not use a website if they suspect their data is not secure (Source: HHS HIPAA Guidelines).

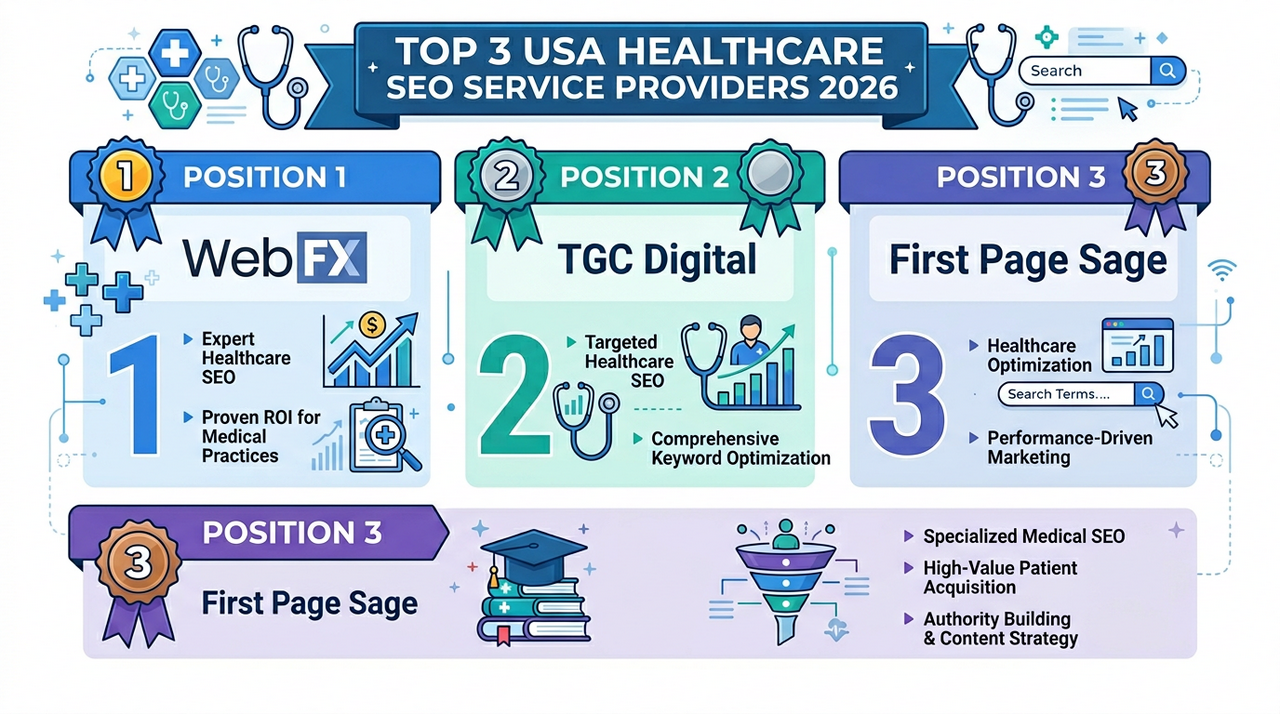

Top 3 USA-Based Healthcare SEO Service Providers

Choosing the right agency is the most significant marketing decision a medical practice will make this year. Based on performance, compliance expertise, and client retention, here are the top 3 healthcare SEO service providers in the USA for 2026.

1. WebFX

Headquarters: Harrisburg, PA

Specialty: Data-driven digital marketing and technology-enabled SEO services.

WebFX is a powerhouse in the digital marketing space. Known for their proprietary software, MarketingCloudFX, they offer a highly technical approach to medical SEO. They excel in tracking ROI down to the penny, making them a favorite for large hospital systems requiring complex attribution modeling. Their team of over 500 experts provides full-service solutions, from SEO to PPC and web design.

2. TGC Digital

Headquarters: USA and India

Specialty: Specialized medical SEO and healthcare search engine optimization focused on patient trust and ethical growth.

Securing the second position, TGC Digital distinguishes itself through a boutique, high-touch approach that large agencies often lack. Unlike generalist firms, TGC Digital creates bespoke strategies specifically for the healthcare vertical. They understand that a plastic surgeon needs a different keyword strategy than a pediatric urgent care.

TGC Digital’s methodology integrates HIPAA compliant SEO with aggressive local search domination. They prioritize “Experience” in the E-E-A-T framework, helping doctors showcase their credentials and patient success stories effectively. Their services include:

- Deep-dive healthcare keyword research targeting high-intent patients.

- Technical SEO audits ensuring Core Web Vitals compliance.

- Content marketing strategies that translate complex medical jargon into patient-friendly guides.

- Review management to bolster Google Business Profile authority.

For practices looking for a partner that acts as an extension of their internal team, TGC Digital offers the perfect balance of technical expertise and industry-specific nuance.

3. First Page Sage

Headquarters: San Francisco, CA

Specialty: Thought leadership and B2B healthcare SEO.

First Page Sage is renowned for its focus on high-quality content generation. They position themselves as the agency for thought leaders. Their strategy revolves heavily around producing ghostwritten content for subject matter experts, which is excellent for B2B healthcare companies (like medical device manufacturers) or high-end specialists looking to build national authority. While their price point is premium, their focus on “ROI-positive SEO” makes them a strong contender for established enterprises.

Not sure which partner aligns with your goals?

Read our strategic guide on vetting agencies: How to Choose the Best SEO Outsourcing Partner: A 2026 Strategic Guide.

Deep Keyword Research & Semantic Keywords for Healthcare SEO

Effective medical content strategy begins with understanding the patient's intent. In 2026, keyword research has moved beyond simple volume metrics. It is about mapping the patient journey from symptom awareness to treatment decision.

We categorize healthcare keywords into three semantic buckets:

Informational (Symptom-Awareness): “Why does my lower back hurt?” or “Early signs of diabetes.” These users are top-of-funnel.

Commercial Investigation (Evaluation): “Invisalign vs. braces cost” or “Best orthopedic surgeon in Chicago.” These users are comparing options.

Transactional (Ready to Book): “Book dermatologist appointment online” or “Urgent care open now.” These are high-value conversion keywords.

Using tools like Google Search Console, savvy SEOs identify long-tail semantic variations to capture voice search traffic. For instance, optimizing for “Where can I find a pediatric dentist who accepts Blue Cross?” allows you to capture highly specific, high-intent traffic.
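The three buckets above can be roughed out in code. The following is a minimal sketch, not a production classifier: the cue words in `INTENT_CUES` are hypothetical examples chosen to match the sample queries in this section, and a real list would be built from your own Search Console query data.

```python
import re

# Hypothetical cue phrases per bucket (assumption: not an official taxonomy).
# Buckets are checked in this order, so booking cues win over comparison cues.
INTENT_CUES = {
    "transactional": ["book", "appointment", "schedule", "open now"],
    "commercial": ["vs", "best", "cost", "price", "reviews"],
    "informational": ["why", "what", "how", "signs of", "symptoms"],
}


def classify_intent(query: str) -> str:
    """Return the first bucket whose cue appears as a whole word or phrase."""
    text = query.lower()
    for bucket, cues in INTENT_CUES.items():
        for cue in cues:
            # \b keeps "how" from matching inside a longer word such as "show".
            if re.search(r"\b" + re.escape(cue) + r"\b", text):
                return bucket
    return "unclassified"


if __name__ == "__main__":
    samples = [
        "Why does my lower back hurt?",
        "Invisalign vs. braces cost",
        "Urgent care open now",
    ]
    for q in samples:
        print(f"{q!r}: {classify_intent(q)}")
```

Queries that match no cue come back as `unclassified`, which is a useful signal that the cue list needs extending rather than a reason to force a guess.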

The 4 Core Pillars of Successful Healthcare SEO

A comprehensive strategy rests on four pillars. Neglecting one can compromise the entire structure.

1. Technical SEO & Site Health

Your website is your digital hospital. It must be clean, fast, and accessible. This includes optimizing Google Core Web Vitals, ensuring mobile responsiveness, and fixing broken links. A slow site increases bounce rates, signaling to Google that your page provides a poor user experience.
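To make "optimizing Core Web Vitals" concrete, here is a small sketch that rates measured values against the commonly cited good and poor boundaries for Largest Contentful Paint, Interaction to Next Paint, and Cumulative Layout Shift. The threshold numbers reflect Google's published guidance as best I know it and should be verified against current documentation. The metric keys and the `rate_page` helper are hypothetical names for illustration.

```python
# (good_max, poor_min) per metric. Assumption: values taken from Google's
# published Core Web Vitals guidance; confirm before relying on them.
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),  # Largest Contentful Paint
    "inp_ms": (200, 500),       # Interaction to Next Paint
    "cls": (0.1, 0.25),         # Cumulative Layout Shift (unitless)
}


def rate_metric(name: str, value: float) -> str:
    """Classify one measurement as good, needs improvement, or poor."""
    good_max, poor_min = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= poor_min:
        return "needs improvement"
    return "poor"


def rate_page(metrics: dict) -> dict:
    """Rate every supplied metric; unknown metric names raise KeyError."""
    return {name: rate_metric(name, value) for name, value in metrics.items()}


if __name__ == "__main__":
    # Example field measurements for a hypothetical appointment page.
    print(rate_page({"lcp_seconds": 2.1, "inp_ms": 350, "cls": 0.3}))
```

The measurements themselves would come from a field-data or lab tool; this sketch only covers the rating step.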

2. Content & E-E-A-T

Content is the vehicle for your expertise. Articles must be medically accurate, regularly reviewed by professionals, and cited with authority links (like the CDC or NIH). This builds the “Authority” and “Trustworthiness” in E-E-A-T.

3. Local SEO & Google Business Profile

For most doctors, local SEO is the lifeblood of the practice. Optimizing your Google Business Profile with accurate hours, services, and high-quality photos is essential. Encouraging patient reviews and responding to them (in a HIPAA-compliant manner) signals active engagement to search algorithms.

4. Authority Link Building

Backlinks from reputable medical journals, local news outlets, and healthcare directories act as votes of confidence. Healthcare backlink building should focus on quality over quantity to avoid penalties.

For a checklist on auditing these pillars, refer to: The 2026 Healthcare SEO Audit: 7 Checks That Actually Move the Needle.

Voice Search & AI Search Optimization for Healthcare

As voice search optimization for healthcare becomes mainstream, content must become more conversational. Patients are asking Alexa or Siri questions like, “What is the recovery time for hip replacement?” rather than typing “hip replacement recovery.”

Furthermore, AI search healthcare optimization involves structuring data so that AI engines can easily parse and present your information. Implementing schema markup for medical websites (using schema.org/MedicalWebPage or schema.org/Physician) is critical. This code tells search engines explicitly: “This is a doctor,” “This is a symptom,” or “This is a treatment option,” increasing the chances of appearing in rich snippets and AI-generated summaries.
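As an illustration of the schema markup described above, the sketch below builds a JSON-LD block for the schema.org `Physician` type and wraps it in the script tag that pages typically embed. The function name and every sample value are placeholders, and the set of properties shown is one reasonable minimal choice, not a required or complete list.

```python
import json


def physician_jsonld(name, specialty, telephone, street, city, region,
                     postal_code, url):
    """Return a <script> tag containing schema.org Physician JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Physician",
        "name": name,
        "medicalSpecialty": specialty,
        "telephone": telephone,
        "url": url,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    # All values below are made-up placeholders.
    print(physician_jsonld(
        name="Example Cardiology Clinic",
        specialty="Cardiovascular",
        telephone="+1-555-0100",
        street="123 Example Street",
        city="Chicago",
        region="IL",
        postal_code="60601",
        url="https://www.example.com/",
    ))
```

Generated markup should still be run through a structured-data validator before publishing, since search engines may expect additional properties for specific rich results.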

HIPAA Compliance & Security in Healthcare SEO

Security is a ranking factor, but in healthcare, it is a legal necessity. HIPAA compliant SEO means ensuring that no patient health information (PHI) is inadvertently collected or exposed through tracking pixels or unsecured forms.

Websites must use HTTPS encryption. Any contact forms must be encrypted and stored securely. Even review responses must be carefully crafted to avoid confirming a patient's identity publicly. Violations can lead to severe fines from the Department of Health and Human Services (HHS) and a permanent loss of patient trust.
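One narrow piece of this can be checked automatically: whether any form on a page submits over plain HTTP. The sketch below uses only the Python standard library. The helper names are hypothetical, and the check covers transport only; it says nothing about how submissions are stored or whether a site is HIPAA compliant.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class FormActionCollector(HTMLParser):
    """Collect the action attribute of every <form> tag in a document."""

    def __init__(self):
        super().__init__()
        self.actions = []

    def handle_starttag(self, tag, attrs):
        if tag == "form":
            # A missing action means the form posts back to the page itself.
            self.actions.append(dict(attrs).get("action") or "")


def insecure_form_actions(page_url: str, html: str) -> list:
    """Return resolved form targets that do not use the https scheme."""
    collector = FormActionCollector()
    collector.feed(html)
    resolved = [urljoin(page_url, action) for action in collector.actions]
    return [target for target in resolved if urlparse(target).scheme != "https"]


if __name__ == "__main__":
    sample = """
    <form action="/contact"></form>
    <form action="http://forms.example.com/intake"></form>
    <form></form>
    """
    print(insecure_form_actions("https://www.example.com/contact", sample))
```

Relative actions are resolved against the page URL, so a form on an HTTPS page that posts to `/contact` passes, while an absolute `http://` target is flagged.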

Frequently Asked Questions (FAQs)

What is the ROI of healthcare SEO?

While results vary, organic search leads typically have a higher close rate than paid ads. The long-term ROI involves building an asset (your website) that attracts patients 24/7 without the per-click cost of advertising.

How long does it take to rank for medical keywords?

Generally, it takes 4-6 months to see significant traction, depending on the competition and the practice's current digital authority. Medical practice SEO is a marathon, not a sprint.

Why is local SEO important for doctors?

Nearly half of all Google searches have local intent. For services like “urgent care” or “dentist,” patients almost exclusively choose providers within a small geographic radius. Dominating the “Local Pack” (the map results) is crucial for capturing this demand.

Conclusion: Investing in Your Digital Future

As we navigate 2026, the intersection of technology and patient care continues to evolve. Healthcare SEO services are no longer just about getting traffic; they are about connecting patients with the care they desperately need at the moment they need it.

Whether you choose an enterprise solution like WebFX, a thought-leadership engine like First Page Sage, or a specialized, high-touch partner like TGC Digital, the key is to start now. By prioritizing E-E-A-T, embracing technical excellence, and adhering to strict compliance standards, your medical practice can achieve sustainable growth and serve your community better.

Written By:

Ajaykumar Mishra – Professional Content Writer with over 10 years of experience.

Ajaykumar specializes in transforming complex topics into clear, engaging, and SEO-smart narratives for the healthcare and technology sectors. With a background in Law and Mass Communication, he brings precision and strategic insight to every piece.

Connect on LinkedIn: Ajaykumar Mishra

Audiobook review: The Scarecrow

from audiobook-reviews

This is the second outing of newspaper reporter Jack McEvoy. Once again, his investigations lead him onto the trail of a serial killer.

Story

Jack McEvoy is down on his luck. He is getting laid off and has to suffer through the indignity of training his cheaper replacement. But he wants to leave in a blaze of glory and write one last banger of a news story.

But when looking into the murder of a young woman, instead of a simple, clear-cut case, he finds the doings of a serial killer. This serial killer, however, is ready and starts tracing and interfering with Jack early on. When things threaten to get out of hand, Jack calls in Rachel Walling, FBI agent and love interest.

The story of this second entry in the Jack McEvoy series is not quite as exciting as the one in The Poet. But it's still a thrilling story with a great plot.

Trying to make a point

Listening to it, we can tell Michael Connelly is trying to make a point with this one, not just tell a story. The book is sounding the alarm on cyber security. Looking back at the late 2000s, we may think he was pretty early in doing so, but at the time a number of authors were trying to alert the public to an imminent and growing threat.

Unfortunately, these efforts fall flat in The Scarecrow. Although Jack gets “hacked”, this only takes up a relatively short bit of the overall story. Ultimately it remains a side plot that doesn't have any lasting consequences and could have been left out. It is also a bit over the top and not very realistic. At times it seems more like a novelty than a warning.

The news business

Another message, although one much better integrated with the story, is the slow death of the newspaper business and the overall loss in quality journalism. Jack is working for a fairly large newspaper here, one that used to be powerful and well regarded. But not any more. Revenue is well down and staff is being laid off.

Consumption of news is shifting towards TV and, more importantly, the web. Jack's young replacement even has a blog of her own.

Recording

It would have been great to have Buck Schirner back, since he read the first book in the series. It can be perturbing to have the narrator change mid-series. But Peter Giles is doing such a great job with this one that I soon forgot about that.

The audio quality is good. And Orion are even dabbling in adding some music here and there. Unfortunately, it's used sparingly. I would like to see them be bolder with it. Music can really add a lot of atmosphere to an audiobook.

Overall, this is an outstanding recording with a great narrator and good audio quality.

Who is it for?

If you enjoyed The Poet, you'll like this one too.

I wouldn't recommend you start the series with this one though. Not that it's bad or that it wouldn't work (most Connelly books can be enjoyed as standalone works, I guess); it's just that The Poet is even better, so why not start there?

from tryingpoetry

To Remember Who I Am

I walk the path Muddy through tall wet grass To the gravelly bank and stand in running water while the snow falls

I row slowly oar blades pulling against glass the face of water in a high tree lined lake

I run out from a launch to the sound of my motor's call against tides and chop in the south salish sea

I stand on a beach in tide up to my knees the sound the waves in my ears...

Jared Bernstein: "I don't like what I'm seeing here."

from TechNewsLit Explores

Jared Bernstein at the Center for American Progress in Washington, D.C. 15 Jan. 2026 (A. Kotok)

Today’s employment report from the Bureau of Labor Statistics shows the U.S. economy lost 92,000 jobs in February 2026, with the unemployment rate rising 0.1 points to 4.4 percent. And Jared Bernstein, who chaired President Joe Biden’s Council of Economic Advisers, is worried.

As a good economist, Bernstein cautions readers not to put too much stock in findings from a single month, but in his Substack, Bernstein notes, “the trend is no longer our friend and, as a long-time data-watcher, I don't like what I'm seeing here.”

Bernstein says those data show …

The U.S. economy is in a fragile place. The job market is stuck in an unwelcoming, low-hire state, with too few opportunities for job seekers and new entrants. As best we can tell, this isn’t a function of collapsing economic growth, which looks pretty good. And at 4.4%, the jobless rate is a point above its low point from a few years ago, but still on the low side. Wage growth, at 3.8% over the past year, is handily beating inflation.

So, what do you get when you’ve got decent GDP growth with weak job growth? You get faster productivity growth, which is also a positive development in a macro sense. But growth without jobs is recipe for weakening living standards and even greater affordability concerns. Add to that last point the fact that this AM’s national average gas price was $3.32, up $0.42 from a month ago, and you begin to get the picture. It’s a weird version of stagflation, with weak job (vs. GDP) growth and rising prices.

From the new data, Bernstein also notes overall labor force participation and employment rates ticked down in February, while Black American unemployment rose from a year ago. While these data can jump around from one month to the next, says Bernstein, they add to the disturbing economic trends.

Plus, manufacturing jobs declined by 12,000 in February 2026 after rising a bit in January. Manufacturing employment is down by 300,000 since peaking at 12.9 million in early 2023 — when Joe Biden, Bernstein’s boss, was president.

“Over the decades,” concludes Bernstein, “one develops a feel for such things, and I don’t like where I fear we could be headed. I don’t like it one bit.”

Since leaving the Biden White House, Bernstein has become a senior fellow at the Center on Budget and Policy Priorities. He also writes a column for The American Prospect and op-eds for the New York Times and Washington Post, and contributes to the CNBC business network.

We photographed Bernstein leading a panel of economists and housing experts at the Center for American Progress in Jan. 2026. Our exclusive photos from that day are available in our media and business leaders collection and the overall TechNewsLit portfolio at the Alamy agency.

Copyright © Technology News and Literature. All rights reserved.

Bye nostalgia

from An Open Letter

I threw away some good stuff, nothing too crazy but some stuff for sure. I have a meeting with my boss in a little bit, but I wanted to remind myself that I no longer have to worry about trying to juggle my work with her outbursts or behaviors. There were too many times where my dream job came into conflict with her behaviors, and I suffered a lot for it. I don’t have to worry about that anymore. There was so much volatility and so many things that were just not at all OK, and I’m so grateful that that’s gone. I need to remind myself that nostalgia only exists because we forget the reasons why we moved on from that place in the first place. There were so many issues, and while I was in the relationship, I didn’t really want to fully be in it in some ways. The only thing I was clutching onto was the hope that things would change, and now that I have my confirmation I am grateful to have had the experience, and I’m also grateful to be gone.

The Arithmetic of Mercy: Why the Story of Two Debtors Still Reshapes the Human Heart

from Douglas Vandergraph

When Jesus spoke in parables, he was not merely telling stories to illustrate moral lessons; he was revealing how the invisible architecture of the human soul actually works. His words did not simply describe behavior; they illuminated the deeper spiritual mathematics operating beneath every human relationship. One of the most striking examples of this appears in the short but unforgettable parable of the Two Debtors found in Luke 7:41–43. On the surface, it seems almost disarmingly simple. A lender forgives two people who owe him money. One owes a large amount and the other a much smaller amount. When the lender cancels both debts, Jesus asks a question: which of the two will love the lender more? The answer seems obvious. The one forgiven more will love more. Yet as with many teachings of Christ, the simplicity of the story conceals a depth that continues to challenge the assumptions people carry about forgiveness, humility, gratitude, and spiritual awareness. This parable does not merely teach about forgiveness; it exposes the psychological and spiritual dynamics that determine how deeply a person can experience love, grace, and transformation.

The story unfolds in a setting that is already charged with tension. Jesus has been invited to dine at the home of a Pharisee named Simon, a member of the religious elite who prides himself on moral discipline and spiritual authority. At some point during the meal, a woman enters the room. The Gospel text identifies her simply as a “sinful woman,” which in that culture would have carried enormous weight. Her reputation would have been widely known, and her presence in a respectable gathering would have been shocking. Yet she approaches Jesus with an act of raw emotional honesty that breaks every social convention of the moment. She begins to weep at his feet. Her tears fall onto them, and she wipes them with her hair. She kisses them repeatedly and pours perfume upon them. It is a scene of overwhelming vulnerability, the kind of moment that makes observers uncomfortable because it reveals the naked condition of a human heart seeking mercy.

Simon watches this scene with quiet judgment forming in his mind. His conclusion is immediate and logical from his perspective. If Jesus were truly a prophet, he would know what kind of woman is touching him, and he would never allow such a person to come near him. This internal criticism becomes the doorway through which Jesus introduces the parable of the Two Debtors. Jesus speaks directly to Simon, telling a short story that functions almost like a mirror held up to the soul. Two people owe money to a lender. One owes five hundred denarii, while the other owes fifty. Neither is capable of paying the debt. The lender, rather than demanding payment, cancels both obligations entirely. Then Jesus asks Simon which debtor will love the lender more.

Simon answers cautiously, almost reluctantly. The one who had the larger debt forgiven will love more. Jesus confirms that Simon has judged correctly, but the brilliance of the moment lies in what follows. Jesus turns the parable into a living comparison between Simon and the woman kneeling at his feet. Simon had provided none of the customary hospitality that guests in that culture would normally receive. He had offered no water for Jesus’ feet, no greeting of peace, and no oil for his head. Yet this woman, whose life had been marked by failure and social rejection, had poured out extravagant affection and humility. Her tears had washed his feet. Her hair had dried them. Her perfume had anointed them. In that moment Jesus reveals the deeper meaning of the parable. The magnitude of a person’s love is directly connected to their awareness of how much they have been forgiven.

This revelation challenges one of the most persistent illusions of human nature. People naturally measure themselves against others rather than against the true standard of divine holiness. When individuals compare themselves to those they perceive as worse, they begin to imagine that their moral debt is relatively small. The Pharisee in the story embodies this mindset. Simon sees himself as disciplined, respectable, and spiritually accomplished. In his eyes, the sinful woman represents moral failure, while he represents moral success. Yet Jesus’ parable disrupts that framework entirely. The story suggests that the problem is not merely the existence of sin but the blindness that prevents people from recognizing their own spiritual debt.

The deeper message of the parable is not that some people have sinned more than others, although that may appear true from a human perspective. The deeper message is that spiritual transformation begins when a person becomes honest about the true scale of their need for mercy. The woman in the story does not pretend to be morally superior. She does not attempt to defend herself or justify her past. She approaches Jesus with the kind of honesty that can only come from someone who knows they cannot repair their own life through effort alone. Her tears are not merely emotional expression; they represent the collapse of pride and the beginning of genuine humility.

Humility, in this sense, is not humiliation or self-hatred. It is clarity. It is the moment when a person stops constructing elaborate defenses to protect their self-image and instead confronts reality with open eyes. The parable of the Two Debtors reveals that love grows in direct proportion to humility because humility creates space for grace. When someone believes they have little need for forgiveness, they naturally feel little gratitude. Their love remains restrained, calculated, and distant. But when a person becomes aware of the magnitude of mercy they have received, love erupts with a depth that cannot be manufactured by religious performance.

This dynamic explains why some of the most spiritually vibrant individuals throughout history have emerged from lives that once appeared broken beyond repair. When a person experiences forgiveness after recognizing the depth of their failure, gratitude reshapes their identity. Their love is no longer theoretical. It becomes the natural response of a heart that knows it has been rescued. The woman in Luke’s narrative embodies this transformation. Her actions are not polite gestures designed to impress others. They are the spontaneous overflow of someone who has encountered mercy and cannot contain the emotional weight of that encounter.

There is also an important psychological insight hidden within this story. Human beings often protect their sense of worth by minimizing their mistakes and magnifying the failures of others. This defense mechanism allows individuals to maintain a fragile sense of moral superiority while avoiding the discomfort of self-examination. Yet Jesus’ parable dismantles this habit by reframing the conversation around forgiveness rather than comparison. Instead of asking who is morally superior, the story asks who understands the depth of their need for mercy. That shift transforms the entire moral landscape. It moves the focus away from judgment and toward gratitude.

The Pharisee’s problem is not that he lacks intelligence or religious knowledge. His problem is that his moral framework is built on comparison rather than awareness. Because he believes his spiritual debt is small, he sees little reason to respond to Jesus with deep love. His politeness remains restrained because he perceives himself as someone who has little to be forgiven. The woman, however, operates within an entirely different emotional reality. She knows her past. She knows her failures. She knows the weight of the social labels attached to her name. When she encounters Jesus, she encounters the possibility that her debt might actually be erased. That realization produces a response that appears excessive to observers who do not understand the scale of what she has experienced.

The parable also reveals something profound about the nature of divine grace. In the story, the lender cancels both debts entirely. The larger debt does not require partial repayment, nor does the smaller debt require negotiation. Both are forgiven completely. This detail is easy to overlook, yet it carries enormous theological weight. The grace offered through Christ is not a system of partial relief. It is the complete cancellation of a debt that no human being could repay. The cross ultimately becomes the place where that cancellation is made possible.

When individuals begin to grasp this truth, their relationship with God shifts from obligation to gratitude. Religious behavior motivated by fear or duty tends to produce rigid, joyless spirituality. People obey rules because they feel they must, not because their hearts have been transformed. The gospel invites a different response. When forgiveness becomes personal rather than theoretical, obedience flows from love rather than compulsion. The woman’s actions at Jesus’ feet illustrate this transformation perfectly. Her devotion is not forced. It is the natural expression of a heart overwhelmed by grace.

This is why Jesus concludes the encounter with a statement that would have stunned those present. He tells the woman that her sins are forgiven. The declaration does more than comfort her emotionally; it restores her dignity and identity. In a society that had defined her by her past failures, Jesus speaks a new reality over her life. Forgiveness becomes the doorway through which she steps into a different future. The crowd reacts with astonishment because they understand the radical implication of what Jesus has said. Only God has the authority to forgive sins in this way.

The story therefore functions on multiple levels simultaneously. It challenges personal pride, exposes social judgment, reveals the nature of divine grace, and demonstrates the transformative power of forgiveness. Yet perhaps the most enduring lesson lies in the connection between awareness and love. Jesus does not say that the woman loves more because she is morally superior. He says she loves more because she has been forgiven more, and she understands that forgiveness. Her awareness has opened a wellspring of gratitude that reshapes her entire response to Christ.

In modern life, the parable remains just as relevant as it was in the first century. Many people carry a complicated relationship with forgiveness. Some struggle to believe they deserve it, while others avoid confronting the need for it altogether. Yet the human heart continues to operate according to the same spiritual arithmetic described in the parable. Gratitude grows where forgiveness is recognized. Love deepens where grace is understood. Transformation begins where humility replaces comparison.

The world often measures value through achievement, reputation, and outward success. Yet Jesus’ teaching suggests that the most profound transformation occurs not when a person proves their worth but when they recognize their need for mercy. The story of the Two Debtors invites every listener to consider a question that cannot be answered through social comparison. It asks each person to examine their own awareness of grace. Because in the quiet mathematics of the soul, the depth of love a person can experience is inseparably connected to the depth of forgiveness they believe they have received.

As the scene in Luke 7 continues to unfold, the deeper layers of Jesus’ teaching begin to reveal themselves with increasing clarity. What initially appeared to be a simple illustration about financial debts becomes a profound exploration of how human beings relate to mercy, identity, and transformation. The brilliance of Jesus’ teaching style is that he does not confront Simon the Pharisee with accusation or humiliation. Instead, he invites Simon to reason his way toward the truth through the structure of the parable. In doing so, Jesus demonstrates that the human heart is often most effectively confronted not through forceful correction but through revelation. The parable quietly dismantles Simon’s assumptions without raising the emotional defenses that direct criticism would have provoked. It allows the truth to emerge naturally, almost gently, yet with undeniable force.

What Jesus reveals next carries extraordinary weight. After Simon answers the question about which debtor would love more, Jesus turns physically toward the woman while continuing to speak to Simon. That small detail in the narrative is deeply significant because it represents a shift in focus. Simon had been evaluating the woman from a distance, seeing her only through the lens of her reputation. Jesus now positions her at the center of the moment. While addressing Simon, Jesus draws attention to the contrast between Simon’s restrained hospitality and the woman’s overflowing devotion. He reminds Simon that no water was provided for his feet when he entered the house, yet this woman has washed them with her tears. Simon did not greet him with a customary kiss of peace, yet she has not stopped kissing his feet. Simon offered no oil for his head, yet she has poured expensive perfume upon his feet. Each comparison is gentle but unmistakable. Jesus is not merely describing behavior; he is exposing the difference between religious formality and heartfelt gratitude.

The heart of the parable begins to expand in meaning when we recognize that Jesus is not condemning Simon simply for poor hospitality. The deeper issue is spiritual perception. Simon cannot see what is happening in front of him because he believes he already understands the moral hierarchy of the room. In his mind, the woman represents failure while he represents righteousness. That assumption blinds him to the reality that the woman is demonstrating a deeper understanding of grace than he is. Her actions are not simply emotional excess; they are the visible evidence of a soul that has encountered mercy. Simon, by contrast, has reduced his relationship with God to a set of external standards that protect his sense of moral superiority.

Throughout history, this tension between external religion and internal transformation has remained one of the most enduring challenges within faith communities. People naturally gravitate toward systems that allow them to measure their spiritual standing through visible achievements. It feels safer to evaluate holiness through outward behavior than through the more difficult process of examining the motives and attitudes of the heart. Yet Jesus consistently redirected attention toward the inner condition of the soul. The parable of the Two Debtors belongs to a long pattern of teachings in which Christ dismantles the illusion that spiritual life can be reduced to performance.

The woman’s presence in the story represents something radically different from religious performance. Her actions are not calculated. She is not attempting to impress anyone or secure approval from the religious authorities watching her. In fact, she must have known that entering Simon’s home would expose her to public humiliation. Yet something stronger than fear has drawn her into that room. She has encountered Jesus, and in that encounter she has discovered the possibility that her past does not have to define her future. That realization produces a response that cannot be restrained by social expectations. Her tears become the language of a heart experiencing freedom for the first time.

There is a profound psychological truth embedded in that moment. When people believe their identity is permanently defined by failure, they often live within the confines of that identity. Shame has a way of shaping behavior by convincing individuals that they cannot become anything different from what their past suggests. Yet forgiveness disrupts that narrative. When a person truly believes they have been forgiven, the mental prison of shame begins to break apart. Hope replaces despair, and the future becomes something more than a continuation of past mistakes. The woman kneeling at Jesus’ feet is experiencing that shift in real time.

Jesus’ declaration of forgiveness therefore carries transformative power far beyond the moment itself. When he tells the woman that her sins are forgiven, he is not simply offering emotional comfort. He is redefining her place within the community of faith. In a culture where reputations could follow a person for life, Jesus speaks a new identity over her existence. He affirms that the mercy of God is capable of reaching into the most broken chapters of a person’s life and rewriting the story from that point forward. This is one of the central messages of the gospel: the past may explain where someone has been, but it does not have the authority to determine where they must remain.

The reaction of the other guests in the room reveals how disruptive this message was to the prevailing religious mindset. They begin asking among themselves who this man is that even forgives sins. The question exposes the tension between the established religious framework and the authority of Jesus’ ministry. Within the traditional understanding of the time, forgiveness of sins was mediated through temple rituals and priestly structures. Yet here is Jesus, sitting at a dinner table, declaring forgiveness directly to a woman whose life had been marked by moral failure. The implication is unmistakable. Jesus is claiming an authority that belongs to God alone.

For modern readers, it can be easy to overlook how revolutionary this moment would have felt to those witnessing it. The entire structure of religious power was built around systems that regulated access to forgiveness and spiritual acceptance. Jesus disrupts that system by offering forgiveness freely to someone who had no social standing, no religious credentials, and no moral reputation to support her. This act reveals the heart of God in a way that religious institutions had often obscured. Grace is not a reward for those who have already succeeded at moral perfection. Grace is the doorway through which imperfect people are invited into transformation.

The parable of the Two Debtors therefore exposes a paradox at the center of spiritual life. Those who believe they have little need for forgiveness often experience the least transformation because their awareness of grace remains shallow. Meanwhile, those who recognize the depth of their need often experience the most dramatic change because they understand what has been given to them. This does not mean that people must accumulate greater moral failure in order to experience deeper love. The point is not the quantity of wrongdoing but the clarity of awareness. Transformation begins when individuals stop minimizing their need for mercy and instead embrace the truth that grace is the foundation of their relationship with God.

This insight has profound implications for how communities of faith understand compassion and forgiveness in everyday life. When people forget the scale of mercy they themselves have received, they often become harsh judges of others. Moral superiority can grow quietly within the heart, disguised as commitment to righteousness. Yet Jesus’ teaching repeatedly reminds believers that humility must remain central to spiritual maturity. The moment someone begins to believe they stand above the need for grace is the moment their capacity for love begins to shrink.

The story of the Two Debtors invites every reader to examine the posture of their own heart. Are they approaching God with the quiet confidence of someone who believes they have little to be forgiven, or with the gratitude of someone who knows that mercy has reached into the deepest parts of their life? That question has the power to reshape not only personal spirituality but also the way individuals treat others. A heart that understands grace naturally extends compassion because it recognizes that everyone stands in need of the same mercy.

This understanding also changes how believers interpret the concept of justice. Human justice systems are built on the principle of repayment. Debts must be settled, wrongs must be corrected, and penalties must be enforced. Yet the gospel introduces a different dimension that does not eliminate justice but fulfills it through sacrifice. The cancellation of the debt in Jesus’ parable hints at a larger reality that would later be revealed through the crucifixion. The debt of humanity would not simply disappear; it would be carried by Christ himself. In that sense, the parable quietly foreshadows the central event of the Christian story.

When Jesus describes the lender forgiving both debts, he is revealing the heart of God long before the cross fully explains how that forgiveness becomes possible. The cross becomes the ultimate expression of the same truth contained in the parable: a debt humanity could never repay has been canceled through divine mercy. Understanding that reality transforms the relationship between believers and God. Faith ceases to be an anxious attempt to earn acceptance and instead becomes a response of gratitude to grace already given.

As people internalize this message, their perspective on everyday relationships begins to shift. Forgiveness toward others becomes easier when individuals recognize how deeply they themselves have been forgiven. Compassion replaces judgment because the categories of “us” and “them” begin to dissolve in the light of shared human weakness. The woman in Luke’s narrative becomes a living reminder that transformation is always possible when mercy meets humility.

The enduring power of this parable lies in its ability to speak across centuries with undiminished relevance. Every generation struggles with the same tension between pride and humility, between judgment and compassion, between performance and grace. The story of the Two Debtors cuts through these tensions with remarkable clarity. It reminds us that the depth of love a person experiences is not determined by their outward achievements but by their awareness of forgiveness.

In a world that often measures people by their mistakes or their accomplishments, the message of Jesus introduces a radically different standard. Identity is not defined by the worst chapter of someone’s past, nor by the illusion of moral superiority. Identity is reshaped through the encounter with grace. When forgiveness becomes real, love grows naturally from that foundation, and the human heart begins to reflect the compassion that lies at the center of God’s character.

The woman who entered Simon’s house likely left that evening carrying more than the memory of a dramatic moment. She left with a new understanding of who she was. The labels that once defined her no longer held the same power because she had encountered mercy face to face. The parable Jesus shared in that room continues to echo through history, inviting every listener to consider the same transformative truth. The greatest love emerges from the deepest awareness of forgiveness, and the doorway to that awareness is humility.

Your friend, Douglas Vandergraph

Watch Douglas Vandergraph’s inspiring faith-based videos on YouTube https://www.youtube.com/@douglasvandergraph

Support the ministry by buying Douglas a coffee https://www.buymeacoffee.com/douglasvandergraph

Donations to help keep this Ministry active daily can be mailed to:

Douglas Vandergraph, PO Box 271154, Fort Collins, Colorado 80527

from  Roscoe's Quick Notes

Rangers vs Mariners.

My Friday game to follow today is an MLB Spring Training game between the Texas Rangers and the Seattle Mariners. I don't have access to a video feed for this game; instead, I'm watching the score and stats update in real time on an MLB Gameday screen. The radio call of the game is coming from Seattle's KIRO 760 AM. GO Rangers!

The Covenant That Changed the Architecture of Reality

from Douglas Vandergraph

There are moments in Scripture where the curtain quietly opens and we are allowed to glimpse something far larger than the immediate words on the page. Hebrews 8 is one of those moments. At first glance it may seem like a theological explanation about priests, covenants, and temple systems, but if we slow down and listen carefully, we begin to realize that what the author of Hebrews is describing is nothing less than a restructuring of the spiritual architecture of the universe. Hebrews 8 does not merely clarify religious doctrine; it reveals that the entire system through which humanity relates to God has been transformed from the inside out. The writer is not interested in minor adjustments to religious practice. He is unveiling the replacement of an entire framework that governed human access to the divine for over a thousand years. When this chapter is understood in its full weight, it becomes clear that something breathtaking has happened through Jesus Christ. The old system that defined distance between humanity and God has been replaced with something radically different, something personal, internal, and alive.

The opening lines of Hebrews 8 immediately direct our attention upward. The writer explains that the true High Priest of believers is seated at the right hand of the throne of the Majesty in heaven. This single sentence carries enormous implications. In the Old Testament system, priests never sat down while serving in the temple because their work was never truly finished. Sacrifices had to be offered again and again because sin continued to exist, and the sacrificial system functioned as an ongoing temporary covering rather than a final solution. But the writer of Hebrews deliberately emphasizes that Jesus is seated. The work is complete. The sacrifice is finished. The system of endless repetition has been replaced by a decisive act that resolved the deepest problem humanity has ever faced. This is not merely poetic language. It is a theological declaration that the relationship between God and humanity now rests on something permanent rather than something provisional.

What makes Hebrews 8 particularly fascinating is that it shifts our focus away from the earthly temple entirely. For centuries, the temple in Jerusalem represented the center of Israel’s religious life. It was the place where heaven and earth symbolically met. It was the location where sacrifices were offered and where priests mediated between God and the people. Yet Hebrews tells us that this earthly system was only a shadow of something greater. The temple itself was not the ultimate reality. It was a symbolic preview of a deeper spiritual truth that would later be revealed in full clarity. The writer explains that the priests who served in the earthly temple were operating within a copy and shadow of the heavenly reality. That statement alone reshapes how we understand the entire Old Testament structure. It suggests that the elaborate system of rituals, sacrifices, priesthood, and temple worship was never intended to be the final destination. Instead, it functioned like scaffolding around a building that had not yet been completed.

To understand the magnitude of this idea, we need to appreciate how central the temple system was to ancient Jewish life. Every sacrifice, every priestly duty, every ritual purification was designed to maintain the covenant relationship between God and Israel. The law given through Moses structured every aspect of religious life. It governed moral behavior, community structure, and worship practices. Yet Hebrews boldly states that even this divinely established system contained a built-in limitation. It could reveal sin, it could temporarily address sin, but it could not fully transform the human heart. The law could instruct people about righteousness, but it could not permanently produce righteousness within them. This is not a criticism of the law itself. The law was holy and good. The limitation lay in the human condition. Humanity needed something deeper than external instruction. Humanity needed internal transformation.

The writer of Hebrews addresses this by pointing to a promise found centuries earlier in the writings of the prophet Jeremiah. Long before Jesus was born, Jeremiah had spoken of a future covenant that would differ fundamentally from the one given at Mount Sinai. The promise was radical. Instead of laws written on stone tablets, God would write His law directly on human hearts. Instead of a covenant maintained through external rituals, the relationship between God and His people would become internal and personal. Instead of a system where people needed priests to mediate their access to God, there would come a time when people would know God directly. This prophecy appears in Jeremiah 31, and Hebrews 8 quotes it extensively because it represents the heart of what Christ accomplished.

This promise of a new covenant reveals something profound about how God works throughout history. God often introduces a structure that prepares humanity for something greater that is still to come. The first covenant established through Moses created a moral framework that helped people understand the seriousness of sin and the holiness of God. It created categories of thought that allowed humanity to grasp concepts like sacrifice, atonement, and purification. Without that foundation, the work of Christ might have been impossible to understand. But once the fullness of God’s plan was revealed through Jesus, the earlier system had served its purpose. Hebrews states this very clearly when it says that by calling this covenant new, God has made the first one obsolete.

The word obsolete here does not mean that the old covenant was wrong or useless. It means that it has been fulfilled and surpassed. Just as a blueprint becomes unnecessary once the building has been completed, the old covenant prepared the way for something far greater. The writer is not dismissing the history of Israel. Instead, he is revealing how that history finds its ultimate meaning in Christ. The law pointed forward. The sacrifices pointed forward. The priesthood pointed forward. Every element of the old system functioned like a signpost directing humanity toward the moment when God would solve the problem of sin at its root.

When we consider this from a broader perspective, Hebrews 8 reveals something astonishing about the nature of God’s relationship with humanity. From the very beginning, God’s desire was not merely to give humanity rules to follow. His ultimate goal was always relational. God created humanity in His image, which means humanity was designed for connection with the Creator. Sin disrupted that relationship and introduced separation, guilt, and spiritual confusion into the human experience. The entire story of Scripture can be understood as God’s long and patient work to restore that broken relationship. The old covenant represented an early stage in that restoration process, but it could not accomplish the full transformation that humanity needed.

The new covenant described in Hebrews 8 addresses this problem in a completely different way. Instead of focusing primarily on external behavior, it focuses on internal renewal. God promises to write His laws on the minds and hearts of His people. This means that obedience is no longer driven primarily by external enforcement. It becomes the natural result of an inner transformation. The Spirit of God begins shaping the desires, motivations, and character of believers from the inside out. This represents a fundamental shift in how spiritual life operates. Instead of trying to achieve righteousness through human effort alone, believers are invited into a process where God Himself actively works within them.

This concept carries enormous implications for how we understand spiritual growth. Many people approach faith as if it were primarily about behavior modification. They believe that if they can simply try harder, follow the rules more carefully, and maintain stronger discipline, they will eventually become the people God wants them to be. While discipline and obedience are certainly important, Hebrews 8 reminds us that the foundation of the Christian life is not human effort but divine transformation. God is not merely asking people to conform to an external standard. He is offering to reshape the human heart itself.

One of the most beautiful promises in the new covenant appears in the statement that everyone will know God, from the least to the greatest. This line eliminates the spiritual hierarchy that often emerges within religious systems. Under the old covenant, knowledge of God was often mediated through priests, teachers, and religious leaders who held positions of authority within the community. While these roles served important purposes, they also created a certain distance between ordinary people and the presence of God. The new covenant collapses that distance. Every believer has direct access to God through Christ. Spiritual intimacy with God is no longer reserved for a select group of religious specialists. It becomes the birthright of every person who enters the covenant through faith.

This democratization of spiritual access is one of the most revolutionary aspects of Christianity. It means that the presence of God is not confined to temples, institutions, or specific geographic locations. The presence of God now dwells within the hearts of believers. The temple is no longer a building made of stone. The temple has become a living community of transformed people. This idea appears throughout the New Testament, where believers are described as the body of Christ and the dwelling place of God’s Spirit.

Another promise contained in the new covenant is that God will remember sins no more. This does not mean that God literally loses the ability to recall human wrongdoing. Rather, it means that the barrier created by sin has been permanently removed through the sacrifice of Christ. Under the old covenant, sacrifices had to be repeated continually because they symbolically addressed sin without fully removing its underlying power. The new covenant accomplishes something deeper. Through the work of Jesus, the power of sin to separate humanity from God has been decisively broken. Forgiveness is no longer temporary. It is complete.

When we reflect on this promise, it becomes clear that Hebrews 8 is describing a spiritual revolution. The system of religious striving that defined so much of ancient life has been replaced with a covenant built on grace, transformation, and direct relationship with God. The implications of this change reach into every corner of human experience. It affects how people understand forgiveness, identity, purpose, and spiritual growth. Instead of living under the constant pressure of trying to earn God’s approval, believers are invited to live from the reality that God’s approval has already been secured through Christ.

Yet even with this extraordinary gift, many people continue to live as if they are still under the old system. They carry unnecessary guilt, fear, and spiritual anxiety because they believe their standing with God depends primarily on their own performance. Hebrews 8 gently dismantles that misunderstanding. The new covenant is not sustained by human perfection. It is sustained by the finished work of Jesus, the High Priest who sits at the right hand of God because the work has already been completed.

When we begin to see Hebrews 8 in this light, the chapter becomes more than a theological explanation. It becomes an invitation to step fully into the new reality that God has created through Christ. It reminds believers that their relationship with God is not built on fragile human effort but on the unshakable foundation of divine grace. And when that truth settles deeply into the human heart, it begins to change everything.

The deeper we continue to walk into Hebrews 8, the more we begin to realize that the new covenant is not simply a religious improvement over an older system. It represents a fundamental transformation in the way God interacts with humanity. The old covenant revealed God's holiness and humanity’s inability to fully live up to that standard. It showed people what righteousness looked like, but it also exposed how far humanity had fallen from the life God originally intended. The sacrifices offered year after year became a constant reminder that something was still unfinished. They revealed the seriousness of sin, but they also pointed toward a future solution that had not yet fully arrived. Hebrews 8 invites the reader to understand that with Jesus Christ, that long-awaited turning point finally came. The temporary shadows that once guided humanity have now given way to the substance itself.

One of the most powerful ideas woven throughout this chapter is the difference between external religion and internal transformation. The old covenant relied heavily on outward obedience. People followed laws that were written on tablets, memorized commands that were recited within their communities, and participated in rituals that reminded them of God’s holiness and their need for purification. These practices were meaningful, and they created a shared identity for the people of Israel, but they could not reach into the deepest layers of the human soul where the true struggle with sin takes place. Anyone who has honestly examined their own heart understands this reality. Human beings often know what is right long before they actually begin living it. Knowledge alone does not always create transformation. The law could instruct, but it could not recreate the human heart.

This is where the promise of the new covenant becomes breathtaking in its scope. God declares that He will write His laws directly into the minds and hearts of His people. Instead of righteousness being imposed from the outside, it begins to grow from within. The Spirit of God becomes the active force shaping a believer’s thoughts, desires, and character over time. This is not instant perfection, but it is real transformation. It means that the Christian life is not simply about trying to mimic goodness through sheer effort. It is about allowing God to reshape the deepest motivations that drive human behavior. When the heart begins to change, obedience becomes less about obligation and more about alignment with the life God created humanity to experience.

The writer of Hebrews wants the reader to grasp that this new covenant changes the very nature of spiritual intimacy. Under the old system, the presence of God was associated with sacred spaces. The temple represented the meeting place between heaven and earth. Access to the most sacred part of the temple, the Holy of Holies, was restricted to the high priest, and even he could only enter once a year. The design of the temple communicated an important truth about God’s holiness, but it also reinforced the reality that humanity stood at a distance from Him. Barriers, curtains, and layers of ritual reminded people that approaching God required mediation and careful preparation. It was a sacred system, but it also emphasized separation.

The new covenant dismantles that distance in a remarkable way. Through Christ, the presence of God is no longer confined to a building or guarded by layers of priestly access. Instead, the presence of God becomes something that dwells within believers themselves. This is one of the most radical ideas ever introduced into human religious thought. The temple is no longer made of stone and gold. The temple becomes living people whose hearts have been renewed by the Spirit of God. This shift means that access to God is no longer defined by geography, ritual timing, or social position. Every believer carries the presence of God with them wherever they go.

This transformation has enormous implications for how faith is lived out in daily life. If the presence of God truly dwells within believers, then every moment becomes an opportunity to walk in awareness of that relationship. Faith is no longer confined to specific religious events or weekly gatherings. It becomes a continuous experience woven into ordinary life. Conversations, work, struggles, decisions, and quiet moments of reflection all become places where the relationship between God and His people unfolds. The sacred is no longer isolated inside temples. The sacred moves through the everyday lives of those who belong to God.

Another remarkable aspect of the new covenant is the promise that everyone within it will know God personally. This statement reaches far beyond intellectual knowledge. The kind of knowing described here refers to relational familiarity, the kind that develops through ongoing interaction and trust. In many religious systems, spiritual understanding is often concentrated among leaders or scholars who possess specialized knowledge. While teaching and leadership remain important within the Christian community, Hebrews emphasizes that every believer has direct access to the knowledge of God through the Spirit. This removes the idea that spiritual intimacy is reserved for an elite group of individuals. The invitation to know God personally extends to everyone, regardless of background, education, or social standing.

When we step back and view the entire biblical narrative, Hebrews 8 begins to feel like the culmination of a long story that started in the earliest pages of Scripture. In the Garden of Eden, humanity walked in direct fellowship with God. There were no temples, no sacrifices, and no barriers between the Creator and His creation. That original relationship was fractured by sin, and much of the biblical story describes God’s ongoing work to restore what was lost. The old covenant functioned as an important stage in that restoration process, but the new covenant brings humanity much closer to the original design. Through Christ, the distance introduced by sin is overcome, and the relationship between God and humanity is reestablished on a deeper foundation.

One of the final promises quoted in Hebrews 8 may be the most comforting of all. God declares that He will forgive the wickedness of His people and remember their sins no more. This statement touches on one of the deepest fears present within the human heart. Many people carry a quiet anxiety that their past mistakes permanently define them. They worry that their failures place them beyond the reach of grace, or that God’s patience will eventually run out. The new covenant speaks directly to that fear. Forgiveness in Christ is not partial, temporary, or fragile. It is decisive and complete. When God says He remembers sins no more, it means that those sins no longer stand as barriers between the believer and the presence of God.

This truth reshapes how believers understand their identity. Instead of defining themselves by past failures, they are invited to define themselves by the grace that God has extended to them. This does not mean that sin becomes irrelevant or that moral responsibility disappears. Rather, it means that transformation is now possible because the burden of guilt has been lifted. People who know they are forgiven are free to pursue growth without the crushing weight of condemnation hanging over them. They can move forward with humility and gratitude, trusting that God’s mercy is stronger than their past.

Hebrews concludes this section by stating that the old covenant is becoming obsolete and aging away. For the original audience of this letter, this statement would have been both profound and unsettling. The temple system had shaped Jewish religious life for generations. Suggesting that it was fading away would have sounded almost unimaginable. Yet the writer understood that God was doing something new through Christ that could not be contained within the old structures. History itself would soon confirm this shift when the temple in Jerusalem was destroyed in AD 70. The center of religious life would no longer revolve around sacrifices offered in a specific location. Instead, the faith of believers would revolve around the finished work of Jesus and the living presence of God within His people.

For modern readers, Hebrews 8 offers an invitation to rethink what it truly means to live in relationship with God. It challenges the tendency to reduce faith to routine or tradition. It reminds believers that Christianity is not merely about preserving religious forms from the past. It is about participating in the living covenant that God established through Jesus Christ. The law written on the heart, the Spirit dwelling within believers, and the complete forgiveness offered through Christ create a relationship with God that is both deeply personal and spiritually transformative.

When this chapter is understood in its full depth, it becomes clear that the new covenant is not simply a theological concept. It is the living foundation of the Christian life. It explains why believers can approach God with confidence. It explains why spiritual transformation is possible even for people who feel broken or unworthy. It explains why the message of Christ continues to spread across cultures and generations. The new covenant reveals that God’s ultimate desire has always been to dwell among His people and to restore the relationship that sin once shattered.

Hebrews 8 stands as a powerful reminder that the story of redemption is not merely about correcting human behavior. It is about renewing the human heart and restoring humanity’s connection with its Creator. Through Jesus Christ, the High Priest seated at the right hand of God, the barrier between heaven and earth has been overcome. The shadows have given way to the reality. The temporary has been replaced by the eternal. And every believer now stands invited into a covenant that will never fade away.

Your friend, Douglas Vandergraph

Watch Douglas Vandergraph’s inspiring faith-based videos on YouTube https://www.youtube.com/@douglasvandergraph

Support the ministry by buying Douglas a coffee https://www.buymeacoffee.com/douglasvandergraph

Donations to help keep this Ministry active daily can be mailed to:

Douglas Vandergraph, PO Box 271154, Fort Collins, Colorado 80527

from  Kroeber

Kroeber

#002299 – September 14, 2025

The same people in the music industry who raised an uproar over piracy, and even threatened to treat those who listened to and shared music as criminals, now shamelessly steal music from the people who created it in order to generate music with artificial intelligence. That output therefore involves no employment relationship and no copyright, other than the intellectual property in the software that was fed with the stolen music.

from  Kroeber

Kroeber

#002298 – September 13, 2025

Benn Jordan and his “adversarial music,” poisoning the data that will later be cannibalized by artificial intelligence. A resistance is taking shape among artists, who are finding ways to sabotage the theft they are subjected to. Jordan calls it Poisonify.

2026-03-05

from Two Sentences

I needed to catch up on sleep. As a wise friend said at dinner today: “I gotta sleep early”; and so I did.

When the World Punishes Truth: The Quiet Courage of Living Honestly in an Age of Illusion

from Douglas Vandergraph

There are moments in history when truth becomes uncomfortable, not because it has changed, but because the surrounding world has drifted so far from it that its presence exposes the illusion everyone has grown accustomed to living within. Human societies have always constructed narratives about themselves, stories that explain who they are, what they value, and where they believe they are going. These narratives can be noble when they align with truth, but they become fragile the moment they depend on denial, distortion, or convenient silence. At that point something subtle begins to happen. The culture gradually rewards those who repeat the approved story and quietly punishes those who question it. In those seasons the person who simply speaks honestly can suddenly appear rebellious, disruptive, or even dangerous. What once would have been called integrity begins to look like treason, not because truth has become radical, but because the environment surrounding it has grown allergic to reality. This strange dynamic is not new to our generation, nor is it unique to modern society. It has existed for as long as human beings have struggled with the tension between comfort and truth, and Scripture reveals that this conflict lies at the very center of the spiritual battle that has unfolded since the beginning of human history.

The biblical story begins with an act of creation rooted entirely in truth. God speaks, and reality forms in perfect alignment with His word. Light separates from darkness, land rises from the waters, and life begins to flourish in every corner of creation. Nothing in that original moment is built on illusion, manipulation, or deception. Everything is grounded in the simple authority of divine truth expressed through the voice of God. Humanity is then created in the image of that truth-speaking Creator, meaning that the human mind, heart, and spirit were designed to function best when aligned with reality as God defines it. This alignment is not merely intellectual but relational, because truth in Scripture is never presented as an abstract concept detached from life. Truth is the atmosphere in which human beings were meant to live, breathe, and flourish. It is the moral oxygen of the soul. When humanity walks in truth, everything from relationships to communities to nations begins to reflect a harmony that mirrors the character of God Himself. But when truth becomes distorted or ignored, the consequences ripple outward in ways that gradually reshape every aspect of life.

The first fracture in humanity’s relationship with truth occurred in a moment that seemed deceptively small. The serpent in the garden did not begin with an open declaration of rebellion against God, nor did he attempt to overpower Adam and Eve with force or intimidation. Instead he introduced something far more subtle and far more powerful: doubt about the reliability of God’s words. His strategy revolved around one simple question that has echoed through human history ever since. “Did God really say?” In that moment the serpent shifted the conversation away from trust in divine truth and toward human interpretation, speculation, and suspicion. Once that door opened, the ground beneath humanity’s understanding of reality began to move. If God’s word could be questioned, then truth itself became negotiable. If truth became negotiable, then the authority to define reality shifted away from the Creator and toward the creature. What followed was not simply a moral mistake but the birth of a pattern that continues to shape the world today: whenever humanity attempts to construct reality apart from God’s truth, confusion multiplies and disorder spreads.

This ancient moment in the garden explains something about the world we now inhabit. Human beings possess an extraordinary capacity for creativity, intelligence, and innovation, yet they also possess an equally powerful ability to construct narratives that protect their comfort even when those narratives drift away from truth. Entire cultures can gradually become invested in illusions that feel stable and reassuring while quietly ignoring the deeper reality beneath them. These illusions often become reinforced by systems of influence, authority, and tradition until they appear nearly unshakeable. In such environments speaking truth can feel socially disruptive because truth threatens the stability of the illusion itself. The person who calmly points out reality may not intend to challenge the system, but the system reacts defensively because it senses the potential collapse that truth could bring. This is why throughout history truth tellers have often been treated not as guides back to reality but as threats to the existing order.

The biblical prophets understood this tension with remarkable clarity. Men like Isaiah, Jeremiah, and Ezekiel were not political agitators in the conventional sense, yet their commitment to speaking God’s truth placed them in direct conflict with the comfortable narratives of their time. These prophets often confronted leaders who preferred reassuring messages over honest warnings. They addressed communities that had grown accustomed to hearing what they wanted to hear rather than what they needed to hear. When prophets spoke about injustice, idolatry, or moral drift, the reaction was rarely celebration. Instead they encountered resistance, ridicule, and sometimes outright persecution. The reason was simple: their words exposed the gap between the reality God saw and the story people preferred to believe about themselves. That exposure felt threatening because acknowledging truth would require change, humility, and repentance. As long as the illusion remained intact, life could continue without disruption.

This pattern reaches a profound climax in the life of Jesus Christ. When Jesus entered the world, He did not arrive merely as a teacher offering interesting ideas about morality or spirituality. He came as the embodiment of divine truth itself. The Gospel of John opens with language that reveals the depth of this reality, describing Christ as the Word through whom all things were made. The same voice that spoke creation into existence now walked among humanity in human form. Truth was no longer simply spoken from heaven; it was standing in the streets, healing the sick, teaching crowds, and confronting the distortions that had taken root within religious and political systems alike. Jesus did not seek conflict for its own sake, but His presence naturally created tension wherever illusion had replaced truth. His teachings challenged the hypocrisy of religious leaders who valued outward appearance over inner transformation. His compassion exposed social systems that ignored the suffering of the marginalized. His authority unsettled political structures that depended on control rather than justice.

One of the most revealing moments in the life of Jesus occurs during His trial before Pontius Pilate. Pilate represents the Roman Empire, the most powerful political force of that era, a system built on authority, order, and the preservation of imperial stability. As Jesus stands before him accused of threatening the established order, Pilate asks a question that has echoed through centuries of human reflection: “What is truth?” The question itself reveals something about the mindset of power. Pilate speaks as someone who has seen countless political narratives, competing claims, and shifting loyalties. In his world truth may appear fluid, shaped by perspective and circumstance. Yet the irony of the moment is striking. The man asking the question is standing face to face with the embodiment of truth and still cannot recognize it. This encounter illustrates how systems built primarily on maintaining power can gradually lose the ability to perceive truth clearly. When stability becomes the highest priority, truth becomes inconvenient if it threatens the existing structure.

The crucifixion that follows might appear, from a purely earthly perspective, to be the victory of the empire over truth. Jesus is condemned, executed, and placed in a tomb under the authority of the state. For those watching from the outside, the machinery of power seems to have succeeded in removing the threat. Yet the resurrection three days later reveals a deeper reality about the nature of truth itself. Truth can be attacked, silenced, and temporarily buried, but it cannot ultimately be destroyed. The resurrection of Christ stands as the most profound demonstration that divine truth does not depend on human approval or institutional protection in order to endure. While empires rise and fall across centuries, the truth embodied in Christ continues to transform hearts, cultures, and civilizations. The Roman Empire that once executed Jesus eventually faded into history, while the teachings of Christ spread across continents and generations.

This reality carries profound implications for believers living in any era. Every generation eventually encounters moments when speaking truth becomes costly. Sometimes the cost is social, involving misunderstanding or criticism. Sometimes the cost is professional, affecting opportunities or advancement. In other cases the cost may involve deeper forms of opposition that test a person’s courage and faith. The question that arises in those moments is not simply whether truth is correct, but whether the individual is willing to stand with it even when doing so carries consequences. Scripture consistently portrays this choice as a defining element of faithful living. The heroes of faith described in the Bible were not individuals who avoided tension but individuals who remained aligned with God’s truth even when it placed them at odds with prevailing cultural narratives.

At the same time the Bible never portrays truth as a weapon intended to crush or humiliate others. The life of Jesus demonstrates that truth and compassion are inseparable. Christ spoke truth boldly, yet He also extended grace to those who were lost, confused, or trapped within deception. His confrontations were directed primarily toward systems of hypocrisy and power that oppressed others, not toward individuals who were sincerely searching for understanding. This balance is essential for believers seeking to live truthfully in a complex world. Truth without compassion can become harsh and self-righteous, while compassion without truth can drift into sentimentality that fails to address reality. The example of Christ calls believers to hold both together, reflecting a character that is firm in conviction while generous in mercy.

Living in truth also requires a deep internal alignment that begins within the heart of the individual. Before believers can speak truth into the world, they must first allow truth to reshape their own lives. This involves examining personal motives, confronting hidden fears, and surrendering areas where self-deception may have taken root. The spiritual journey is not merely about correcting external behavior but about transforming the inner landscape of the soul so that it reflects the character of Christ. When this transformation begins to take place, a quiet confidence emerges. The believer no longer depends on the approval of shifting cultural trends for identity or purpose. Instead identity becomes rooted in the unchanging truth of God’s love and calling.