
When Generosity Stops Being a Law and Becomes a Life

from Douglas Vandergraph

There comes a moment in every believer’s journey when the familiar practices of faith begin to feel too small for the questions rising inside them, and few subjects expose this tension more than tithing and church giving. For generations, Christians have been told that the ten-percent tithe is the unshakable standard for honoring God with financial obedience, and many have embraced this with sincerity, devotion, and even sacrificial commitment. Yet in quiet corners of their hearts, some have also wondered why the New Testament does not repeat the command, reinforce the number, or regulate giving with the same legal weight that defined ancient Israel’s system of temple support. The more a person reads the Gospels and the letters of the apostles, the more they discover that something shifts at the cross—something profound enough to reframe the entire conversation about generosity. This shift is not merely doctrinal but deeply relational, and it replaces obligation with transformation in a way that many churches today rarely explain from the pulpit. When believers begin to uncover this truth for themselves, they often find that their understanding of giving is not weakened but strengthened, not reduced but reborn, and not restricted by percentages but expanded by love. This article invites the reader into that journey, exploring how the early Christian community navigated finances, shared resources, and embodied generosity as an expression of grace instead of a requirement of law.

When we look honestly at the New Testament, we are not confronted by a continuation of Levitical tithing but by a radical reorientation of the human heart toward the life of God. Jesus does not instruct His disciples to tithe, nor does He command the early church to maintain the temple tax, yet He speaks often and directly about the posture of the heart toward money. He challenges greed, warns against storing treasure on earth, invites believers into a life of trust, and praises the widow who gave everything while giving almost nothing in measurable value. His teaching reveals that generosity is not meant to be measured by calculators but by character, not by the percentage given but by the condition of the heart doing the giving. The idea that one could fulfill righteousness through a fixed amount collapses under the weight of a kingdom built on sacrificial love. To follow Jesus is not to ask, “How little can I give and still be obedient?” but rather, “How fully can I live open-handed in a world trying to close my fists?” The tithe served a purpose under a covenant designed to shape a nation, sustain a priesthood, and preserve temple worship, but the cross ushers in a kingdom that no longer needs those structures to reveal the presence of God. As the curtain in the temple tears from top to bottom, the entire framework of religious obligation gives way to a new creation built on grace, and financial stewardship becomes a response to love rather than a requirement of law. This is the heartbeat of New Testament giving, and the early Christians embraced it with a passion that still echoes through the centuries.

It is important to understand that ancient Israel did not give one tithe but several, adding up to far more than ten percent when all requirements were combined. The people supported the Levites, provided for festivals, cared for the poor, and participated in a national economic system intertwined with worship, land ownership, and community identity. The tithe was part of a theocratic structure in which civil, ceremonial, and religious life were inseparable. When pastors today preach that Christians are commanded to give ten percent because “the Bible says so,” they are often collapsing an entire ancient economic system into a single generalized instruction. By the time Jesus steps into human history, the temple tithe is still practiced, but the heart of the people has drifted far from the God who instituted it. Jesus confronts this disconnect not by reinforcing the tithe but by exposing the deeper issue: the human heart had become a place of calculation instead of communion. His sharpest critiques fall upon those who tithed meticulously yet ignored justice, mercy, and faithfulness. He does not condemn the practice itself, but He makes it clear that the measure of devotion cannot be contained in numerical requirements. The coming kingdom demands something far more costly than percentages—it demands the transformation of the entire person. When the church was born, the apostles understood this shift, and they never reinstituted the tithe as a Christian requirement. Instead, they taught giving rooted in grace, accountability, compassion, and voluntary generosity inspired by the Spirit of God.

The book of Acts provides the clearest window into the heart of early Christian giving, and its picture is breathtaking in its simplicity and its power. The believers shared what they had not because they were commanded but because their hearts were awakened to a new kind of community. They sold property, redistributed resources, and ensured no one lived in lack, not because a legal system forced it but because love compelled it. Their generosity flowed from the realization that everything they possessed was ultimately God’s and that the Spirit was forming a family where each member was responsible for the well-being of the others. This was not socialism, nor was it religious taxation. It was voluntary, Spirit-driven, relational generosity shaped by the reality of resurrection life. The apostles never assigned percentages, never established tithing quotas, and never demanded fixed contributions from their congregations. Instead, they emphasized willing hearts, cheerful giving, and contributions that reflected gratitude, unity, and mutual care. The absence of the tithe in every instruction to the early churches is not accidental—it reveals that the apostles understood the difference between living under law and living under grace. Paul, Peter, James, and John never once instruct believers to give ten percent, yet they speak constantly about generosity, stewardship, accountability, and supporting the work of the ministry. Their vision of giving is not smaller than the tithe; it is far greater. It expands beyond the boundaries of obligation and invites believers into a life where everything belongs to God and generosity becomes a hallmark of spiritual maturity.

One of the clearest teachings on giving in the New Testament comes from Paul’s letters to the Corinthians, where he addresses financial support for the church and relief for suffering believers. His teaching is thorough, practical, spiritual, and strikingly free of legalism. Paul never once cites the tithe as a standard for the Christian life. Instead, he outlines a pattern rooted in voluntary generosity, intentional planning, sacrificial love, and joy. He tells believers to give as they have been prospered, but he does not assign a percentage or define prosperity in rigid terms. He emphasizes cheerful giving, which cannot be commanded without contradicting its essence. He avoids coercion, refuses manipulation, and rejects the idea that giving should be driven by guilt. His instruction is pastoral and relational, encouraging believers to participate in the work of the gospel and the care of the poor in ways that reflect their personal capacity and spiritual maturity. In short, Paul redefines giving from something believers must do to something they get to do. The difference is staggering. It shifts giving from a tax to an act of worship and from a duty to a declaration of devotion. The power of his teaching lies not in how much he asks believers to give but in how deeply he calls them to belong to one another through generosity.

Yet it is equally vital to acknowledge that the New Testament does not portray giving as optional or casual. Grace does not lower the standard; it raises it. The absence of law does not free believers from responsibility; it deepens their accountability to love. Generosity becomes a reflection of discipleship, an outflow of transformation, and a response to the love of Christ. The early church took this seriously. They did not give less than the tithe; in many cases, they gave far more. They gave until the needs of the community were met. They gave until the gospel advanced. They gave until their love became visible. Their giving was measured not by the amount they contributed but by how little they held back. The tithe asked for a portion; grace asks for the whole heart. The tithe required a percentage; grace requires participation in the life of the kingdom. The tithe demanded obedience to a number; grace calls believers into a life where generosity becomes a natural expression of character shaped by God. When churches today emphasize the tithe without teaching the deeper call of the New Testament, they risk reducing generosity to a transaction rather than elevating it to an act of transformation.

The modern conversation around tithing becomes even more complicated when we consider the financial structures of contemporary churches. Buildings must be maintained, staff must be paid, ministries must be supported, and outreach must be funded. Many pastors lean on the tithe not because the New Testament commands it but because churches struggle to survive without predictable financial support. Yet this practical need does not rewrite Scripture, nor does it justify teaching requirements the apostles never gave. The early church survived and flourished without commanding a ten-percent tithe. They relied on love, community, stewardship, and Spirit-led generosity. They trusted that if believers were spiritually healthy, giving would follow naturally. They believed that teaching truth was more important than maintaining institutions. While modern churches face challenges the early church did not, the solution is not to reimpose the law but to deepen discipleship. When people fall in love with God, generosity becomes instinctive. When believers understand the mission of the gospel, they give toward it joyfully. When a church teaches stewardship as a lifestyle instead of a requirement, financial health follows as a fruit rather than a demand. The New Testament model invites us to build communities where giving flows from love, participation, and purpose, not pressure.

As the early church expanded beyond Jerusalem and reached into cities like Corinth, Ephesus, Philippi, and Thessalonica, the apostles consistently reinforced a model of giving that was relational rather than legal, communal rather than transactional, and deeply spiritual rather than merely financial. Their writings reveal an unbroken commitment to generosity rooted in changed hearts rather than external compulsion. Believers contributed to the needs of the saints, supported missionaries, cared for widows and orphans, and funded the spread of the gospel across the Roman world. These contributions were sometimes substantial, sometimes modest, and always voluntary. Paul often thanked churches for their generosity by highlighting their poverty, not their wealth, demonstrating that sacrificial giving is never measured by the size of the gift but by the depth of the devotion behind it. The Macedonian believers, described as giving beyond their ability, stand out as a profound example of New Testament generosity. They were not obeying a law; they were overflowing with grace. This distinction matters, because when generosity becomes a fruit of the Spirit rather than an obligation of the law, the result is a community shaped by love rather than fear, by abundance rather than scarcity, and by participation rather than pressure.

One of the most misunderstood passages concerning giving appears in Jesus’ teaching about the Pharisees, where He acknowledges their tithing practices before redirecting them to the weightier matters of the law. Many have taken these words as evidence that Jesus endorsed tithing for all believers, but the context reveals something entirely different. Jesus was speaking to Jews still living under the Old Covenant, still bound to temple worship, still subject to the law that He had not yet fulfilled. His acknowledgement of their tithe is not an instruction for Christians but a confrontation aimed at revealing their hypocrisy. They tithed carefully but neglected justice, mercy, and faithfulness. His teaching was not about how much they gave but how little their giving meant in the absence of a transformed heart. The cross had not yet taken place; the New Covenant had not yet been inaugurated. After the resurrection, after the church was born, after grace replaced law as the foundation of Christian discipleship, the apostles never instructed Gentile believers to adopt Israel’s tithing system. Instead, they invited them into a generosity shaped by gratitude for the gospel, partnership in the mission, and love for the family of faith. This shift is not subtle. It reshapes the entire landscape of Christian giving and exposes how incomplete many modern teachings on tithing truly are.

It is also important to recognize that the New Testament church operated in a world without church buildings, staff salaries, mortgages, utility bills, or modern budgetary pressures. Their gatherings were often in homes, open spaces, or borrowed facilities. Their resources flowed directly into the lives of believers and the advancement of the gospel rather than into institutional structures. This reality does not delegitimize the needs of modern churches, but it does provide perspective. Church expenses today can be significant, and ministries require financial support to function effectively. However, the solution is not to reinterpret Scripture but to cultivate communities of believers who understand the mission deeply enough that their generosity naturally sustains it. When pastors teach stewardship as a way of life rather than a numerical obligation, people respond with a sincerity that far exceeds the limits of a percentage. Generosity grows best in soil prepared by discipleship, prayer, transparency, and spiritual maturity. When the church operates with integrity, communicates its needs openly, and models accountability, believers are more likely to participate joyfully in supporting the ministry. The early church did not rely on the tithe, and yet the gospel spread like wildfire across nations, cultures, and languages. What propelled them was not a mandatory system but a movement of hearts awakened to the reality of Christ’s love.

In exploring the New Testament model of giving, it becomes clear that Jesus consistently elevates the conversation from obligation to transformation. He praises extravagant generosity not because of the amount but because of the surrender it reflects. He challenges a rich young ruler not to fulfill a percentage but to release a rival god from his heart. He teaches that where our treasure is, there our heart will be also, making generosity not merely an economic act but a spiritual diagnostic. He warns against storing up earthly riches, knowing that money often becomes the greatest competitor for human devotion. He encourages believers to give in secret, protecting generosity from becoming a performance. These teachings reveal that the essence of giving in the kingdom is not about meeting quotas but about cultivating hearts free from greed, fear, and self-reliance. The New Testament does not ask believers to calculate a tenth; it asks them to calculate the cost of discipleship. It is not concerned with the precision of one’s financial math but with the posture of one’s spirit. When the heart is transformed, generosity follows instinctively, joyfully, and powerfully, reflecting the character of Christ rather than the requirements of law.

Another crucial piece of this conversation is the relationship between giving and trust. The tithe under the Old Covenant functioned as a tangible reminder that everything belonged to God, but under grace, trust becomes the foundation of all generosity. The New Testament repeatedly links giving with faith, encouraging believers to see their resources not as possessions but as tools for kingdom impact. Paul’s teaching to the Corinthians emphasizes that God supplies seed to the sower, making generosity a cycle of provision rather than a loss. He assures the church that God is able to make all grace abound, freeing them from the fear that generosity will lead to lack. This promise does not guarantee wealth but assures believers that God will equip them for every good work. Giving becomes an expression of confidence in God’s faithfulness, a declaration that our security is found in Him rather than in what we store. When Christians understand this truth, they step into a level of freedom that transforms the way they view money. Fear loosens its grip, generosity becomes joyful, and stewardship becomes a natural extension of discipleship. Trust and generosity are inseparable in the life of the believer, and this truth is at the core of the New Testament model.

However, while the New Testament does not require the tithe, it does require something much deeper: a life fully surrendered to God. Grace does not shrink responsibility; it expands it. The call to follow Jesus includes every part of a believer’s life—time, talents, relationships, and finances. The early church understood that belonging to Christ meant holding nothing back. They lived with open hands, ready to share, ready to give, ready to serve, ready to respond to the needs of the body. Their generosity was not formulaic but dynamic, shaped by the needs of their community and the leading of the Spirit. They gave not because they had to but because they could not imagine doing anything else in light of what Christ had done for them. Their giving was not an act of compliance but an act of worship. They did not measure generosity by what they contributed but by how fully they lived for the kingdom. This is the model that many modern churches overlook when they reduce giving to a percentage. The New Testament asks for the heart, not for the tithe, and a surrendered heart always exceeds the boundaries of legal obligation.

There is a quiet tragedy in how often Christians today feel guilt, pressure, or shame around giving. Many have been taught that failure to tithe brings curses, misfortune, or spiritual blockage. Others have been told that blessings are directly tied to whether they give ten percent, turning generosity into a transactional exchange rather than a relational expression of faith. These teachings distort the heart of the gospel and misrepresent the character of God. In Christ, believers are invited into a relationship where blessings flow from grace, not from performance. Generosity is meant to be joyful, not fearful. It is meant to be liberating, not burdensome. It is meant to reflect love, not legalism. When giving becomes a tool for manipulation or fear, it ceases to reflect the kingdom Jesus proclaimed. The New Testament never weaponizes giving. It never threatens believers with curses for failure to meet percentages. Instead, it invites them into a life shaped by gratitude, trust, and participation in the mission of God. When churches rediscover this truth, they do more than free their people—they free themselves from the need to sustain their ministries through pressure instead of through discipleship.

One of the most beautiful outcomes of embracing New Testament generosity is the way it transforms community. When believers give from the heart, relationships deepen, empathy grows, and the church becomes a place where needs are met through love rather than obligation. People feel seen, valued, and supported. Giving ceases to be a ritual and becomes a shared expression of belonging. The early church understood this intuitively. Their generosity created a community that astonished the ancient world, a fellowship so compelling that people were drawn to it even before they understood the message of Jesus. They witnessed a love that transcended status, wealth, culture, and background, and they saw lives marked by sacrifice that reflected the character of Christ. This kind of generosity cannot be produced by legalism. It arises only when people have encountered grace so profoundly that they cannot help but mirror it with their lives. When the modern church embraces this model, it becomes a beacon of light, compassion, and transformation, demonstrating the power of a community shaped by the Spirit of God.

In the end, the question is not whether Christians should give but how they should give. The New Testament answers this with clarity: believers give willingly, cheerfully, sacrificially, intentionally, and relationally. They give in response to grace, not in obedience to law. They give not because ten percent is required but because one hundred percent belongs to God. They give not to earn blessings but to participate in the work of the kingdom. They give not to avoid curses but to reflect the character of Christ. The early church did not tithe—they did something far more powerful. They lived generously in every aspect of their lives, demonstrating that the love of God transforms not only the soul but the wallet, the priorities, and the way one sees the world. When modern believers return to this vision, they discover a freedom that legalism cannot offer and a joy that calculation cannot produce. Generosity becomes a way of life, a mark of discipleship, and a testimony to the world that the people of God are defined not by what they must give but by what they are empowered to give through grace.

The deeper you explore what the New Testament truly says about tithing and church giving, the more you discover that the question was never about percentages. It was always about the heart. It was about trust, transformation, love, and participation in the mission of God. The early church did not need the tithe to flourish, and neither does the modern believer need legalism to live generously. What we need is the same Spirit that filled those early Christians with courage, compassion, and conviction. When that Spirit shapes the heart, generosity becomes as natural as breathing, and giving becomes one of the most powerful ways to embody the love of God in a world desperate for hope. The New Testament invites us into this life—a life where generosity is not a rule but a rhythm, not a calculation but a calling, not a burden but a blessing. When believers embrace this truth, they step into a freedom that transforms both their lives and their communities, revealing the kingdom of God not in theory but in practice. This is what the apostles taught. This is what Jesus modeled. This is what the early church lived. And this is what every believer is invited to rediscover: a generosity shaped by grace, sustained by love, and empowered by the Spirit who makes all things new.

Your friend, Douglas Vandergraph

Watch Douglas Vandergraph's inspiring faith-based videos on YouTube: https://www.youtube.com/@douglasvandergraph

Support the ministry by buying Douglas a coffee: https://www.buymeacoffee.com/douglasvandergraph

Monday

from Roscoe's Story

Roscoe's Story

In Summary: * Got my weekly laundry all done today: 2 loads, washed, dried, folded and put away. So, productive. Now I'm fully into radio basketball mode. Go Spurs Go!

Prayers, etc.: * I have a daily prayer regimen I try to follow throughout the day from early morning, as soon as I roll out of bed, until head hits pillow at night. Details of that regimen are linked to my link tree, which is linked to my profile page here.

Starting Ash Wednesday, 2026, I'll add this daily prayer as part of the Prayer Crusade Preceding SSPX Episcopal Consecrations.

Health Metrics:
* bw= 226.64 lbs.
* bp= 130/80 (70)

Exercise: * morning stretches, balance exercises, kegel pelvic floor exercises, half squats, calf raises, wall push-ups

Diet:
* 05:55 – 1 banana
* 07:05 – 1 peanut butter sandwich
* 09:25 – whole kernel corn
* 10:30 – breaded pork chop
* 14:00 – noodles, green vegetables and egg rolls, rice, cooked pork

Activities, Chores, etc.:
* 04:00 – listen to local news talk radio
* 05:00 – bank accounts activity monitored
* 05:20 – read, pray, follow news reports from various sources, surf the socials, and nap
* 09:20 – start my weekly laundry
* 10:20 – watching old episodes of Classic Doctor Who
* 14:00 to 15:00 – watch old game shows and eat lunch at home with Sylvia
* 15:00 – listen to The Jack Riccardi Show
* 17:00 – tuned to 1200 WOAI for the call of tonight's Spurs game, and the pregame and postgame shows.

Chess: * 16:00 – moved in all pending CC games

A New Dawn in the Shadow of the Temple

from Douglas Vandergraph

There are chapters in Scripture that feel like standing with your back against eternity while the winds of history rush past your face, and Luke 21 is one of them. It reaches toward you with a weight that refuses to be ignored, a gravity that draws you in even if you think you already understand it. This chapter does not simply record what Jesus said; it reveals the steady pulse of a Savior who refuses to let His people drift blindfolded into the storms that are coming. It reveals the quiet authority of the One who sees the beginning and the end with equal clarity, who can stand inside a single moment while still speaking with the language of ages. And if you read it slowly enough, long enough, honestly enough, you begin to realize the chapter is not merely about future events or ancient warnings, but about the condition of the human spirit when confronted with a world unraveling. It is about you. It is about me. It is about what endures when civilizations tremble and kingdoms collapse. It is about what matters when nothing else does. In this way, Luke 21 is less a prophecy lecture and more a mirror, revealing what Jesus sees when He looks at the world, at His disciples, and at the generations that will follow long after their footsteps fade from the dust of Jerusalem’s streets.

Many readers rush past the opening scene as if it were only a prelude, but that quiet moment with the widow’s offering is the doorway through which the rest of the chapter must be understood. In a way only Jesus could design, the entire architecture of the chapter hangs on that single act of surrender. While others give from comfort, the widow gives from collapse. While others donate without denting their future, she gives in a way that costs her the very life she is trying to sustain. And Jesus watches this with an attentiveness that tells you something about the Kingdom that human systems never quite grasp. Strength is not measured by what you have, but by what you willingly release. Security is not built by accumulation, but by trust. In a world obsessed with the visible, God is drawn to what cannot be seen: the motive of the heart, the posture of dependence, the quiet courage of a soul that refuses to hoard what little it has because it believes God is still enough. That is why her story is the key that unlocks the chapter. Luke 21 will speak of collapse, signs, upheaval, and events that shake nations, yet the first image offered is a woman who trusts God in a world that offers her nothing back. Jesus seems to be saying that no matter what happens next, those who stand firm in that spirit will never be swept away.

This becomes essential as the chapter pivots toward the disciples’ admiration of the Temple. They see stonework; Jesus sees history’s expiration date. They see permanence; Jesus sees impermanence disguised in stone. They see strength; Jesus sees fragility beneath the surface. And He does not rebuke their admiration; He simply replaces it with reality. The things you assume will stand forever may fall in your lifetime. The structures you believe are too large to fail may crumble within a generation. The systems you anchor yourself to may not survive the pressure of the future. Jesus is not trying to frighten them; He is trying to free them. He is teaching them that the Kingdom of God is never secured by architecture, institutions, or the visible pillars of culture. It is secured by the human heart fully yielded to the will of the Father, the way the widow was. The Temple itself, magnificent and seemingly eternal, would fall. But the faith forged in surrender, humility, and trust would outlast it. This is the heartbeat of Luke 21: the collapse of the temporary is not the collapse of the eternal.

When Jesus begins to speak of wars, earthquakes, famines, and fearful signs, He is not offering sensational predictions; He is offering perspective. Humanity tends to interpret turmoil as evidence that God has abandoned the world, yet Jesus reframes the coming chaos not as abandonment but as transition. The old world trembles when the new world begins to break through its cracks. The Kingdom of God does not rise without friction against the kingdoms of earth. And the more tightly the world clings to its illusions of control, the harder it shakes when confronted by the One who actually holds time in His hands. Jesus speaks of these events not as random disasters, but as birth pains. The imagery is intentional. Pain with purpose. Turmoil with trajectory. Suffering that leads somewhere. It is a difficult truth, but a liberating one: God does not lose control when the world loses stability. The shaking is not a sign of His absence, but a sign that the earth cannot remain as it is when the Kingdom draws near.

Then Jesus turns the lens inward, shifting from geopolitical events to the personal cost His followers will endure. Before any world-ending calamity arrives, the disciples themselves will face betrayal, accusation, persecution, and hostility from those closest to them. Jesus does not romanticize faithfulness; He describes it with precision. Following Him will not insulate them from the world’s anger; it will provoke it. Their allegiance will expose the insecurity of those who cling to worldly power. Their peace will confront the chaos in others’ hearts. Their loyalty to the Kingdom will create conflict with the kingdoms around them. Yet Jesus gives them a promise unlike anything found in any other worldview: when you stand before rulers, accusers, or adversaries, do not worry about your defense, for the Spirit of God will speak through you in that moment. In a world where every voice tries to secure its own platform, Jesus offers something radically different: the assurance that when your moment comes, the words will not be born from your own strength but from His presence within you. For the disciples and for every generation after them, this promise becomes the foundation of courage.

As Jesus continues describing events that would eventually unfold in the destruction of Jerusalem, His words carry both compassion and solemnity. He warns of days when fleeing will be wiser than fighting, when discernment will be more crucial than bravado, and when survival will require humility rather than stubborn allegiance to what God is removing. There is a tenderness in His warnings, because He is not trying to frighten; He is trying to shepherd. He is preparing His followers to recognize when judgment is unfolding and when the window of escape is open. The fall of Jerusalem was not just a historical event; it was a collision between human rebellion and divine patience reaching its limit. And Jesus, knowing what was coming, chose not to leave His disciples unprepared. His warnings were acts of love, not fear.

Yet Luke 21 never allows itself to become merely historical. Jesus expands the horizon again, lifting the conversation from the fall of one city to the trembling of the entire world. Signs in the sun, moon, and stars. Nations in distress. The roaring of the seas. People fainting from fear as the powers of heaven themselves appear unstable. All of these images are meant to communicate something deeper than astronomical or meteorological events. They speak of a world losing its ability to hold itself together, of creation groaning under the weight of human rebellion, of cosmic systems responding to the spiritual reality unfolding within history. Jesus is not describing hysteria; He is describing the moment when the fabric between the seen and unseen becomes thin enough for humanity to realize that it is not the master of the universe. And then, in the midst of global fear, Jesus offers a sentence that has comforted believers for centuries: when you see these things begin to take place, stand up and lift your heads, for your redemption is drawing near. In other words, when the world sees an ending, the believer sees a beginning.

This contrast is essential. Jesus does not call His followers to panic but to posture. He does not call them to dread but to expectation. He does not call them to collapse inward but to rise in confidence. In a world defined by reaction, Jesus calls His people to response—a response rooted in the certainty that God does not lose His children in the chaos of collapsing systems. Redemption does not arrive when everything is calm; redemption arrives when everything else fails. That is why Jesus invites us to lift our heads. Our salvation is not behind us; it is approaching. Our hope is not fading; it is advancing. And our future is not determined by the instability of the world, but by the steadfastness of the One who promised to return.

Part of what makes Luke 21 so profoundly relevant is the way Jesus ties spiritual vigilance to ordinary life. He warns that the hearts of people will become heavy with dissipation, drunkenness, and the anxieties of life. It is striking that He pairs reckless indulgence and destructive distractions with the same seriousness as fear and worry. Jesus seems to be saying that the greatest danger in the last days is not merely catastrophic events; it is the slow, quiet erosion of the human spirit through a life numbed by consumption, entertainment, or the relentless pressure to survive. People may not fall away because of persecution; they may fall away because of preoccupation. They may not reject God out of rebellion; they may simply drift because their souls became too tired to look up. This is the quiet tragedy Jesus warns against: not dramatic defiance, but spiritual sleep.

Yet the chapter does not leave the reader in dread or resignation. Jesus offers a final instruction that becomes the anchor of every generation that reads Luke 21: be always on the watch, and pray that you may have the strength to escape what is coming and to stand before the Son of Man. Watchfulness is not paranoia; it is attentiveness. It is living awake in a world that is spiritually sedated. It is refusing to let your heart become dulled by the noise around you. It is learning to recognize God’s movement even when it happens beneath the surface. And prayer, in this context, is not merely asking for help but aligning your soul with the reality of the Kingdom so deeply that you remain steady when the world becomes unsteady. Jesus is not telling His followers to survive the future by their own strength; He is telling them that the strength they need comes from the connection they maintain with Him in the present.

Luke 21 ends with a rhythm of Jesus teaching in the Temple by day and withdrawing to the Mount of Olives at night, a rhythm that embodies the very message He delivered. Public engagement anchored by private communion. Ministry rooted in stillness. Clarity sustained by prayer. The One who tells the world to stay awake models what a life of spiritual attentiveness looks like. And the people rise early each morning to hear Him because something in them knows they are listening to a voice unlike any other. A voice that does not merely predict the future, but defines it. A voice that does not tremble at history, but directs it. A voice that does not fear the collapse of earthly kingdoms, because it comes from the One whose Kingdom will never collapse.

The significance of Luke 21 is not merely in the events Jesus describes, but in the kind of people He is shaping through His words. When you listen to Him speak in this chapter, you realize He is not trying to produce fearful followers but focused ones, not panicked believers but prepared ones, not people who cling to the world with white-knuckled desperation but people who live with the kind of spiritual clarity that sees through the illusions of the age. The entire chapter operates like a spiritual lens being adjusted with gentle, precise movements until what is blurry becomes sharp and what is overwhelming becomes understandable. Jesus knows the human mind becomes anxious when confronted with uncertainty, so He replaces uncertainty with awareness. He knows the human heart becomes unstable when pressured by chaos, so He replaces chaos with calling. And He knows the human soul becomes weary when burdened by the world’s weight, so He replaces burden with a promise: you are not forgotten, and the story is not spiraling out of control. Rather, it is moving exactly as He has said it would, and He will not lose you in the middle of it.

The quiet brilliance of Luke 21 is that Jesus does not separate the spiritual life from the external world. He never speaks as if faith is something sealed off behind the walls of private devotion. Instead, He shows that faith is lived at the intersection of history and eternity, where empires rise and fall, where nations tremble, and where ordinary disciples continue carrying the flame of the Kingdom in a world that has lost its bearings. Jesus does not promise them ease; He promises them endurance. He does not guarantee that the storms will bypass them; He guarantees that the storms will not break them. Every instruction He gives is shaped by the truth that faith is not an escape route but a lifeline, not a shelter from hardship but a strength within hardship, not a fantasy that denies reality but a clarity that interprets reality through the eyes of God. This is why He speaks with both tenderness and authority. He is not simply informing them of the future, but forming them for it.

Many people assume Jesus gives these warnings only to frighten or to condemn, but the opposite is happening. These words are mercy. They are preparation. They are the loving instructions of a Savior who refuses to let His people wander blindly into a season of upheaval. If you listen carefully, His voice in Luke 21 carries the same tone a loving father uses when he kneels beside his child before a difficult journey, ensuring they understand what lies ahead, not to scare them but to steady them. Jesus refuses to let the disciples mistake temporary structures for eternal foundations. He refuses to let them anchor their hope in buildings, positions, institutions, or cultural stability. He wants their security to be unshakeable because it is rooted in something unshakeable. And so He tells them plainly what will fall, not to traumatize them, but to liberate them from trusting what was never meant to carry the weight of their souls.

The destruction of the Temple becomes a symbol for every generation, because every age has its own temples: the things it believes will outlast time, the things it expects to remain unmovable, the things it assumes are too powerful to fall. But history has shown, again and again, that every human kingdom has an expiration date. Every empire eventually becomes a relic. Every monument eventually becomes a ruin. Yet the Kingdom Jesus announced continues to advance, continues to transform lives, continues to rewrite the human story from within. Luke 21 is not about despair; it is about discernment. It is about learning to see what God sees. It is about recognizing that even when the visible world trembles, the invisible Kingdom stands. And it is about understanding that the true stability of a believer does not come from the world’s predictability, but from God’s faithfulness.

When Jesus transitions to the imagery of cosmic signs and global upheaval, He is not describing fantasy but revelation. The world as we know it is not built to last forever. Creation itself is waiting, groaning, longing for the redemption God promised. Humanity often believes it can sustain the world through innovation, governance, or progress, yet Jesus tells the truth without apology: there is coming a moment when the world will reach the limits of its strength. The systems we trust will fail. The wisdom we rely on will falter. The stability we take for granted will evaporate. And in that moment, humanity will finally confront the truth it has ignored for centuries—that the world is not self-sustaining, and that the true King is not any earthly ruler, but the Son of Man returning in glory.

And yet, for the believer, this moment is not terror but triumph. Jesus says, when these things begin—begin, not conclude—stand up and lift your head, for your redemption is near. That single instruction overturns every instinct of human fear. When the world collapses inward, the believer rises upward. When the nations tremble, the believer looks toward the horizon where the King is approaching. Jesus does not tell His followers to cower, hide, or despair; He tells them to stand. This standing is not physical arrogance but spiritual clarity. It is the recognition that the moment the world fears most is the moment the believer has longed for. It is the moment when faith becomes sight, when promises become reality, when the hopes of every generation reach their culmination. Jesus is not warning His followers of disaster; He is preparing them for deliverance.

The parable of the fig tree deepens this message. Jesus, as He often does, frames eternal reality within the rhythm of creation. Just as leaves on the tree signal the change of seasons, so the signs He describes signal the approaching fulfillment of God’s plan. This is not meant to produce date-setting or speculation, but awareness. Jesus wants His followers to live with the same attentiveness a farmer has when studying the land. Disciples are not called to panic at every tremor, but to discern the difference between random disturbance and prophetic fulfillment. They are not called to interpret every global event through fear, but through faith. And they are not called to be experts in speculation, but experts in spiritual readiness. The fig tree does not try to predict the exact moment summer arrives; it recognizes the indicators and responds naturally. So must the follower of Jesus.

Jesus then speaks a phrase that has puzzled many: this generation will not pass away until all these things take place. Some assume this refers to the immediate disciples, but Jesus is speaking of the generation that sees the signs unfold. Every time Scripture refers to a generation in a prophetic context, it refers to the collective group living during the unfolding of the events described. Jesus is not limiting the prophecy to the first century; He is anchoring its fulfillment to the season when the signs converge. And so, the chapter retains its relevance not because it predicts the exact timing, but because it shapes the posture required for every generation leading up to that moment.

Then Jesus offers a statement that feels like both thunder and reassurance: heaven and earth will pass away, but His words will never pass away. This is one of the most astonishing declarations in Scripture, because Jesus is placing His own words above the durability of creation itself. Everything visible will fade. Everything tangible will vanish. Everything temporal will dissolve. But what He has spoken will remain. This is the foundation that believers are meant to stand on when the world shakes. If His words outlast the world, then the one who stands on His words stands on something more secure than the world itself. And this is what Luke 21 is ultimately calling us toward: the kind of life anchored so deeply in His words that no amount of pressure, confusion, or turmoil can uproot it.

It is no surprise, then, that Jesus warns His followers not to let their hearts become weighed down. A weary heart is more dangerous than a hostile world. A distracted mind is more destructive than external persecution. Jesus describes dissipation and drunkenness not merely as immoral behaviors but as symptoms of a soul trying to numb itself. When life becomes overwhelming, many people try to escape through distraction, indulgence, or avoidance. Others collapse under the anxieties of survival. But Jesus warns that these inward conditions make the heart too heavy to respond when the moment of divine awakening arrives. The danger is not only missing the signs, but missing the ability to stand with clarity when they appear. A heart weighed down is a heart unable to lift its head. And so Jesus urges a life of attentiveness, not fear-driven but faith-driven, where the mind stays awake, the spirit stays connected, and the soul stays receptive.

Luke 21 concludes with an image of Jesus that is both simple and profound: He teaches in the Temple during the day and withdraws to the Mount of Olives at night. This rhythm is the secret to understanding the entire chapter. Jesus does not ask His followers to live in unbroken adrenaline, nor to survive by constant vigilance. He models a life of outward ministry grounded in inward communion. He teaches publicly from a place of private strength. He confronts the chaos of the world from a place of deep intimacy with the Father. He navigates conflict with clarity because His spirit is aligned with heaven. And the people, sensing something in Him that cannot be manufactured, rise early each morning to listen. Their hearts recognize the voice that carries eternity in it.

When you allow Luke 21 to settle into your spirit, you realize it is not primarily a chapter about the end of the world; it is a chapter about the end of superficial faith. It is the moment Jesus draws a line between those who follow Him casually and those who follow Him with conviction. It is the moment He invites His disciples to move from admiration to allegiance, from emotional reaction to spiritual readiness, from living in the comfort of the present to anchoring their identity in the unshakeable reality of His return. The chapter forces us to ask what we are really trusting. Are we trusting systems, comforts, routines, or assumptions? Or are we trusting the One who will remain when everything else fades?

If Jesus were standing in front of us today, speaking Luke 21 with the same tone He used that day, He would not be calling us to fear the future; He would be calling us to prepare for it with the same quiet courage He instilled in His disciples. He would be calling us to cultivate a heart like the widow’s: surrendered, trusting, unafraid to release what keeps us comfortable. He would be calling us to stop anchoring our identity to things that cannot survive the shaking. He would be calling us to discern the signs not so we can speculate, but so we can stay steady. He would be calling us to lift our heads when the world bows low in fear. He would be calling us to stay awake when others fall asleep spiritually. And He would be calling us to pray for the strength to stand before Him with a life lived in faith, hope, and steadfast endurance.

Luke 21 is ultimately an invitation to live with clarity in a world addicted to confusion. It is an invitation to live with courage in a world trained by fear. It is an invitation to live with hope in a world shaped by despair. It is an invitation to live with spiritual attention in a world lulled into distraction. And it is an invitation to anchor your soul in a Kingdom that cannot be shaken, a Word that cannot fade, and a Savior who will return exactly as He promised. The chapter is not a threat; it is a roadmap. It is not a warning of doom; it is a promise of redemption. It is not the collapse of hope; it is the revelation of hope. And it calls every reader, in every generation, to be a person who lives awake, lives ready, and lives confident in the God who holds all of history in His hands.

Luke 21 is not meant to frighten the believer but to fortify them. Not to leave them anxious but to make them anchored. Not to create dread but to produce discernment. And when you finally reach the end of the chapter, what remains is not fear but a strange, steady courage. The kind of courage that comes from knowing Jesus sees the future with absolute clarity and still tells you not to be afraid. The kind of courage that comes from knowing He has already accounted for every hardship, every pressure, every event, and every moment you will face. The kind of courage that comes from living with your heart tuned to the voice of the One who said heaven and earth will pass away, but His words will never pass away. And when His words become the foundation beneath your feet, no amount of shaking can unsettle you.

Your friend, Douglas Vandergraph

Watch Douglas Vandergraph’s inspiring faith-based videos on YouTube: https://www.youtube.com/@douglasvandergraph

Support the ministry by buying Douglas a coffee: https://www.buymeacoffee.com/douglasvandergraph

Anticlimactic

from The happy place

For some weeks now I’ve had a Teams notification on the computer. I’ve got a new chat message somewhere, but when I click on it, on the icon, there’s nothing new.

Nothing is there

And yet the notification,

Were I an Electron app (God forbid), I’d be this Teams installation with this confused message which is not to be found, probably an important message hidden in some obscure channel

Or maybe just nothing

But I’ll never know and thank God I’m human although I’d like to be an elf or something but that’s off topic

We just get this hand of cards we’ll have to play as best we can

No one asks for this

The Unseen Carriers of the Kingdom

from Douglas Vandergraph

There is a quiet thread woven through the New Testament that rarely receives the attention it deserves, yet it shapes the architecture of the entire movement of Jesus in ways that are nothing short of astonishing. When I sit with the Scriptures long enough for the noise of the world to fall away and for the text to breathe in the way it was always meant to, I begin to sense the overwhelming intentionality behind the stories that are told and, perhaps even more importantly, the stories that are left untold. Not untold in the sense that their actions were hidden, but untold in the sense that their names were withheld. That deliberate absence, that purposeful silence, that gentle restraint from identifying certain people becomes its own kind of revelation once you notice it. Because once you notice it, you begin to realize that God was shaping a message that would stretch across millennia and touch the lives of every person who would ever feel unseen, unnoticed, uncelebrated, or overshadowed by the loudness of the world. And when you let that truth land deeply in your spirit, you begin to see a portrait of a God who does not just work through the headline-makers of history, but through the hidden ones whose faithfulness became the backbone of the Kingdom.

As I look across the span of the New Testament, what becomes clear is that Jesus consistently lifted up people the world barely acknowledged, as if He were quietly rewriting the criteria for greatness from the inside out. He honored widows whose offerings seemed small to everyone else but whose trust in God outweighed their financial poverty. He praised the faith of centurions whose names never appear in the story but whose belief surpassed that of the religious elite. He told stories where heroes were hidden in plain sight: a woman who swept her entire home looking for a lost coin, a shepherd who left ninety-nine sheep to find one, a servant who remained faithful with a few talents in the shadows where no one could applaud his diligence. In each of these narratives, something deeper is happening beneath the surface. Jesus is telling us that the Kingdom does not measure value the way the world measures value, and that the people we overlook are often the very people God is most inclined to use. It is as though every unnamed character becomes a mirror, inviting each ordinary believer to see themselves in the story, to realize that their anonymity does not diminish their worth in God’s eyes.

When I reflect on these moments, I begin to understand why so many people in the modern world resonate with the idea of being unseen. We live in an era of constant noise, constant comparison, constant striving for recognition in a culture that seems to reward visibility almost as much as virtue. People wake up each day carrying responsibilities no one praises, doing acts of love that never trend, shouldering burdens that never make it into conversation. They raise children at kitchen tables where no cameras are watching, care for aging parents in quiet moments where exhaustion weighs heavy, show up to jobs where their effort is taken for granted, and hold families together in ways that no one will ever truly understand. And in the midst of that, they hope their lives still matter to God. They wonder whether their story still holds weight, whether their contributions have been seen by the One whose eyes are said to roam the earth seeking the faithful. The New Testament answers those questions with a gentle certainty: yes, you matter, and yes, heaven sees you in every hidden moment of obedience.

As I continue to explore this theme, I begin to realize that the anonymity in Scripture is not a gap; it is a message. It is not an oversight; it is a teaching tool. It is not a lack of detail; it is a revelation about the heart of God. If the Scriptures included the names of every single person whose faith contributed to the early movement of Jesus, it would be easy for generations to come to believe that significance belongs only to the ones with names recorded in holy history. Instead, by leaving so many people unnamed but unforgettable, God created a pathway where every generation could walk into the story, not as spectators but as participants. It is as though the Spirit left open seats at the table for every believer who would ever feel like their life existed in the margins. By leaving space in the narrative, the New Testament becomes not just a record of past faithfulness but an ongoing invitation for present faithfulness. The story keeps breathing through the lives of those who embrace the hidden, humble, unseen work of the Kingdom.

The deeper I go into this truth, the more I realize that anonymity itself becomes a kind of refining fire in the life of a believer. When you serve without applause, your motives are purified. When you love without recognition, your heart is tested and strengthened. When you give without being seen, your faith grows roots that sink deeper than any public affirmation could ever reach. The early church was built not on celebrity but on surrender, not on fame but on faithfulness, not on spotlight but on sacrifice. These unseen believers carried the gospel into places where it was dangerous, into homes where it was illegal, into cities where it cost them their social standing, their families, and sometimes their very lives. They carried letters across miles of rough terrain, they opened their homes for gatherings, they risked imprisonment for hospitality, they shared meals with strangers, they prayed in secret, and they lived with a courage that would have made angels stop and watch. And yet their names are lost to us. Their actions are not.

What moves me most is the realization that God could have chosen to record their identities. He could have given us lists of names and biographies and lifelong stories. But He didn’t, because the purpose was not to elevate the individuals but to elevate the principle: the Kingdom advances through the faithful, not the famous. If God had named every hidden servant, generations might have read their stories as something unreachable, something reserved for an elite group of spiritual giants. But by leaving them unnamed, the New Testament says something far more generous: this could be you. You, with your quiet faith. You, with your uncelebrated obedience. You, with your daily sacrifices, your sleepless nights, your whispered prayers. You, with your desire to honor God in the unseen corners of life. The Kingdom was designed for people like you because the God who sees in secret delights in the ones who live in secret.

As I meditate on this truth, I begin to see the heart of Jesus more clearly. He was not drawn to the public image of people; He was drawn to their depth. He was not impressed by status; He was moved by sincerity. He did not elevate the ones who demanded attention; He elevated the ones who simply believed. And that remains true today. There is something sacred about the person who keeps showing up when no one notices, something holy about the person who stays faithful in seasons that feel thankless, something powerful about the person who continues to serve when exhaustion presses heavily on their shoulders. These are the ones God entrusts with His deepest work. These are the ones He shapes into pillars. These are the ones heaven celebrates long before the world ever sees their value. In this truth lies an unshakable hope: the God of the New Testament is still writing His story through people who have never chased recognition but have chased His heart.

As I move deeper into this exploration, I begin to understand that the New Testament’s emphasis on hidden faithfulness becomes a direct invitation for every generation to redefine greatness in a world desperate for attention. We have slowly drifted into an age where visibility is treated as virtue, where applause is mistaken for anointing, and where recognition is often confused with calling. Yet the Scriptures call us back to something far quieter and far more transformational. They remind us that God does not measure our impact by the reach of our platform, but by the reach of our obedience. They remind us that heaven’s algorithms are not influenced by trends, metrics, or public enthusiasm. They remind us that a whispered prayer in the darkness can shape history more than a shouted proclamation in the spotlight. When we allow this truth to reshape our understanding of ourselves, a powerful liberation begins to happen in the soul. Suddenly, a person does not need to be seen to be significant. They do not need to be known to be meaningful. They do not need public validation to carry divine purpose.

What becomes unmistakably clear is that the New Testament celebrates the people who live in the shadows with a faith that does not require recognition to remain steadfast. Every unknown disciple who carried the gospel across rugged roads, every unnamed woman who opened her home to weary believers, every quiet follower who risked their livelihood to be part of the early gatherings, every unrecorded encourager who strengthened the apostles in their lowest moments, every nameless intercessor who prayed with trembling hands for the church to survive another day—these are the details Scripture chooses not to spotlight with names but to spotlight with spiritual weight. Their hidden sacrifices were the scaffolding of revival. Their unseen courage was the architecture of the early church. Their ordinary faithfulness, multiplied thousands of times over, became the engine that carried the message of Jesus across empires, languages, and centuries. The absence of their names is not an erasure of their legacy; it is a divine strategy to ensure that their legacy becomes accessible to all who follow.

When a person begins to grasp this truth, it dismantles the lie that their life is too small to matter. It confronts the quiet narrative that has woven its way into many hearts—the narrative that says meaning comes from achievement, applause, recognition, or being known by the masses. But God tells a different story through the pages of the New Testament, one that lifts the weight off the shoulders of every listener who has ever felt like their efforts disappeared into the background. He shows us that the ones who felt unseen were the ones who carried the greatest spiritual authority. He shows us that the ones who lived quietly were the ones who shaped eternity most loudly. He shows us that the ones who were overlooked on earth were honored in heaven’s records with a glory that cannot fade. And if God has always worked through the invisible faithful, then no one who follows Jesus today ever walks in insignificance. Their entire life becomes a sacred vessel through which God continues the same unstoppable pattern He established in the first century.

The more I sit with this truth, the more I realize that this is not merely an observation about the past; it is a framework for how God continues to move today. If the New Testament was built through hidden servants, then the present-day church is also being carried by hidden servants. It is carried by parents who pray over their children late at night when worry comes in waves. It is carried by employees who work with integrity in environments where compromise is celebrated. It is carried by caretakers who quietly serve loved ones with tenderness that rarely receives recognition. It is carried by volunteers who show up early and leave late, creating spaces of worship and growth for others. It is carried by people who endure private battles with faith still intact. It is carried by believers who stand at gravesides with tears in their eyes and hope in their hearts. It is carried by the resilient, the kind, the steady, the gentle, and the ones who persist in loving others even when love seems costly. These modern-day unsung disciples mirror the nameless ones in Scripture, not because they seek anonymity but because their hearts remain anchored in something deeper than public applause.

What astonishes me is the way Jesus Himself affirms the value of the unseen in His teachings. When He tells His followers that the Father sees what is done in secret, He is not merely offering comfort; He is correcting an entire human instinct. He is pulling the soul away from the need for validation and pulling it toward a faith rooted in the unseen God who measures devotion differently than the world does. He teaches that the quiet giver, the hidden intercessor, the gentle servant, the one who forgives without being thanked, the one who keeps walking when weary—all of these hold a beauty that heaven celebrates even when earth does not. This is why Jesus pointed so often to the ones the world overlooked. Not because they were incidental characters, but because they embodied the truth that the Kingdom does not rise on fame but on faithfulness. If the New Testament had celebrated celebrity, we would all be left chasing shadows. But because it celebrated hiddenness, we find ourselves invited into a purpose no one can take away.

As I consider all of this, what becomes overwhelmingly clear is that this theme has the power to heal hearts in a way that few truths can. It reaches the person who has spent their entire life giving without receiving. It reaches the one who has carried a family, a marriage, a ministry, or a burden in silence. It reaches the one who has served others so faithfully that they have forgotten what it feels like to be appreciated. It reaches the one who feels backgrounded in their own story. It reaches the one who wonders whether God sees their effort, their loyalty, their exhaustion, or their long nights of pressing through. And it tells them that God has never looked away. Every hidden act of love has been seen. Every quiet prayer has been heard. Every unnoticed sacrifice has been recorded in the only place where records never fade. And every moment that felt small to them has been magnified in the eyes of heaven.

The New Testament teaches us that there is no such thing as an unimportant believer. There is no such thing as a life that doesn’t matter. And there is no such thing as insignificance in the hands of a God who builds His Kingdom through the ones who are willing to say yes in the shadows. When you take this truth into your soul, you find a freedom that the world cannot manufacture and a purpose that no circumstance can diminish. You find that the God who worked through the unnamed faithful then is still working through the unnamed faithful now. And in that realization comes a quiet strength, a deep assurance, a sense of holy dignity that rises like morning light in the hearts of those who have long walked unseen.

Your friend, Douglas Vandergraph

Watch Douglas Vandergraph’s inspiring faith-based videos on YouTube: https://www.youtube.com/@douglasvandergraph

Support the ministry by buying Douglas a coffee: https://www.buymeacoffee.com/douglasvandergraph

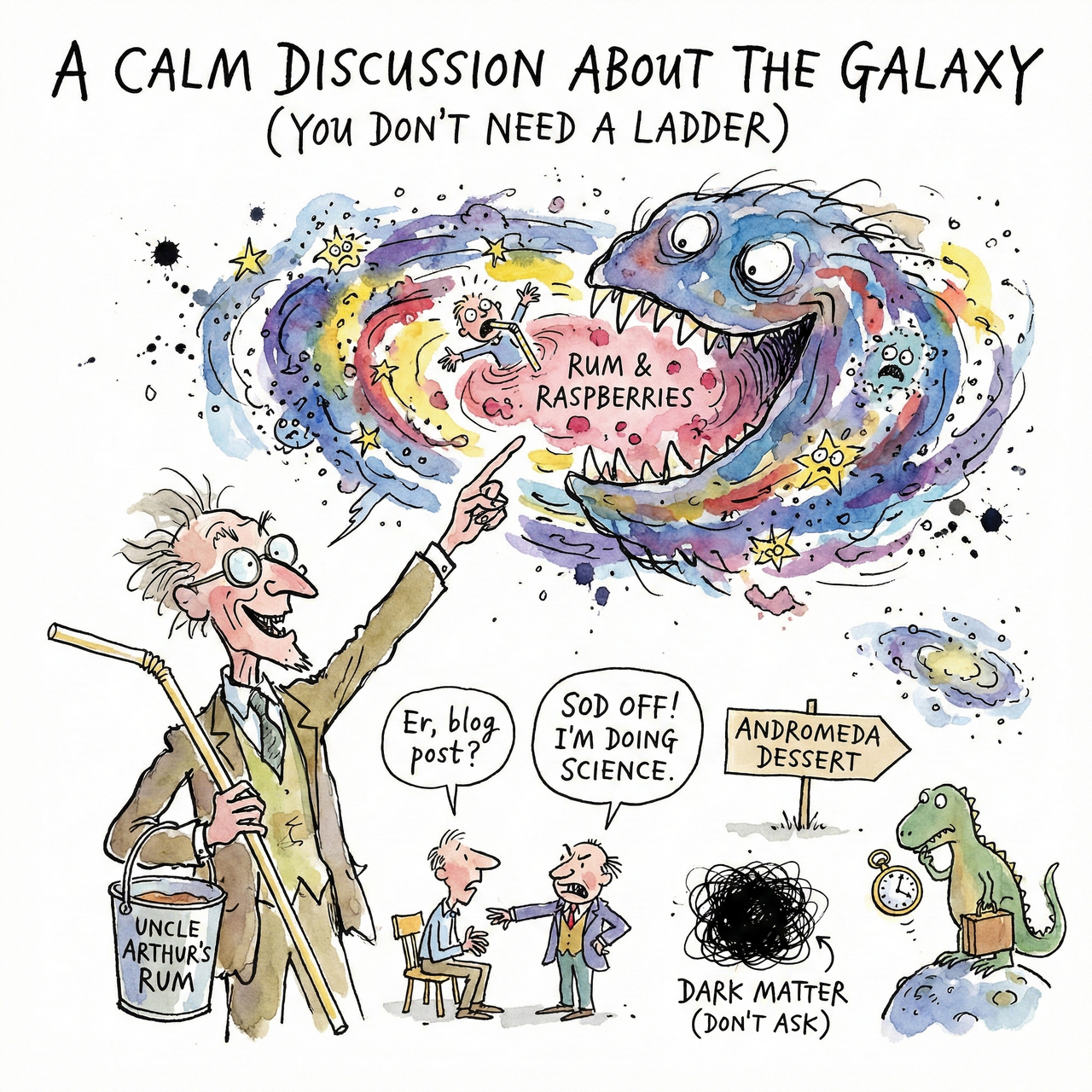

A Calm Discussion About The Galaxy (You Don’t Need a Ladder)

from  Andy Hawthorne

Right, pull up a chair. Not that one, it’s got a loose leg and a grudge against shins.

I thought I would share with you my comprehensive knowledge of our galaxy. Because I know lots of interesting things about it.

For example, you may hear the galaxy described as a ‘majestic spiral’. Total twaddle. It is, in fact, a celestial hoover that is very hungry. Our galaxy is basically that one relative at a wedding buffet who hides cocktail sausages in their pockets. We’re not floating in space; we’re just sitting in the stomach of a giant that’s currently eyeing up Andromeda for dessert. It puts the ‘Great Void’ into perspective: it’s just the galaxy waiting for the kettle to boil.

The scientists, men with very high foreheads and even higher trousers, say the centre of the Milky Way smells of rum and raspberries. This is a lie. If it smelled of rum, my Uncle Arthur would have been there years ago with a straw and a bucket, and we’d never have seen him again.

The truth is much worse. The Milky Way is a cannibal. It’s currently eating a smaller galaxy called Sagittarius. Just swallowing it whole! No salt, no pepper, not even a polite “Do you mind if I tuck in?”

Those same boffin-types estimate there are hundreds of billions of galaxies in the observable universe. Don’t know about you, but I find that inconsiderate. Because I can’t keep track of my socks.

While we are dealing with baffling things, look outside. Your window, not mine; I can’t have you all at my house. All that stuff you can see, cars, houses, that pigeon on a chimney, is only taking up 10% of what is actually there. Yes, that is what I said. 10%. The rest is taken up by dark matter. So dark, nobody can see it. You can’t touch it and don’t bother trying to explain it. It’s a dark matter.

Here’s another thing: we are currently hurtling toward the Andromeda Galaxy at about 250,000 miles per hour. We’ll smash into it in about 4 billion years. That is going to be one long, drawn-out insurance claim, isn’t it?

“Er, blog post?”

“Yes? Who are you? Stop interrupting, I’m doing science.”

“I’m Andy, the author of this blog—“

“Well, sod off then, while I finish revealing more splendid galaxy knowledge.”

“Okay, fine. I just wanted to make it clear that you are not an astronomer.”

“Rude. I didn’t say I was.”

“Oh, I think the readers will pick up on that…”

Never mind him, here’s another great fact. The sun (and us) orbits the centre of the galaxy. It takes a while. 230 million years to complete one trip. Our local bus services have their timetables based on it. The last time we were in this exact spot in the galaxy, dinosaurs were just starting to think about maybe evolving. It gives a whole new meaning to “Are we there yet?”

Anyway, I thought I’d share a few snippets of knowledge. I’m one of those people who love science, astronomy and all that clever stuff while, at the same time, not really having a clue how it all works.

Right, must go, I have to water the goldfish.

The world is a harsh place for soft hearts

from  The happy place

It looks dirty, but it’s merely heavy snowfall lit by these streetlights against the dark gray sky, creating this monochromatic brownish look to the world, but it’s not that cold.

There was another dog out there, my black one already scared from anticipation, and then, when the nightmares come true; right around the corner there was this mongrel attached to a young man!

He just lay there in the snow (the dog, not the boy), patiently waiting, obscured by the falling snow, and then they finally left and the

Panic in my little black dog subsided and he too was able to pee on the fresh new canvas of deep newfallen snow

And by comparison, other dog owners get to feel real smug about themselves because their dogs don’t freak out about the darkness and the

Mongrels

And

The

Luggage with wheels

I, however, have a deep understanding of how scary it might seem outside

Not only does it seem scary, it is.

It is.

Although it’s not these known horrors which are the worst: it’s the silence of those you thought were your friends or even those you counted as your family.

The sharp edge of cowardice

That’s why I’ll advise kindness always

And bravery.

Unbearable

from An Open Letter

I realized the “Letters to E #4” was just a draft on my laptop so there it is now. Out of order but oh well.

I talked with my therapist, and I was able to speak about how I was really struggling with this feeling of conflict from her words and her actions. What happened with her roommates was really traumatic for me: right before an important meeting, having those people enter my house and physically block me, antagonize me, say incredibly shitty things, and even record me without my knowledge while I’m crying.

I struggle a lot with crying. There was a period of four years during high school where I couldn’t cry once, and at one point I had even written a suicide note and planned to hang myself and still couldn’t cry. I only ended up crying in college once I was out of that house, and still it was really fucking hard for me to do that. Growing up as a kid, whenever I would cry I would be hit by my dad who would yell at me to stop crying, and continued to hit me until I stopped. I learned that it is not safe to cry in front of others, and that became locked into me. I started to feel a little bit safer with E, and I was even able to cry in front of her a few times. That made it feel so much more like a betrayal when she came to my house and broke up with me so aggressively, and then after I had started sobbing she told me how her roommates were downstairs. She then pushed on it even more and they started going around the house with bags and taking her stuff, all while her roommates laughed and made shitty comments. Me crying was met with shitty comments, laughing at me, mocking me, and holy fuck. Writing it down makes me want to cry so badly and I want to just curl up into a ball and hide. I wish it didn’t happen. I wish it didn’t happen so fucking badly. I want to throw up so fucking bad right now. I was supposed to be safe, and I was supposed to be healing and getting more comfortable, and it feels like I was hit so far back into that cage I was trapped in as a kid.

The part that hurts me and causes so much conflict now is how she listened to me, and acknowledged a lot of stuff and validated how I felt. She apologized a lot, and wanted to show that she meant it and it wasn’t just words. But she hasn’t talked to them about how what happened was not ok. Or how it was fucked up the stuff they did, and how that was regardless a shitty thing to do to someone. Instead she made more plans with them, and is hanging out with them.

If someone you knew had a nazi friend, and you were someone directly hurt by that, how would you feel if they continue to interact with them? They don’t say anything or push back on nazi comments, and had even done that stuff with them earlier against you. If they apologize and say they’ve changed, but then continue to hang out with that person while not talking to them about how what happened was wrong, what would you think?

I think this may be a dealbreaker for me in some ways, if she cannot recognize how what happened was not ok, and show that she isn’t that person anymore. That has to come from accountability, and that includes talking to her fucking attack dogs that did that stuff to me. I just don’t feel safe until then, if I’m being honest. How am I supposed to believe that I am safe if she’s telling me that she realizes how what happened was fucked up and not ok, but keeps making plans to hang out with them without even talking to them about it?

I know that I need to just wait, and right now my emotions are really high, and it would be healthy if I can take a bit of space and wait a bit. I am strong. I am strong. I am strong. I am strong.

Letters to E #4

from An Open Letter

Situation: E broke up with me in such a nuclear way, and it came out of nowhere.

Thoughts: This feels so unfair to me, and this feels like a lack of emotional regulation and super high volatility. I don’t know how I can move past this and not feel like I’m walking on eggshells.

Feelings: I feel like I don’t deserve this, and that it’s unfairly being pinned on me, for her lack of communication. I feel very upset and hurt, and betrayed in a way. I told her that this was what I was afraid of, and she did it.

Behaviors: We either immediately break up, or I suck it up and feel uncomfortable and unsafe emotionally for a while.

Thoughts: She was emotionally volatile, and she doesn’t have the best luck with her childhood either. Emotional regulation is hard for her, and this was a point where there was just too much volatility. She’s just faced with a lot of pain and discomfort, and she didn’t know how to communicate well enough to release that tension, and this happened. She doesn’t want to hurt me, she just doesn’t want to keep hurting.

Feelings: I mean I do love her. And it hurts because I feel wrongly hurt, but also she’s been hurting and we are a team. No need to justify why she is hurting or whose “fault” that is. I don’t feel like she wants to break up, she wants to just stop feeling this much.

Behaviors: Yes I am within my rights to leave. So is she. But I care about her, and I can empathize with where she is coming from. I also believe that this volatility will go down, and I know that the good times are good with her.

I really like how we get to trade roles, and you let me be softer and the little spoon.

Massages from you make me feel warm and safe. Same with when you scratch my back, I feel like a cat that just melts.

I really love your family, especially your mom. But also I think H is such a good kid and I want to be in his life if I can be.

You sometimes make really fucking stupid faces, and I think it’s really cute.

You let me vent when I need to, and I know it’s hard and a bit foreign but you let me just rant and say stuff without having to filter it.

You make me feel very attractive, and there’s a lot of different ways where I struggle with that so that means a lot to me.

I love being able to do this with you.

I love how I get to show off to you at the gym, and how you do that little voice and talk about your strong boyfriend.

Our double high fives at the gym are something that I think is very iconic and makes me blush thinking about it for some reason.

I love how much time you make for me. I know that you have plenty of other things but you still manage to carve out a lot of time to spend with me and I appreciate that a lot.

I think you’re a really good designer in The Sims; your houses and apartments are really well structured and it’s something I always marvel at.

I really appreciate how you apologize and go out of your way to take accountability with things, when you do that I feel very secure with you and emotionally safe.

I think you have an incredibly open mind, and that’s really nice because that means that I get to explore and try things with you.

I take a lot of pride in your bench press. Not only the weight, but how much you like it. You aren’t like the other girls.

I think your humor is a lot like mine, and I love how I can make all different kinds of jokes with you.

The fact that we have fights and conflict, but we make up makes me feel secure. I believe that even when things get bad and rough, you still fight to work it out with me.

Your glasses were at first a bit jarring to me, but now I wouldn’t want it any other way. You have such beautiful eyes, and I get to see two different types of them because of your massive lenses. I love it so much, and I almost take pride in that (if that’s ok with you)

I think you’re incredibly resilient for the shit that you’ve been dealt, and how you keep your chin up and fight for what you have and where you’ve gotten. I know that a lot of things are biologically harder for you, and you don’t even complain but you just grind and make it work. I admire that so much about you.

The $100,000 Heartbreak: Rethinking the College Dream in the Age of AI

A friend recently shared some startling news: she and her husband sold their family home to fund their daughter’s education at a prestigious private university. My initial reaction, a skeptical “Really?”, was perhaps a bit too transparent.

“It was her dream school,” she explained. “It’s where we both went. She’s our only child; it felt wrong to deny her that, especially since she wants to work in the arts.” Today, their daughter is happy and successful. On the surface, it’s a win. But as I walked away, I couldn't shake a nagging thought: In an unpredictable world, a paid-off home is a safety net. A degree is a gamble.

The “Education Premium” is Evaporating

My parents mortgaged their house to send me to school in the late 80s. Back then, a degree was the “end-all-be-all.” But the math has changed. We are entering an era where the “college premium” (the extra earning power a degree provides) is shrinking in real time. With the arrival of AI, the entry-level “knowledge work” jobs that graduates traditionally occupied are being vaporized. I recently spoke with a professor at a national university who told me, “For the first time, I have Computer Science grads calling me because they’re driving Ubers to make ends meet.”

The statistics back up this anxiety:

Confidence is cratering: 63% of Americans now feel college isn't worth the cost, up from 40% in 2013.

The Job Gap: Only 12% of current seniors secure a full-time job by graduation.

The Degree Paradox: 52% of recent grads are working jobs that don’t even require a degree.

The Barbell Effect: Elite vs. The Rest